Eden supports OpenAI, Anthropic, Deepseek, Perplexity, and X.ai APIs. For reasoning models, you can choose whether or not to display the reasoning. For Perplexity and OpenAI web search, while citations are inlined, you can also list them in a dedicated buffer.

Eden’s interface is simple:

- You want to ask something to ChatGPT? Call

edencommand, enter your prompt, pressC-c C-cand you’re done. - You want to integrate the response in your

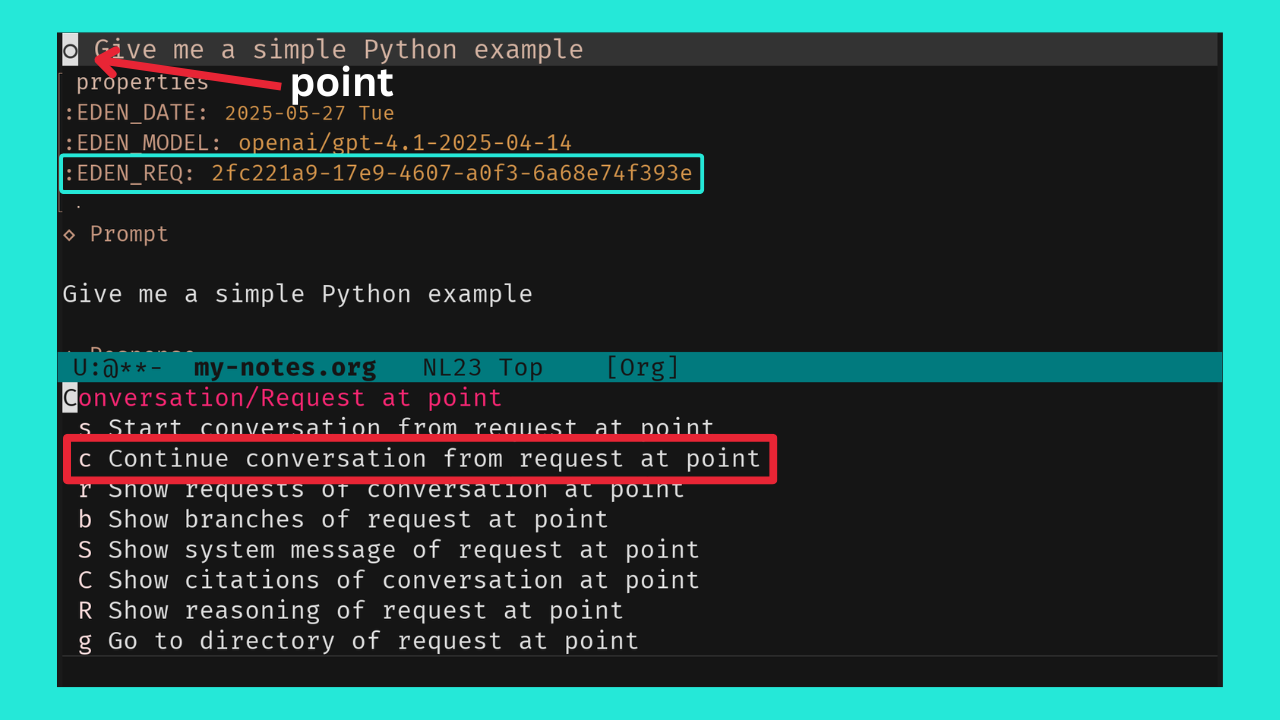

org-modenotes? Just copy/paste it. - You want to continue a conversation saved in your

org-modenotes? On the request at point, calledenwith theC-uprefix argument which opens a transient menu, then presscto to continue the conversation whose last request is the request at point. - You want to continue a conversation from a previous request?

Simple! In the prompt buffer, navigate through the prompt history

using

M-pandM-nto find the desired request, open the menu callingedenand presscto continue the conversation from that request. - You want to switch the API and the model? Just call

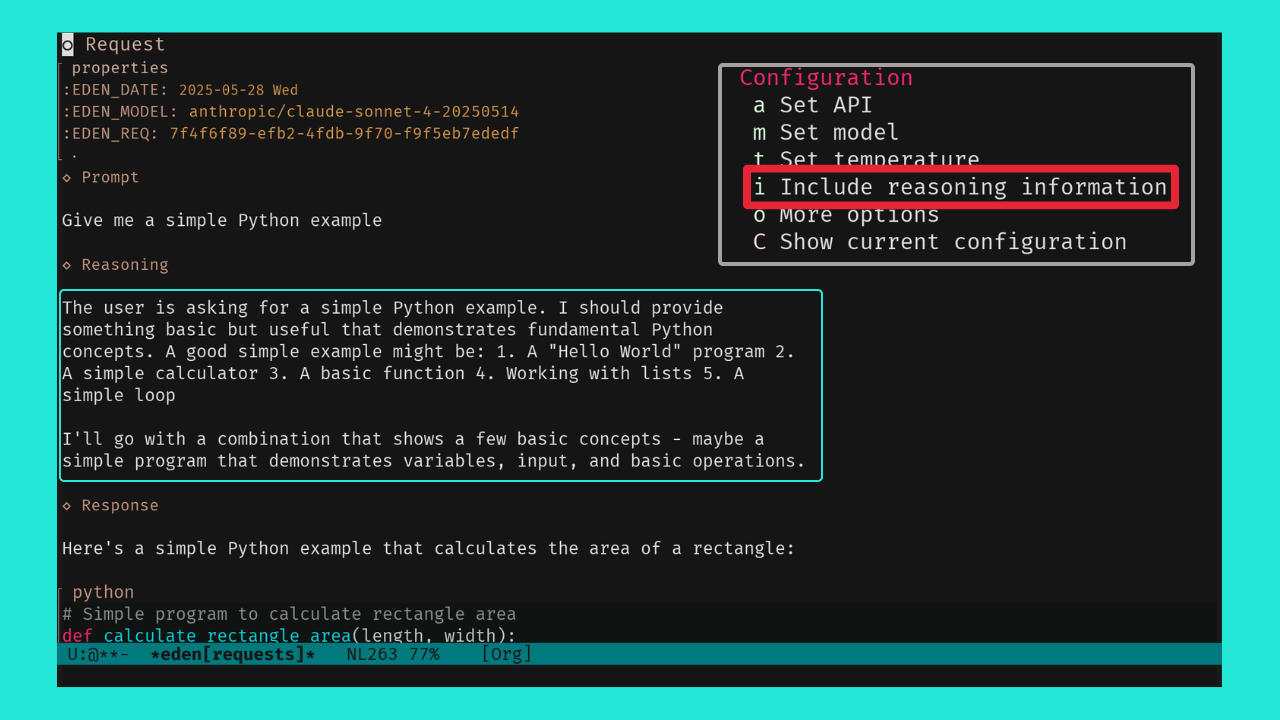

edenin the prompt buffer, then pressato change the API andmto change the model. - You want to read the reasoning behind the response? Great! In the

prompt buffer, call

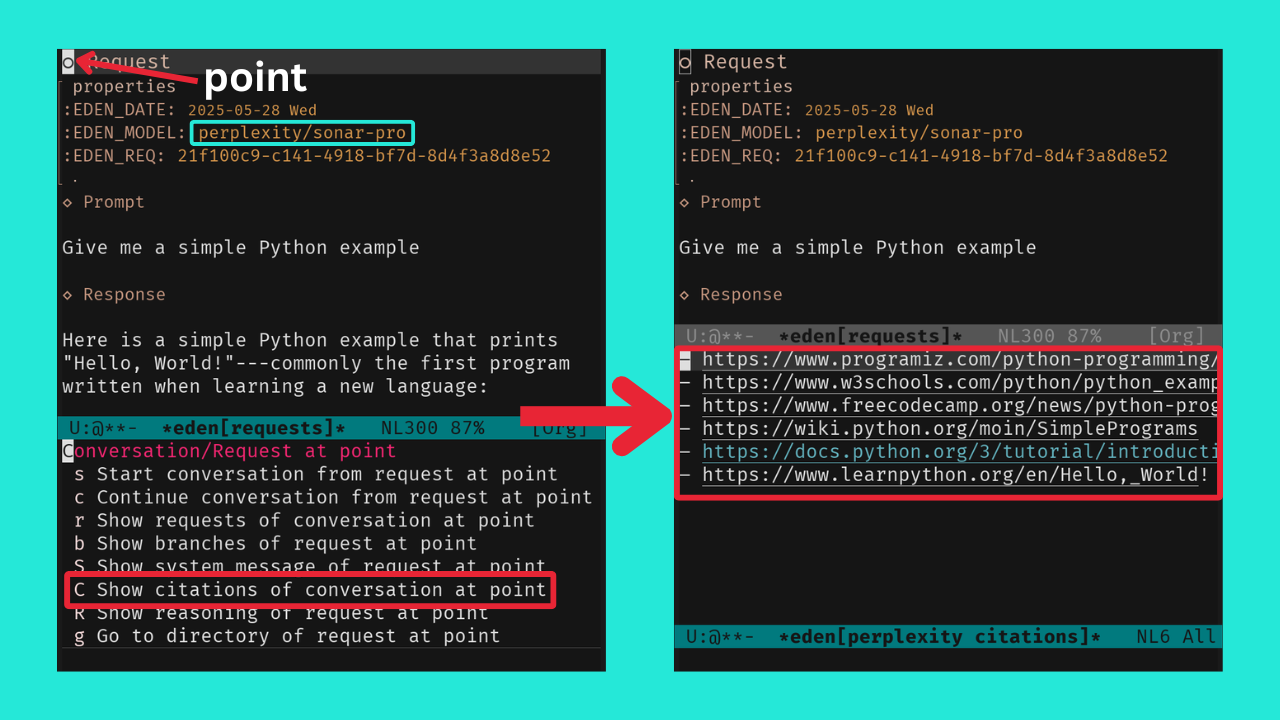

edenand pressito toggle the reasoning. Send your request and get the reasoning in its own section. - You want to list Perplexity citations? Fine! On the request at

point, call

edenwith theC-uprefix argument, then pressC.

Requests are independent by default and are appended to the `*eden[requests]*` buffer. To link them together, you must be in a conversation; in that case, they are appended to unique buffers named like this: `*eden[<conversation's name>]*`.

Note that all requests are stored in the `eden-dir` directory, which defaults to the `eden` subdirectory within your `user-emacs-directory`.

Also note that the prompt buffer `*eden*` uses `eden-mode`, which is derived from `org-mode`. This means that everything you can do in `org-mode` is also available in the prompt buffer, except for the following key bindings: `C-c C-c`, `M-p`, `M-n`, `C-M-p`, and `C-M-n`.

- Ensure the following utilities are installed and present in one of your `exec-path` directories:

  - `curl`
  - `uuidgen`
  - `pandoc`

  You can ask ChatGPT to help you with this (replace `<distribution>` with your system, be it macOS, Ubuntu, etc.):

      How to install the utilities curl, uuidgen, and pandoc on <distribution>?

- Add the directory containing `eden.el` to your `load-path` and require the Eden package by adding the following lines to your init file, replacing `/path/to/eden/` with the appropriate directory:

      (add-to-list 'load-path "/path/to/eden/")
      (require 'eden)

  Or if you’re using `straight`:

      (straight-use-package
       '(eden :type git :host github :repo "tonyaldon/eden"))

- Store your OpenAI API key in either the `~/.authinfo.gpg` file (encrypted with gpg) or the `~/.authinfo` file (plaintext):

  - After funding your OpenAI account ($5.00 is enough to get started), create an OpenAI API key by visiting https://platform.openai.com/api-keys. You can check my video if you’ve never done this before.
  - Add the API key to the selected file as follows:

        machine openai password <openai-api-key>

    where `<openai-api-key>` is your API key.

- Restart Emacs to apply this change.

- Call the command `eden` to switch to the `*eden*` prompt buffer.
- Enter your prompt.
- Press `C-c C-c` to send your prompt to the OpenAI API.
- Finally, the response will asynchronously show up in a dedicated buffer upon receipt.
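If the first request fails, one thing worth ruling out is a missing utility from the setup steps. The following is a minimal sketch that relies only on the built-in `executable-find`; the helper name `my/eden-missing-utilities` is hypothetical and not part of Eden.

```emacs-lisp
(require 'seq)

(defun my/eden-missing-utilities ()
  "Return the utilities from the setup steps that Emacs cannot find.
Hypothetical helper, not part of Eden.  Each program is looked up in
`exec-path' with `executable-find'."
  (seq-remove #'executable-find '("curl" "uuidgen" "pandoc")))

;; Evaluate with M-: or in *scratch*.
;; A nil result means all three utilities were found.
(my/eden-missing-utilities)
```

Any name returned by this call is a program that still needs to be installed or added to a directory listed in `exec-path`.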

To use the APIs from OpenAI, Anthropic, Deepseek, Perplexity, or X.ai, you need to store their API keys in either the `~/.authinfo.gpg` file (encrypted with gpg) or the `~/.authinfo` file (plaintext) as follows:

    machine openai password <openai-api-key>
    machine anthropic password <anthropic-api-key>
    machine deepseek password <deepseek-api-key>
    machine perplexity password <perplexity-api-key>
    machine x.ai password <x.ai-api-key>

You can create new API keys at the following links:

- https://platform.openai.com/api-keys

- https://console.anthropic.com/settings/keys

- https://platform.deepseek.com/api_keys

- https://perplexity.ai/account/api/keys

- https://console.x.ai
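To check that the entries above are readable from Emacs, you can query them with the built-in `auth-source` library. This is only a sketch: it assumes that your `auth-sources` variable includes the file you edited (the default value does) and that Eden looks keys up by these machine names. The helper `my/eden-api-key-found-p` is hypothetical and not part of Eden.

```emacs-lisp
(require 'auth-source)

(defun my/eden-api-key-found-p (host)
  "Return t if auth-source finds a password for HOST, nil otherwise.
Hypothetical helper, not part of Eden.  The key itself is never
returned, so the result is safe to display."
  (and (auth-source-pick-first-password :host host) t))

;; Build an alist of (MACHINE . FOUND) for the names used above.
(mapcar (lambda (host) (cons host (my/eden-api-key-found-p host)))
        '("openai" "anthropic" "deepseek" "perplexity" "x.ai"))
```

A `nil` next to a machine name suggests the corresponding line is missing or misspelled in your authinfo file.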

Eden focuses on conversations without enforcing them; defaulting to independent requests, it makes starting new conversations or continuing from previous ones easy!

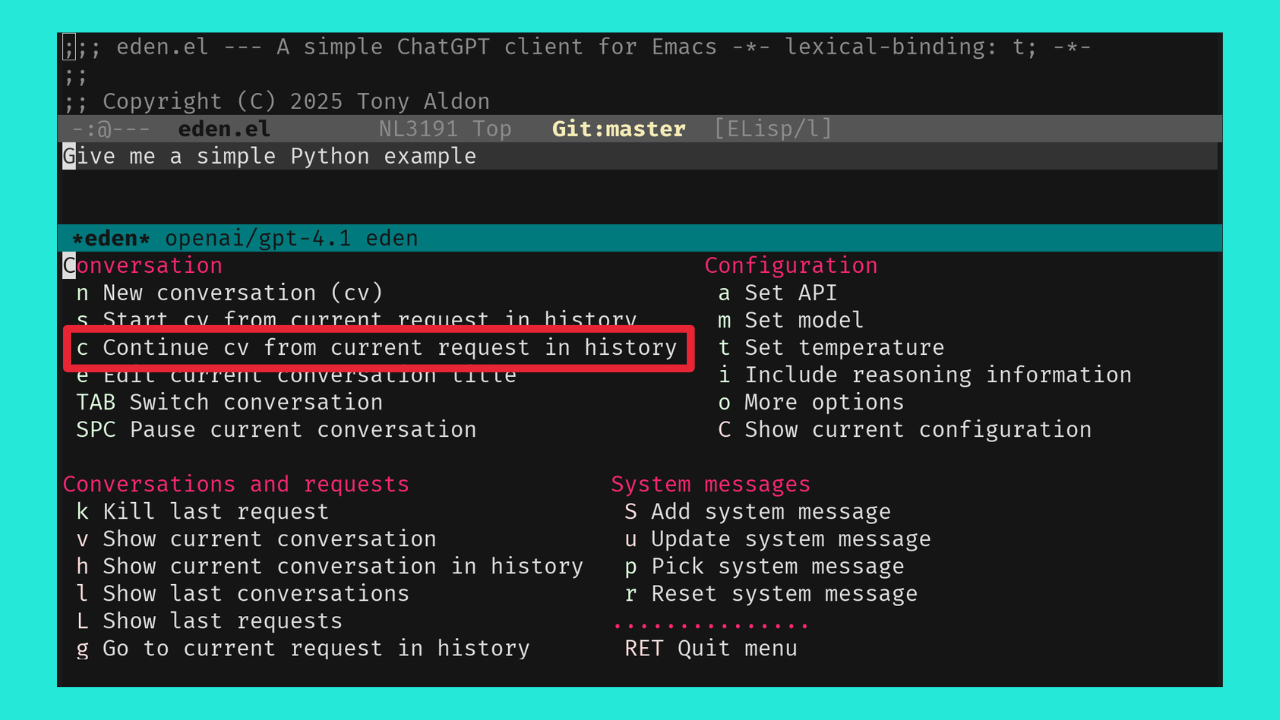

There are several ways to engage in a conversation while in the prompt buffer:

- To start a new conversation, call the `eden` command, press `n`, and enter a title. This creates a new empty conversation, setting it as the current one for all new requests.
- To start a conversation from the current request in history (excluding earlier exchanges), navigate through the history using `M-p` and `M-n` to find the desired request. Then, call the `eden` command, press `s`, and enter a title. This creates a new conversation that already includes one exchange.
- To continue an existing conversation, call the `eden` command, press `c`, and enter a title. This will include all previous exchanges of the current request in history.

You can pause the current conversation by calling `eden` and pressing `SPC`. Subsequent requests will then be independent again.

When you are in a conversation, the name of the conversation appears in the mode line of the prompt buffer, enclosed in brackets.

Note that conversation titles and IDs are not stored; they only exist during your Emacs session. However, you can retrieve any conversation later either by saving it in your notes (with its UUID) or navigating the prompt history with `M-p` and `M-n`.

All requests are stored in the `eden-dir` directory, which defaults to the `eden` subdirectory within your `user-emacs-directory`, providing a range of benefits:

- Requests are always preserved, ensuring you can retrieve them at any time.

- With the request’s UUID, you can track down the associated request and check details like the API, model, system prompt, and timestamp.

- Should an error occur during processing, the corresponding `error.json` file can be consulted for troubleshooting.
- You can start or continue a conversation from any existing request (a feature known as “branching”):

  - Either from a request at point in your notes,
  - Or by navigating through history in the prompt buffer using `M-p` and `M-n` to find the desired request, opening the menu with `eden`, and pressing `c` to continue the conversation or `s` to start a new conversation from the request.

- All data is stored in JSON (or text format), facilitating integration with other software for further analysis.
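Because everything lives in plain files, ordinary Emacs Lisp is enough to inspect the request directory. As an illustration, the sketch below lists every `error.json` found under the request directory. It assumes those files sit somewhere below `eden-dir`; the exact layout is not described here, so the search is recursive. The helper `my/eden-error-files` is hypothetical and not part of Eden.

```emacs-lisp
(defun my/eden-error-files (&optional dir)
  "Return the error.json files found under DIR, searching recursively.
Hypothetical helper, not part of Eden.  DIR defaults to the value of
`eden-dir' when that variable is bound.  Return nil when the
directory does not exist."
  (let ((root (or dir (bound-and-true-p eden-dir))))
    (when (and root (file-directory-p root))
      ;; The regexp is matched against each file name without its
      ;; directory, so only files named exactly "error.json" match.
      (directory-files-recursively root "\\`error\\.json\\'"))))

;; With Eden loaded, list the error files of all stored requests.
(my/eden-error-files)
```

Each returned path can then be opened with `find-file` to read the error details.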

In the prompt buffer, you can call `eden` and press `C` to show the current configuration.

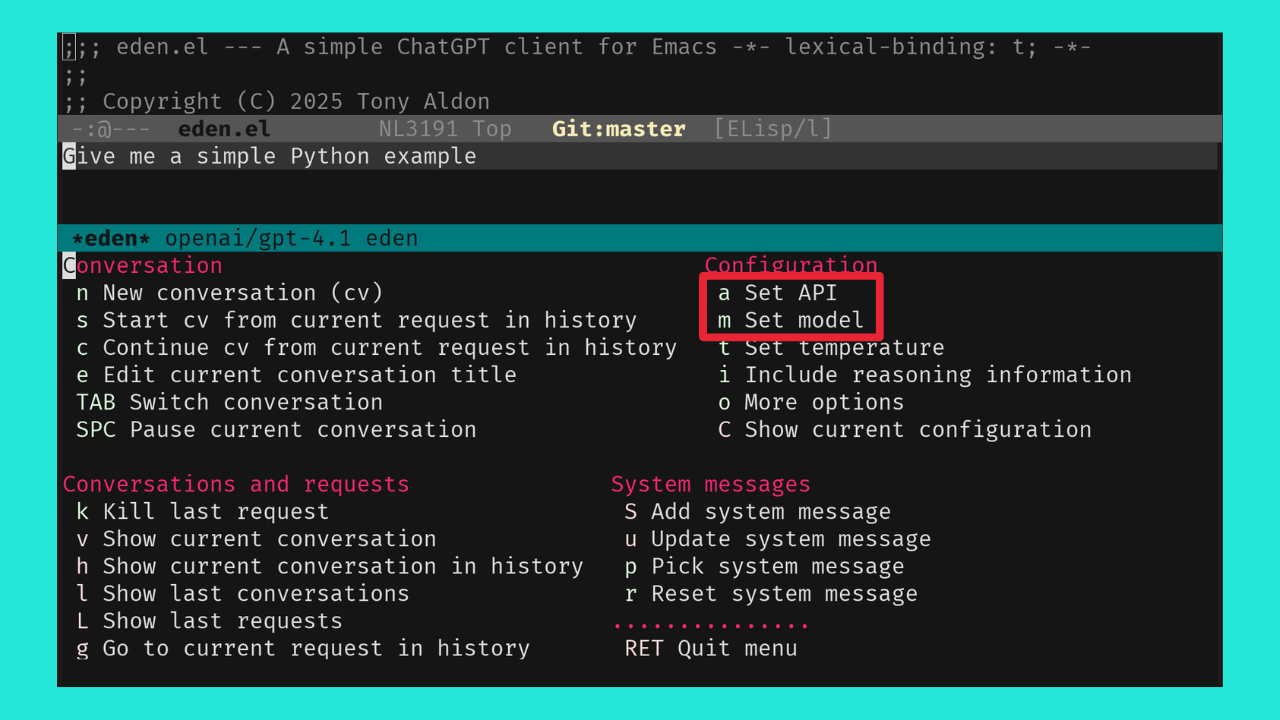

From the menu provided by `eden`, you can modify the current configuration by doing the following:

- Press `a` to set the current API (`eden-api-set`),
- Press `m` to set the model for the current API (`eden-model-set`),
- Press `t` to set the temperature (`eden-temperature-set`),
- Press `i` to include reasoning information (`eden-include-reasoning-toggle`),
- Press `o` to access another menu with more options (`eden-more-options-menu`),

or the following for modifying the system message:

- Press `S` to add a system message (`eden-system-message-add`),
- Press `u` to update the system message (`eden-system-message-update`),
- Press `p` to pick a system message (`eden-system-message-set`),
- Press `r` to reset the system message (`eden-system-message-reset`).

Each time you quit Eden’s menu, the current profile, which includes

- the API,
- the request directory,
- the model,
- whether the reasoning is included,
- the temperature,
- the current conversation, if any,
- the system message, if any, and
- the instructions appended to the system message, if any,

is pushed to a history that you can navigate from the prompt buffer with `C-M-p`, bound to `eden-profile-previous`, and `C-M-n`, bound to `eden-profile-next`.

The complete list of user variables you may want to adjust includes:

- `eden-api`
- `eden-apis`
- `eden-model`
- `eden-temperature`
- `eden-system-message`
- `eden-system-message-append`
- `eden-system-messages`
- `eden-system-message->developer-for-models`
- `eden-dir`
- `eden-anthropic-max-tokens`
- `eden-anthropic-thinking-budget-tokens`
- `eden-web-search-context-size`
- `eden-org-property-date`
- `eden-org-property-model`
- `eden-org-property-req`
- `eden-pops-up-upon-receipt`
- `eden-include-reasoning`
- `eden-prompt-buffer-name`

For more information on these variables, consult their documentation in the `*Help*` buffer using the `describe-variable` command, bound by default to `C-h v`.
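As a starting point, an init-file fragment might look like the following. It is a sketch under assumptions: the key `C-c e` and the `~/notes/` location are arbitrary choices, and `eden-dir` is assumed to accept a directory path with a trailing slash, so check its documentation with `C-h v` before relying on it.

```emacs-lisp
;; Bind the `eden' command to a key of your choice.
;; "C-c e" is an arbitrary example, not an Eden default.
(global-set-key (kbd "C-c e") #'eden)

;; Store requests outside `user-emacs-directory'.
;; Assumption: `eden-dir' takes a directory path ending with a slash;
;; "~/notes/" is a hypothetical location.
(setq eden-dir (expand-file-name "eden/" "~/notes/"))
```

The other variables in the list can be set the same way once you have checked the value each one expects.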

See the “Alternatives” section of the gptel README for a comprehensive list of Emacs clients for LLMs, not limited to OpenAI.

Does Eden stream responses? No.

I don’t like it.

Streaming the response forces me to read it immediately and linearly.

That’s not how I read. I often start from the end and go backward, picking out only the pieces I’m interested in. If I need a more profound understanding of the answer, I might then read it linearly to make sure I don’t miss anything.

And if I have to read the entire text of each response, I’ll get exhausted too quickly. My processing power can’t keep up with the production rate of LLMs. I have to choose wisely what I read and what I don’t.

You might say, “Nobody is forcing you to read it this way; you can just wait until the end.” That’s true! But in that case, why bother implementing streaming at all?