A curated list of resources on grounding natural language in video and related areas. :-)

本方向主要分为两类任务:

-

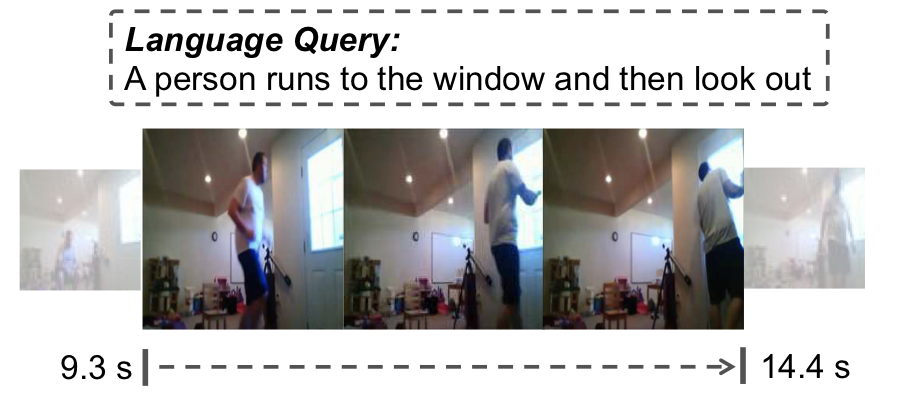

Temporal Activity Localization by Language:给定一个query(包含对activity的描述),找到对应动作(事件)的起止时间;

-

Spatio-temporal object referring by language: 给定一个query(包含对object/person的描述),在时空中找到连续的bounding box (也就是一个tube)。
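For the first task, a predicted segment is commonly compared with the annotated one by temporal IoU (intersection over union of the two time intervals). A minimal sketch, assuming segments are `(start, end)` pairs in seconds; the function name `temporal_iou` is illustrative and not taken from any listed repository:

```python
def temporal_iou(pred, gt):
    """Temporal IoU of two (start, end) segments given in seconds."""
    pred_start, pred_end = pred
    gt_start, gt_end = gt
    # Overlap is zero when the segments are disjoint.
    intersection = max(0.0, min(pred_end, gt_end) - max(pred_start, gt_start))
    union = (pred_end - pred_start) + (gt_end - gt_start) - intersection
    return intersection / union if union > 0 else 0.0


# Prediction 2s-8s against ground truth 4s-10s: overlap 4s, union 8s.
print(temporal_iou((2.0, 8.0), (4.0, 10.0)))  # 0.5
```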

Markdown format:

- [Paper Name](link) - Author 1 et al, `Conference Year`. [[code]](link)

- 2019/12/16: Add CBP (AAAI 2020)

- None.

- Grounded Language Learning from Video Described with Sentences - H. Yu et al, `ACL 2013`.
- Visual Semantic Search: Retrieving Videos via Complex Textual Queries - Dahua Lin et al, `CVPR 2014`.
- Jointly Modeling Deep Video and Compositional Text to Bridge Vision and Language in a Unified Framework - R. Xu et al, `AAAI 2015`.
- Unsupervised Alignment of Actions in Video with Text Descriptions - Y. C. Song et al, `IJCAI 2016`.

- Localizing Moments in Video with Natural Language - Lisa Anne Hendricks et al, `ICCV 2017`. [code]
- TALL: Temporal Activity Localization via Language Query - Jiyang Gao et al, `ICCV 2017`. [code]
- Spatio-temporal Person Retrieval via Natural Language Queries - M. Yamaguchi et al, `ICCV 2017`. [code]
- Attention-based Natural Language Person Retrieval - Tao Zhou et al, `CVPR 2017`.
- Where to Play: Retrieval of Video Segments using Natural-Language Queries - S. Lee et al, `arXiv 2017`.

- Find and Focus: Retrieve and Localize Video Events with Natural Language Queries - Dian Shao et al, `ECCV 2018`.
- Temporal Modular Networks for Retrieving Complex Compositional Activities in Videos - B. Liu et al, `ECCV 2018`.
- Temporally Grounding Natural Sentence in Video - J. Chen et al, `EMNLP 2018`.
- Localizing Moments in Video with Temporal Language - Lisa Anne Hendricks et al, `EMNLP 2018`.
- Object Referring in Videos with Language and Human Gaze - A. B. Vasudevan et al, `CVPR 2018`. [code]
- Weakly Supervised Dense Event Captioning in Videos - X. Duan et al, `NIPS 2018`.
- Actor and Action Video Segmentation from a Sentence - Kirill Gavrilyuk et al, `CVPR 2018`.
- Attentive Moment Retrieval in Videos - M. Liu et al, `SIGIR 2018`.
- Multilevel Language and Vision Integration for Text-to-Clip Retrieval - Huijuan Xu et al, `AAAI 2018`.

  Motivation: Introduce a multilevel model that integrates vision and language features earlier and more tightly than prior work.

  Methodology: (a) Generate query-guided proposals. (b) Use early fusion and learn an LSTM to model fine-grained similarity between query sentences and video clips. (c) Leverage captioning as an auxiliary task.

- Multilevel Language and Vision Integration for Text-to-Clip Retrieval - H. Xu et al, `AAAI 2019`. [code]
- Read, Watch, and Move: Reinforcement Learning for Temporally Grounding Natural Language Descriptions in Videos - Dongliang He et al, `AAAI 2019`.
- To Find Where You Talk: Temporal Sentence Localization in Video with Attention Based Location Regression - Y. Yuan et al, `AAAI 2019`. [code]

  Motivation: A co-attention mechanism.

  Methodology: (a) Contextual Incorporated Feature Encoding using a Bi-LSTM. (b) Multi-Modal Co-Attention Interaction: attention modulation applied three times. (c) Attention Based Coordinates Prediction: predict the coordinates from either the attended visual and language features or the obtained visual attention weights.

- Semantic Proposal for Activity Localization in Videos via Sentence Query - S. Chen et al, `AAAI 2019`.

  Motivation: Previous proposal-based methods use anchors that only consider the class-agnostic "actionness" of video snippets. This work integrates the semantic information of the sentence into the proposal generation process.

  Methodology: (a) Video Concept Detection: select visual concepts according to their frequencies. Since no spatial bounding box annotations are available, the detector is trained with MIL. (b) Semantic Activity Proposal generation, based on grouping the visual-semantic correlation scores. (c) Proposal Evaluation and Refinement.

- MAN: Moment Alignment Network for Natural Language Moment Retrieval via Iterative Graph Adjustment - Da Zhang et al, `CVPR 2019`.

  Motivation: Moment alignment with a single-stage feed-forward network (possibly inspired by SSD or SSTD).

  Methodology: (a) The Language Encoder uses an LSTM to generate the Dynamic Filters. (b) The Video Encoder generates multi-scale candidate moments. (c) The Iterative Graph Adjustment Network updates these proposals in a GCN-like manner.

- Weakly Supervised Video Moment Retrieval From Text Queries - N. C. Mithun et al, `CVPR 2019`.
- Language-Driven Temporal Activity Localization: A Semantic Matching Reinforcement Learning Model - W. Wang et al, `CVPR 2019`.
- Semantic Conditioned Dynamic Modulation for Temporal Sentence Grounding in Videos - Yitian Yuan et al, `NIPS 2019`. [code]
- WSLLN: Weakly Supervised Natural Language Localization Networks - Mingfei Gao et al, `EMNLP 2019`.
- ExCL: Extractive Clip Localization Using Natural Language Descriptions - S. Ghosh et al, `NAACL 2019`.
- Cross-Modal Interaction Networks for Query-Based Moment Retrieval in Videos - Zhu Zhang et al, `SIGIR 2019`. [code]
- Cross-Modal Video Moment Retrieval with Spatial and Language-Temporal Attention - B. Jiang et al, `ICMR 2019`. [code]
- MAC: Mining Activity Concepts for Language-based Temporal Localization - Runzhou Ge et al, `WACV 2019`. [code]
- Temporal Localization of Moments in Video Collections with Natural Language - V. Escorcia et al, `arXiv 2019`.
- Proposal-free Temporal Moment Localization of a Natural-Language Query in Video using Guided Attention - C. R. Opazo et al, `arXiv 2019`.
- Tripping through time: Efficient Localization of Activities in Videos - Meera Hahn et al, `arXiv 2019`.
- [Related] Localizing Unseen Activities in Video via Image Query - Zhu Zhang et al, `IJCAI 2019`.
- Localizing Natural Language in Videos - Jingyuan Chen et al, `AAAI 2019`.

- Temporally Grounding Language Queries in Videos by Contextual Boundary-aware Prediction - Jingwen Wang et al, `AAAI 2020`. [code]

  Motivation: Inaccurate temporal boundaries.

  Methodology: Propose a model that jointly predicts temporal anchors and boundaries at each time step. The Boundary Submodule is implemented as a simple binary classifier with cross-entropy loss. The final score is obtained via Boundary Score Fusion.

- Learning 2D Temporal Localization Networks for Moment Localization with Natural Language - Songyang Zhang et al, `AAAI 2020`. [code1][code2]
- Multi-Scale 2D Temporal Adjacent Networks for Moment Localization with Natural Language - Songyang Zhang et al, `TPAMI submission`.
- Jointly Cross- and Self-Modal Graph Attention Network for Query-Based Moment Localization - Daizong Liu et al, `ACM MM`. [code]
- Weakly-Supervised Video Moment Retrieval via Semantic Completion Network - Zhijie Lin et al, `AAAI 2020`.

  Motivation: Latent attention weights learned without extra supervision usually focus on the most discriminative but small regions (Singh and Lee 2017) instead of covering complete regions.

  Methodology: (a) Proposal Generation Module: Transformer-based score assignment and Top-K selection with a decay rate. (b) Semantic Completion Module, which takes the masked language tokens and the video sequence as input. (c) Reconstruction Loss and Rank Loss.

- Look Closer to Ground Better: Weakly-Supervised Temporal Grounding of Sentence in Video - Zhenfang Chen et al, `arXiv`.

  Motivation: Ground in a coarse-to-fine manner.

  Methodology: (a) Feature encoding with a Bi-LSTM for both video and language. (b) Coarse stage: generate proposals and compute their similarity to the sentence feature with a classification stream and a selection stream. (c) Fine stage: frame-level feature similarity computation. (d) Training with MIL (cross-entropy loss and ranking loss).

- VLANet: Video-Language Alignment Network for Weakly-Supervised Video Moment Retrieval - Minuk Ma et al, `ECCV 2020`.
- Counterfactual Contrastive Learning for Weakly-Supervised Vision-Language Grounding - Zhu Zhang et al, `NIPS 2020`.
- Local-Global Video-Text Interactions for Temporal Grounding - Jonghwan Mun et al, `CVPR 2020`.

- Span-based Localizing Network for Natural Language Video Localization - Hao Zhang et al, `ACL 2020`. [code]

  Motivation: By treating the video as a text passage and the target moment as the answer span, NLVL shares significant similarities with the span-based question answering (QA) task.

  Methodology: In my opinion, it simply predicts the start and end temporal boundaries directly.
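The span view above can be illustrated with the decoding step typically used in span-based QA: given one score per clip for "start" and one for "end", pick the pair with the highest summed score subject to start <= end. This is only a sketch under that assumption, not the paper's code; `best_span` and the example scores are made up for illustration.

```python
def best_span(start_scores, end_scores):
    """Return (start_idx, end_idx) maximizing start + end score with start <= end."""
    best_pair = None
    best_score = float("-inf")
    best_start_idx = 0  # index of the highest start score seen so far
    for end_idx, end_score in enumerate(end_scores):
        # A start at end_idx itself is allowed (single-clip span).
        if start_scores[end_idx] > start_scores[best_start_idx]:
            best_start_idx = end_idx
        score = start_scores[best_start_idx] + end_score
        if score > best_score:
            best_score = score
            best_pair = (best_start_idx, end_idx)
    return best_pair


# Hypothetical per-clip scores. Taking each argmax independently would give
# start=1, end=0, which is not a valid span; the constraint yields (1, 3).
print(best_span([0.1, 2.0, 0.3, 0.2], [1.5, 0.2, 0.4, 1.0]))  # (1, 3)
```

The single pass keeps the scan linear in the number of clips; multiplying the indices by the clip duration would turn the result into timestamps.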

- Rethinking the Bottom-Up Framework for Query-based Video Localization - Long Chen et al, `AAAI 2020`. [code]

  Motivation: We argue that the performance of the bottom-up framework is severely underestimated because of unreasonable designs in current models, in both the backbone and the head network.

  Methodology: For the backbone, use a graph to model the multi-level features. For the head network, use dense regression in the style of FCOS.

- Boundary Proposal Network for Two-Stage Natural Language Video Localization - Shaoning Xiao et al, `AAAI 2021`.

  Motivation: Two-stage methods are susceptible to heuristic rules and cannot handle variable lengths; one-stage methods lack segment-level interactions.

  Methodology: (1) Proposal generation using 2D-TAN to generate sparse segment candidates. (2) Compute the Text Matching Score.

- Context-aware Biaffine Localizing Network for Temporal Sentence Grounding - Daizong Liu et al, `CVPR 2021`.
- Embracing Uncertainty: Decoupling and De-bias for Robust Temporal Grounding - Hao Zhou et al, `CVPR 2021`.

  Motivation: Query uncertainty and label uncertainty. Propose a decoupling module in the language encoding and a de-bias mechanism in the temporal regression to generate diverse yet plausible predictions. For example, "a person is washing their hands in the sink" can be broken down into the relation phrase [person, washing, hands, sink] and the modified phrase [a, is, their, in, the].

  Methodology: Video encoding to generate relation and modified phrases; temporal regression with multiple choice learning (min-loss).
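As a rough illustration of the min-loss idea in multiple choice learning: the model emits several candidate segments, and only the candidate closest to the annotation is penalized, which leaves the others free to cover alternative plausible answers. The sketch below assumes an L1 distance on `(start, end)` pairs; the paper's actual loss may differ, and `min_loss` is a hypothetical helper name.

```python
def min_loss(hypotheses, target):
    """Return (loss, index) of the hypothesis closest to the target segment.

    Uses an L1 distance on (start, end); this choice is an assumption.
    """
    losses = [abs(start - target[0]) + abs(end - target[1])
              for start, end in hypotheses]
    winner = min(range(len(losses)), key=losses.__getitem__)
    return losses[winner], winner


# Three hypothetical predictions for a ground truth of 3s-10s.
# Per-hypothesis losses are 9.0, 2.0 and 4.0, so the second one is selected.
print(min_loss([(0.0, 4.0), (2.0, 9.0), (5.0, 12.0)], (3.0, 10.0)))  # (2.0, 1)
```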

- Uncovering Hidden Challenges in Query-Based Video Moment Retrieval - Mayu Otani et al, `BMVC 2020`.

  Motivation: Investigate the bias in current datasets. (1) The video input matters little or not at all. (2) The distribution of action verbs is biased. (3) Re-collect annotations for the Charades-STA and ActivityNet Captions test/validation sets, with 5 answers for each sample.

- Interaction-Integrated Network for Natural Language Moment Localization - Ke Ning et al, `TIP`.
- Cross-Sentence Temporal and Semantic Relations in Video Activity Localisation - Jiabo Huang et al, `ICCV`.

- Overall, shared by 2D-TAN

- ActivityNet Captions

- TACoS, shared by ChenJoya_2dtan

| Model | R@1 IoU@0.1 | R@1 IoU@0.3 | R@1 IoU@0.5 | R@1 IoU@0.7 | R@5 IoU@0.1 | R@5 IoU@0.3 | R@5 IoU@0.5 | R@5 IoU@0.7 | Method |
|---|---|---|---|---|---|---|---|---|---|
| MCN | 42.80 | 21.37 | 9.58 | - | - | - | - | - | PB |
| CTRL | 49.09 | 28.70 | 14.0 | - | - | - | - | - | PB |
| ACRN | 50.37 | 31.29 | 16.17 | - | - | - | - | - | PB |
| QSPN | - | 45.3 | 27.7 | 13.6 | - | 75.7 | 59.2 | 38.3 | PB |
| TGN | 70.06 | 45.51 | 28.47 | - | 79.10 | 57.32 | 44.20 | - | PB |
| SCDM | - | 54.80 | 36.75 | 19.86 | - | 77.29 | 64.99 | 41.53 | PB |
| CBP | - | 54.30 | 35.76 | 17.80 | - | 77.63 | 65.89 | 46.20 | PB |
| TripNet | - | 48.42 | 32.19 | 13.93 | - | - | - | - | RL |
| ABLR | 73.30 | 55.67 | 36.79 | - | - | - | - | - | RL |
| ExCL | - | 63.30 | 43.6 | 24.1 | - | - | - | - | PF |
| PFGA | 75.25 | 51.28 | 33.04 | 19.26 | - | - | - | - | PF |
| WSDEC-X (Weakly) | 62.7 | 42.0 | 23.3 | - | - | - | - | - | |
| WSLLN (Weakly) | 75.4 | 42.8 | 22.7 | - | - | - | - | - | |

| Model | R@1 IoU@0.1 | R@1 IoU@0.3 | R@1 IoU@0.5 | R@1 IoU@0.7 | R@5 IoU@0.1 | R@5 IoU@0.3 | R@5 IoU@0.5 | R@5 IoU@0.7 | Method |
|---|---|---|---|---|---|---|---|---|---|
| CTRL | - | - | 23.63 | 8.89 | - | - | 58.92 | 29.52 | PB |
| ABLR | - | - | 24.36 | 9.01 | - | - | - | - | PB |
| SMRL | - | - | 24.36 | 11.17 | - | - | 61.25 | 32.08 | PB |
| ACL-K | - | - | 30.48 | 12.20 | - | - | 64.84 | 35.13 | PB |
| SAP | - | - | 27.42 | 13.36 | - | - | 66.37 | 38.15 | PB |
| QSPN | - | 54.7 | 35.6 | 15.8 | - | 95.8 | 79.4 | 45.4 | PB |
| MAN | - | - | 46.53 | 22.72 | - | - | 86.23 | 53.72 | PB |
| SCDM | - | - | 54.44 | 33.43 | - | - | 74.43 | 58.08 | PB |
| CBP | - | - | 36.80 | 18.87 | - | - | 70.94 | 50.19 | PB |
| TripNet | - | 51.33 | 36.61 | 14.50 | - | - | - | - | RL |
| ExCL | - | 65.1 | 44.1 | 23.3 | - | - | - | - | RL |
| PFGA | - | 67.53 | 52.02 | 33.74 | - | - | - | - | PF |

| Model | R@1 IoU@0.1 | R@1 IoU@0.3 | R@1 IoU@0.5 | R@1 IoU@0.7 | R@5 IoU@0.1 | R@5 IoU@0.3 | R@5 IoU@0.5 | R@5 IoU@0.7 |
|---|---|---|---|---|---|---|---|---|
| TMN | 22.92 | - | - | - | 76.08 | - | - | - |
| MCN | 28.10 | - | - | - | 78.21 | - | - | - |
| TGN | 28.23 | - | - | - | 79.26 | - | - | - |
| MAN | 27.02 | - | - | - | 81.70 | - | - | - |
| WSLLN (Weakly) | 19.4 | - | - | - | 54.4 | - | - | - |

| Model | R@1 IoU@0.1 | R@1 IoU@0.3 | R@1 IoU@0.5 | R@1 IoU@0.7 | R@5 IoU@0.1 | R@5 IoU@0.3 | R@5 IoU@0.5 | R@5 IoU@0.7 | Method |
|---|---|---|---|---|---|---|---|---|---|
| MCN | 2.62 | 1.64 | 1.25 | - | 2.88 | 1.82 | 1.01 | - | PB |
| CTRL | 24.32 | 18.32 | 13.30 | - | 48.73 | 36.69 | 25.42 | - | PB |
| TGN | 41.87 | 21.77 | 18.90 | - | 53.40 | 39.06 | 31.02 | - | PB |
| ACRN | 24.22 | 19.52 | 14.62 | - | 47.42 | 34.97 | 24.88 | - | PB |
| ACL-K | 31.64 | 24.17 | 20.01 | - | 57.85 | 42.15 | 30.66 | - | PB |
| SCDM | - | 26.11 | 21.17 | - | - | 40.16 | 32.18 | - | PB |
| CBP | - | 27.31 | 24.79 | 19.10 | - | 43.64 | 37.40 | 25.59 | PB |
| TripNet | - | 23.95 | 19.17 | 9.52 | - | - | - | - | RL |
| SMRL | 26.51 | 20.25 | 15.95 | - | 50.01 | 38.47 | 27.84 | - | RL |
| ABLR | 34.7 | 19.5 | 9.4 | - | - | - | - | - | RL |
| ExCL | - | 45.5 | 28.0 | 14.6 | - | - | - | - | PF |
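The R@1 and R@5 columns above are recall-at-n scores. In this literature they are conventionally the percentage of queries for which at least one of the top-n predicted segments overlaps the ground truth with temporal IoU at or above a threshold. A minimal sketch of that computation, assuming one ground-truth segment per query and predictions already sorted by confidence; the function names and toy data are illustrative only:

```python
def temporal_iou(pred, gt):
    """Temporal IoU of two (start, end) segments."""
    intersection = max(0.0, min(pred[1], gt[1]) - max(pred[0], gt[0]))
    union = (pred[1] - pred[0]) + (gt[1] - gt[0]) - intersection
    return intersection / union if union > 0 else 0.0


def recall_at_n(ranked_predictions, ground_truths, n, iou_threshold):
    """Percentage of queries with a top-n prediction whose IoU >= threshold."""
    hits = 0
    for predictions, gt in zip(ranked_predictions, ground_truths):
        if any(temporal_iou(p, gt) >= iou_threshold for p in predictions[:n]):
            hits += 1
    return 100.0 * hits / len(ground_truths)


# Two toy queries, each with two ranked predictions.
predictions = [
    [(0.0, 5.0), (4.0, 10.0)],   # IoU with (4, 10): 0.1, then 1.0
    [(0.0, 3.0), (10.0, 20.0)],  # IoU with (0, 4): 0.75, then 0.0
]
ground_truths = [(4.0, 10.0), (0.0, 4.0)]

print(recall_at_n(predictions, ground_truths, n=1, iou_threshold=0.5))  # 50.0
print(recall_at_n(predictions, ground_truths, n=2, iou_threshold=0.5))  # 100.0
```

Individual papers may differ in details such as how many candidates are kept before ranking, so numbers are only comparable when the protocol matches.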

- jiyanggao/TALL

- runzhouge/MAC

- BonnieHuangxin/SLTA

- yytzsy/ABLR_code

- yytzsy/SCDM

- JaywongWang/TGN

- JaywongWang/CBP

- None.

To the extent possible under law, muketong has waived all copyright and related or neighboring rights to this work.