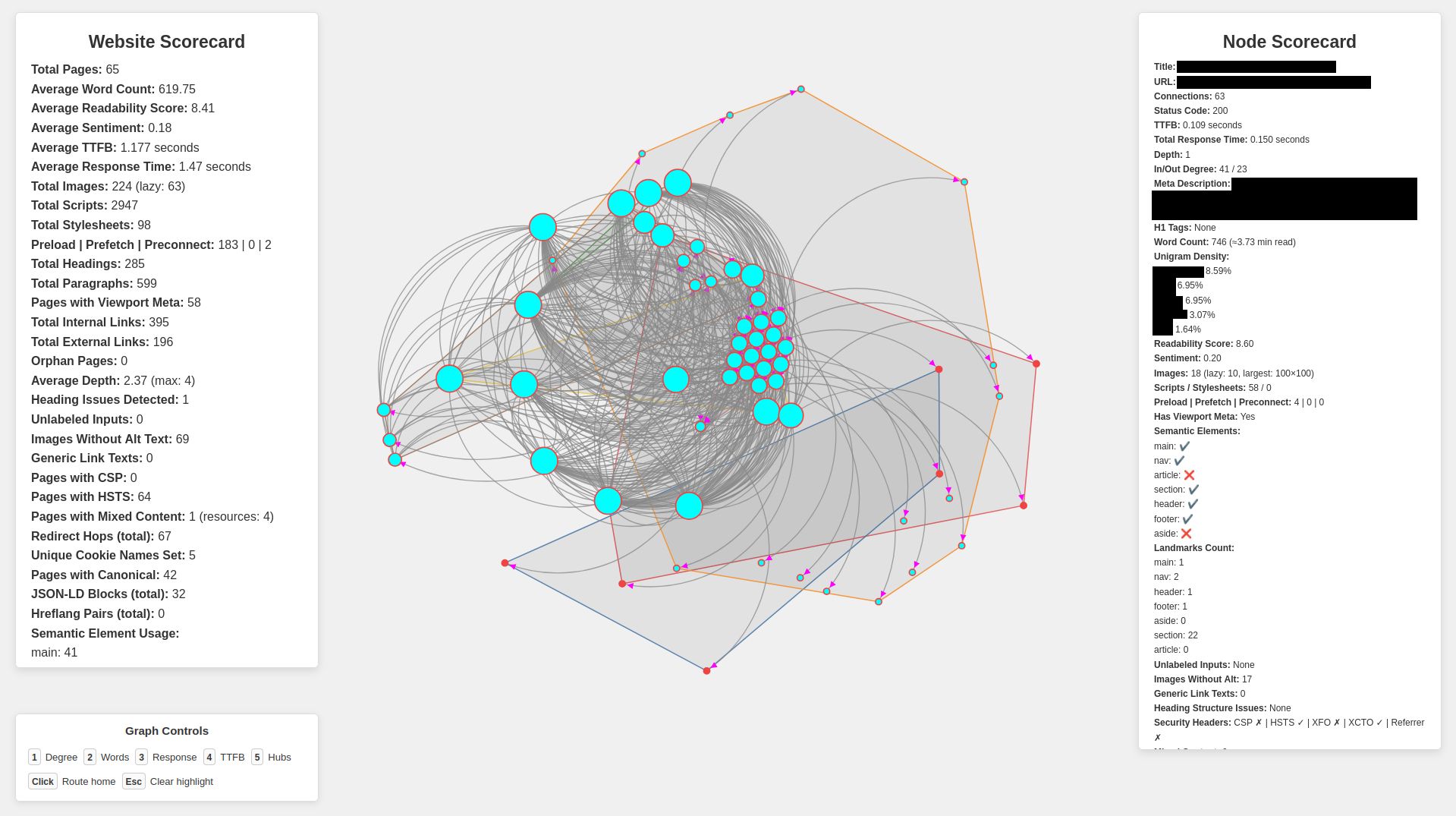

Explore a website's internal links, then visualize those connections as a network graph with scorecards and analysis using Claude AI.

- Git

- Python (When installing on Windows, make sure you check the "Add python 3.xx to PATH" box.)

1. Install the above programs.
2. Open a shell window (on Windows open PowerShell, on macOS open Terminal, and on Linux open your distro's terminal emulator).
3. Clone this repository using `git` by running the following command: `git clone git@github.com:devbret/website-internal-links.git`.
4. Navigate to the repo's directory by running: `cd website-internal-links`.
5. Create a virtual environment with this command: `python3 -m venv venv`. Then activate your virtual environment using: `source venv/bin/activate`.
6. Install the needed dependencies for running the script by running: `pip install -r requirements.txt`.
7. Set the environment variable for the Anthropic API key by renaming the `.env.template` file to `.env` and placing your value immediately after the `=` character.
8. Edit the app.py file `WEBSITE_TO_CRAWL` variable (on line 21); this is the website you would like to visualize.
   - Also edit the app.py file `MAX_PAGES_TO_CRAWL` variable (on line 24); this specifies how many pages you would like to crawl.
9. Run the script with the command `python3 app.py`.
10. To view the website's connections using the index.html file you will need to run a local web server. To do this, run `python3 -m http.server` in a new terminal.
11. Once the network map has been launched, hover over any given node for more information about the particular web page, as well as the option to submit data for analysis via Claude AI. By clicking on a node, you will be sent to the related URL address in a new tab.
12. To exit the virtual environment (venv), type `deactivate` in the terminal.
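To give a feel for how the `WEBSITE_TO_CRAWL` and `MAX_PAGES_TO_CRAWL` settings might bound a crawl, here is a minimal sketch. It is not the code in app.py: `crawl`, `fetch_links` and the in-memory `SITE` dictionary are hypothetical stand-ins, and a real crawler would download and parse each page over HTTP.

```python
from collections import deque
from urllib.parse import urljoin, urlparse

# Hypothetical in-memory site standing in for real HTTP requests.
SITE = {
    "https://example.com/": ["/about", "/blog", "https://other.org/"],
    "https://example.com/about": ["/"],
    "https://example.com/blog": ["/blog/post-1", "/about"],
    "https://example.com/blog/post-1": ["/"],
}

def fetch_links(url):
    """Return the raw hrefs found on a page (stubbed for illustration)."""
    return SITE.get(url, [])

def crawl(start_url, max_pages):
    """Visit at most max_pages pages and return {page: [internal links]}."""
    host = urlparse(start_url).netloc
    graph = {}
    queue = deque([start_url])
    while queue and len(graph) < max_pages:
        url = queue.popleft()
        if url in graph:
            continue  # already visited
        internal = []
        for href in fetch_links(url):
            target = urljoin(url, href)  # resolve relative links
            if urlparse(target).netloc == host:  # keep same-host links only
                internal.append(target)
                queue.append(target)
        graph[url] = internal
    return graph

WEBSITE_TO_CRAWL = "https://example.com/"
MAX_PAGES_TO_CRAWL = 3
graph = crawl(WEBSITE_TO_CRAWL, MAX_PAGES_TO_CRAWL)
print(len(graph))  # 3
```

With the cap set to 3, the fourth page (`/blog/post-1`) is discovered as a link but never visited, which is the trade-off a small `MAX_PAGES_TO_CRAWL` value makes.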

We use textstat for readability and TextBlob for sentiment. Beyond headings, alt text, labels and semantic tags, the crawler also records:

- Status/Timing: status code, TTFB, total response time
- Structure: word counts, H1s, paragraphs
- Links: internal/external, depth, orphan pages
- SEO: canonical, JSON-LD, OpenGraph, Twitter, hreflang
- Security/Delivery: CSP/HSTS headers, redirects, mixed content, cookies
- Language: `lang` vs detected
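As an illustration of how a few of these fields could be collected, the sketch below uses only Python's standard library. The `PageStats` class and its attribute names are assumptions made for this example, not the crawler's actual implementation, and it covers only the structure, link and `lang` metrics.

```python
from html.parser import HTMLParser
from urllib.parse import urljoin, urlparse

class PageStats(HTMLParser):
    """Hypothetical collector for a handful of per-page metrics."""

    def __init__(self, page_url):
        super().__init__()
        self.page_url = page_url
        self.host = urlparse(page_url).netloc
        self.lang = None
        self.h1_count = 0
        self.paragraph_count = 0
        self.word_count = 0
        self.internal_links = []
        self.external_links = []
        self._skip_depth = 0  # > 0 while inside <script> or <style>

    def handle_starttag(self, tag, attrs):
        attrs = dict(attrs)
        if tag == "html":
            self.lang = attrs.get("lang")  # declared language, if any
        elif tag == "h1":
            self.h1_count += 1
        elif tag == "p":
            self.paragraph_count += 1
        elif tag in ("script", "style"):
            self._skip_depth += 1
        elif tag == "a" and attrs.get("href"):
            target = urljoin(self.page_url, attrs["href"])
            if urlparse(target).netloc == self.host:
                self.internal_links.append(target)
            else:
                self.external_links.append(target)

    def handle_endtag(self, tag):
        if tag in ("script", "style") and self._skip_depth:
            self._skip_depth -= 1

    def handle_data(self, data):
        # Count visible words only; script and style text is ignored.
        if not self._skip_depth:
            self.word_count += len(data.split())

html = """<html lang="en"><head><style>p { color: red; }</style></head>
<body><h1>Hello world</h1><p>One two three.</p>
<a href="/about">About</a> <a href="https://other.org/">Elsewhere</a></body></html>"""

stats = PageStats("https://example.com/")
stats.feed(html)
print(stats.lang, stats.h1_count, stats.paragraph_count, stats.word_count)
print(stats.internal_links, stats.external_links)
```

Metrics such as TTFB, response headers or redirects come from the HTTP response rather than the markup, so they would need to be gathered at request time instead.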

Upon clicking any node, the shortest route back to the homepage is highlighted, giving a clear visual of how deeply the page sits within the site structure. This feature uses a breadth-first search to trace paths efficiently, even in large crawls. The result is an intuitive way to explore navigation depth and connectivity directly within the visualization.
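The breadth-first search idea can be sketched in a few lines of Python. This is an illustrative version under stated assumptions, not the visualization's own code: the `links` adjacency dictionary and the `shortest_path_to_home` function are hypothetical, and the search is assumed to follow links outward from the homepage.

```python
from collections import deque

def shortest_path_to_home(links, home, clicked):
    """Return the shortest link path, listed from the clicked page back to
    the homepage, or None if the page cannot be reached from the homepage."""
    parent = {home: None}  # records how each page was first reached
    queue = deque([home])
    while queue:
        page = queue.popleft()
        if page == clicked:
            break
        for nxt in links.get(page, []):
            if nxt not in parent:  # first visit is the shortest route in BFS
                parent[nxt] = page
                queue.append(nxt)
    if clicked not in parent:
        return None
    path = []
    node = clicked
    while node is not None:  # walk parent pointers back to the homepage
        path.append(node)
        node = parent[node]
    return path

# Hypothetical crawl result: page -> pages it links to.
links = {
    "/": ["/about", "/blog"],
    "/about": ["/"],
    "/blog": ["/blog/post-1", "/about"],
    "/blog/post-1": ["/"],
    "/orphan": ["/"],
}

path = shortest_path_to_home(links, "/", "/blog/post-1")
print(path)           # ['/blog/post-1', '/blog', '/']
print(len(path) - 1)  # 2 (depth of the page)
```

Because each page is queued at most once, the search stays linear in the number of pages and links. A page that no crawled page links to, such as `/orphan` here, yields `None`.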

Generating visualizations with this app takes an unexpectedly large amount of processing power. It is advisable to experiment with mapping fewer than one hundred pages per launch.

If working with GitHub Codespaces, you may have to:

- Run `python -m nltk.downloader punkt_tab`.
- Then reattempt steps 6 - 9.

If all else fails, please contact the maintainer here on GitHub or via LinkedIn.

Cheers!