-

-

-### Connecting to your cluster

-

-Depending on your external IP address, you may need to use different strategies to connect to your cluster.

-

-If the external IP for both services is `127.0.0.1`, connect to Weaviate with:

-

-#### Another way to confirm access to the gRPC service
-
-If you have netcat installed, you can also try:
-
-```bash
-nc -zv 127.0.0.1 50051
-```
-
-If the port is reachable, the output will look like this:
-
-```text
-Connection to 127.0.0.1 port 50051 [tcp/*] succeeded!
-```
-
-Not all systems have `nc` installed by default. That's fine: the `kubectl get svc` command output is sufficient to confirm access to the gRPC service.
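If you have neither `nc` nor the `kubectl` output at hand, Node.js can run a similar TCP check. This is a sketch only and is not part of the Weaviate client. `isPortReachable` is a hypothetical helper name. A successful TCP connection only shows that something is listening on the port; it does not show a healthy gRPC endpoint.

```typescript
import * as net from "node:net";

// Hypothetical helper: resolves to true if a TCP connection to host:port
// succeeds within timeoutMs, and to false on a connection error or timeout.
// Like `nc -zv`, it only checks that the port accepts connections; it does
// not perform a gRPC handshake.
function isPortReachable(host: string, port: number, timeoutMs = 3000): Promise<boolean> {
  return new Promise((resolve) => {
    const socket = new net.Socket();
    const finish = (reachable: boolean) => {
      socket.destroy(); // close the probe connection in every case
      resolve(reachable); // extra calls are no-ops once the promise settles
    };
    socket.setTimeout(timeoutMs);
    socket.once("connect", () => finish(true));
    socket.once("timeout", () => finish(false));
    socket.once("error", () => finish(false));
    socket.connect(port, host);
  });
}

// Example: probe the gRPC port used in this guide.
isPortReachable("127.0.0.1", 50051).then((reachable) => {
  console.log(`127.0.0.1:50051 reachable: ${reachable}`);
});
```

Run it with a TypeScript runner of your choice. It prints `true` when the port accepts connections and `false` otherwise.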

-## Install Node.js

-

-### Is Node.js installed?

-

-Open a terminal window (e.g. bash, zsh, Windows PowerShell, Windows Terminal), and run:

-

-```shell

-node --version # or node -v

-```

-

-If you have Node.js installed, you should see a response like `v22.3.0`. The minimum version of Node.js supported by the Weaviate TypeScript/JavaScript client library is `v18`.
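A script can check this requirement itself and fail fast on an unsupported runtime. Below is a minimal sketch. `meetsMinimumNodeVersion` is a hypothetical helper, and the `18` threshold comes from the requirement above.

```typescript
// Hypothetical helper: true if a Node.js version string such as "v22.3.0"
// has a major version of at least `minMajor`.
function meetsMinimumNodeVersion(version: string, minMajor = 18): boolean {
  const match = /^v?(\d+)\./.exec(version.trim());
  if (!match) {
    throw new Error(`Unrecognized Node.js version string: ${version}`);
  }
  return Number(match[1]) >= minMajor;
}

// Check the runtime executing this script (process.version looks like "v22.3.0").
console.log(`Node.js ${process.version} supported: ${meetsMinimumNodeVersion(process.version)}`);
```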

-

-

-### Install Node.js

-

-To install, follow the instructions for your system on [nodejs.org](https://nodejs.org/en/download/package-manager).

-

-Once you have Node.js installed, check the version again to confirm that you have a supported version (`v18` or later) installed.

-

-:::tip Advanced option: `nvm`

-Another good way to install Node.js is to use `nvm`, which lets you manage multiple versions of Node.js on your system. You can find instructions on how to install `nvm` [here](https://github.com/nvm-sh/nvm?tab=readme-ov-file#installing-and-updating).

-:::

-

-

-### (Optional) Set up Typescript

-

-To install TypeScript, follow the instructions for your system on [typescriptlang.org](https://www.typescriptlang.org/download/). Once it is installed, you can find instructions on how to configure TypeScript to work with the Weaviate client [here](../../weaviate/client-libraries/typescript/index.mdx).

-

-Now TypeScript is ready to use with the Weaviate client.

-

-## Install the Weaviate client

-

-Now, you can install the [Weaviate client library](../../weaviate/client-libraries/index.mdx), which makes it much easier to interact with Weaviate using TypeScript.

-

-In a new folder for your project, install the Weaviate client with:

-

-```shell

-npm install weaviate-client

-```

-

-### Confirm the installation

-

-To confirm that the Weaviate client is installed in your project, run the following in your project folder:

-

-```shell

-npm ls weaviate-client

-```

-

-You should see the package listed with its installed version, like:

-

-```text

-└── weaviate-client@3.1.4

-```

-

-Congratulations, you are now set up to use Weaviate with the Weaviate TypeScript/JavaScript client library!

-

-## Questions and feedback

-

-import DocsFeedback from '/_includes/docs-feedback.mdx';

-

-

-


-

- -

-This section aims to guide you through how to do that as you build applications with the Weaviate TypeScript v3 client: [weaviate-client](https://www.npmjs.com/package/weaviate-client).

-

-

-

-### Using the weaviate-client in a client-server application

-

-The v3 client uses gRPC to connect to your Weaviate instance. It supports server-side development with Node.js, but it does not support browser-based web client development.

-

-Install the client by following [these instructions](../../../../weaviate/client-libraries/typescript/index.mdx#installation). A major benefit of the v3 client is its use of the gRPC protocol, which is faster and more reliable for handling interactions with the database at scale.

-

-Beyond the runtime requirements of the [weaviate-client](../../../../weaviate/client-libraries/typescript/index.mdx), a client-server architecture is also more secure than interacting with Weaviate directly from the browser, because credentials such as API keys stay on the server.

-

-A client-server approach also lets you optimize your use of Weaviate by implementing load balancing, user and session management, middleware, and other optimizations.

-

-In the next sections, we'll look at how to build client-server applications:

-- [Using backend web frameworks](./20_building-client-server.mdx)

-- [Using fullstack web frameworks](./30_fullstack.mdx)

-

diff --git a/docs/academy/js/standalone/client-server/20_building-client-server.mdx b/docs/academy/js/standalone/client-server/20_building-client-server.mdx

deleted file mode 100644

index 3e6917ed1..000000000

--- a/docs/academy/js/standalone/client-server/20_building-client-server.mdx

+++ /dev/null

@@ -1,133 +0,0 @@

----

-title: Using Backend Web frameworks

----

-

-import Tabs from '@theme/Tabs';

-import TabItem from '@theme/TabItem';

-import FilteredTextBlock from '@site/src/components/Documentation/FilteredTextBlock';

-import TSCode from '!!raw-loader!./_snippets/20_backend.js';

-import ClientCode from '!!raw-loader!./_snippets/index.html';

-import WeaviateTypescriptImgUrl from '/docs/academy/js/standalone/client-server/_img/backend.jpg';

-

-

-

-This approach involves two separate tools: one to build your server application (ideally a backend framework), and another to build your client application. For this example, we will use [Express.js](https://expressjs.com/en/starter/hello-world.html) to build a backend server, and [Thunder Client](https://www.thunderclient.com/) to act as a client that makes API calls to our backend server.

-

-

-


-

- -

-

-## Building a server

-

-The server will have a single route that accepts a `searchTerm` as a query parameter.
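The sketch below pulls that route logic out of Express and Weaviate so it can run on its own. `handleSearch` and `SearchFn` are hypothetical names. The injected `search` function stands in for a call such as `wikipedia.query.nearText(term, { limit })`. The sketch returns `400` for a missing or blank `searchTerm` and `500` when the search fails, so the request never goes unanswered.

```typescript
import { URL } from "node:url";

// Hypothetical signature for the search backend; in the real server this
// would wrap something like `wikipedia.query.nearText(term, { limit })`.
type SearchFn<T> = (term: string, limit: number) => Promise<T[]>;

interface SearchResponse<T> {
  status: number; // HTTP status code to send
  body: { results?: T[]; error?: string };
}

// Validate the `searchTerm` query parameter, run the search, and map the
// outcome to an HTTP status and JSON body.
async function handleSearch<T>(
  requestUrl: string,
  search: SearchFn<T>,
  limit = 3,
): Promise<SearchResponse<T>> {
  // The base URL only satisfies the URL parser for relative request paths.
  const params = new URL(requestUrl, "http://localhost").searchParams;
  const searchTerm = params.get("searchTerm")?.trim();
  if (!searchTerm) {
    return { status: 400, body: { error: "Missing required query parameter: searchTerm" } };
  }
  try {
    return { status: 200, body: { results: await search(searchTerm, limit) } };
  } catch (err) {
    const message = err instanceof Error ? err.message : String(err);
    return { status: 500, body: { error: message } };
  }
}
```

In an Express route, you could call it with `req.originalUrl` and send the result with `res.status(status).json(body)`. Keeping the handler framework-agnostic makes it easy to test without a running Weaviate instance.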

-

-### 1. Initialize a Node.js application

-

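-We will use Express to build our server. In a new directory, run the following command to initialize a new Node.js project. Since the code in this guide uses ES module `import` syntax and top-level `await`, also set `"type": "module"` in the generated `package.json` afterwards.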

-

-```bash

-npm init

-```

-### 2. Install project dependencies

-

-With our project initialized, install `dotenv` to manage environment variables, `express` to build our server and the `weaviate-client` to manage communication with our Weaviate database.

-

-```bash

-npm install express dotenv weaviate-client

-```

-

-

-### 3. Set up your Weaviate database

-

-We'll start by creating a free sandbox account on [Weaviate Cloud](https://console.weaviate.cloud/). Follow [this guide](/cloud/manage-clusters/connect) if you have trouble setting up a sandbox project.

-

-

-You will need your Weaviate cluster URL and API key. You will also need a Cohere [API key](https://dashboard.cohere.com/api-keys), because we use Cohere as our [embedding model](../using-ml-models/10_embedding.mdx); create one if you don't already have one. When done, add all three values to your `.env` file.
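The connection snippet in this guide reads these values from `process.env` and falls back to an empty string for the Cohere key. A missing value can therefore stay hidden until the first query fails. Below is a minimal sketch that fails fast instead. It assumes the variable names `WEAVIATE_URL`, `WEAVIATE_API_KEY`, and `COHERE_API_KEY` from this guide's `.env` file. `readWeaviateConfig` is a hypothetical helper, not part of `weaviate-client` or `dotenv`.

```typescript
// The three variables this guide expects in `.env`.
const REQUIRED_VARS = ["WEAVIATE_URL", "WEAVIATE_API_KEY", "COHERE_API_KEY"] as const;

type RequiredVar = (typeof REQUIRED_VARS)[number];
type WeaviateConfig = Record<RequiredVar, string>;

// Hypothetical helper: returns the trimmed values, or throws an error that
// names every variable that is missing or blank.
function readWeaviateConfig(env: Record<string, string | undefined>): WeaviateConfig {
  const missing = REQUIRED_VARS.filter((name) => !env[name]?.trim());
  if (missing.length > 0) {
    throw new Error(`Missing environment variables: ${missing.join(", ")}`);
  }
  return {
    WEAVIATE_URL: env.WEAVIATE_URL!.trim(),
    WEAVIATE_API_KEY: env.WEAVIATE_API_KEY!.trim(),
    COHERE_API_KEY: env.COHERE_API_KEY!.trim(),
  };
}
```

In the server, you would call `readWeaviateConfig(process.env)` once after `import 'dotenv/config'` and pass the returned values to the connection call.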

-

-

-

-

-


-## Building with Next.js

-

-### 1. Create a Next.js application

-

-To create a new application with Next.js, run the following command in your terminal.

-

-```bash

-npx create-next-app@latest
-```
-
-### 6. Run your fullstack app
-
-In your terminal, run the following command to start your application.
-
-```bash
-npm run dev
-```
-
-Your application should be running at `http://localhost:3000`.
-
-## Other frameworks
-
-Although this guide only covers Next.js, you can build with Weaviate using many other fullstack frameworks, including [Nuxt](https://nuxt.com/), [Solid](https://www.solidjs.com/), and [Angular](https://angular.dev/).
-
-We also have a list of [starter](https://github.com/topics/weaviate-starter) applications you can experiment with.
diff --git a/docs/academy/js/standalone/client-server/_img/architecture.jpg b/docs/academy/js/standalone/client-server/_img/architecture.jpg
deleted file mode 100644
index 9a8ddb7cf..000000000
Binary files a/docs/academy/js/standalone/client-server/_img/architecture.jpg and /dev/null differ
diff --git a/docs/academy/js/standalone/client-server/_img/backend.jpg b/docs/academy/js/standalone/client-server/_img/backend.jpg
deleted file mode 100644
index c5fe962ce..000000000
Binary files a/docs/academy/js/standalone/client-server/_img/backend.jpg and /dev/null differ
diff --git a/docs/academy/js/standalone/client-server/_img/fullstack.jpg b/docs/academy/js/standalone/client-server/_img/fullstack.jpg
deleted file mode 100644
index 417f0d518..000000000
Binary files a/docs/academy/js/standalone/client-server/_img/fullstack.jpg and /dev/null differ
diff --git a/docs/academy/js/standalone/client-server/_snippets/20_backend.js b/docs/academy/js/standalone/client-server/_snippets/20_backend.js
deleted file mode 100644
index fdac27ebb..000000000
--- a/docs/academy/js/standalone/client-server/_snippets/20_backend.js
+++ /dev/null
@@ -1,62 +0,0 @@
-// START weaviate.js
-import weaviate from 'weaviate-client'
-import 'dotenv/config';
-
-export const connectToDB = async () => {
-  try {
-    const client = await weaviate.connectToWeaviateCloud(process.env.WEAVIATE_URL,{
-      authCredentials: new weaviate.ApiKey(process.env.WEAVIATE_API_KEY),
-      headers: {
-        'X-Cohere-Api-Key': process.env.COHERE_API_KEY || '',
-      }
-    }
-    )
-    console.log(`We are connected! ${await client.isReady()}`);
-    return client
-  } catch (error) {
-    console.error(`Error: ${error.message}`);
-    process.exit(1);
-  }
-};
-
-// END weaviate.js
-const dotEnv = `
-// START .env
-COHERE_API_KEY=
-WEAVIATE_URL=
-WEAVIATE_API_KEY=
-// END .env
-`
-
-// START app.js
-import express from 'express';
-import { connectToDB } from './config/weaviate.js';
-
-const app = express();
-const port = 3005
-
-const client = await connectToDB();
-
-app.get('/', async function(req, res) {
-  const searchTerm = req.query.searchTerm;
-
-  const wikipedia = client.collections.use("Wikipedia")
-
-  try {
-    const response = await wikipedia.query.nearText(searchTerm, {
-      limit: 3
-    })
-
-    res.send(response.objects)
-  } catch (error) {
-    console.error(`Error: ${error.message}`);
-    res.status(500).send({ error: error.message });
-  }
-})
-
-app.listen(port, () => {
-  console.log(`App listening on port ${port}`)
-})
-
-
-// END app.js
diff --git a/docs/academy/js/standalone/client-server/_snippets/30_fullstack.js b/docs/academy/js/standalone/client-server/_snippets/30_fullstack.js
deleted file mode 100644
index 2458d472a..000000000
--- a/docs/academy/js/standalone/client-server/_snippets/30_fullstack.js
+++ /dev/null
@@ -1,67 +0,0 @@
-// START weaviate.js
-
-import weaviate from 'weaviate-client'
-import 'dotenv/config';
-
-export const connectToDB = async () => {
-  try {
-    const client = await weaviate.connectToWeaviateCloud(process.env.WEAVIATE_URL,{
-      authCredentials: new weaviate.ApiKey(process.env.WEAVIATE_API_KEY),
-      headers: {
-        'X-Cohere-Api-Key': process.env.COHERE_API_KEY || '',
-      }
-    }
-    )
-    console.log(`We are connected! ${await client.isReady()}`);
-    return client
-  } catch (error) {
-    console.error(`Error: ${error.message}`);
-    process.exit(1);
-  }
-};
-
-// END weaviate.js
-
-// .env
-`
-COHERE_API_KEY=
-WEAVIATE_URL=
-WEAVIATE_API_KEY=
-`
-// END .env
-
-
-// START app.js
-import express from 'express';
-import { connectToDB } from './config/weaviate.js';
-
-const app = express();
-const port = 3005
-
-const client = await connectToDB();
-
-
-
-app.get('/', async function(req, res) {
-  const searchTerm = req.query.searchTerm;
-
-  const wikipedia = client.collections.use("Wikipedia")
-
-  try {
-    const response = await wikipedia.query.nearText(searchTerm, {
-      limit: 5
-    })
-
-    res.send(response.objects)
-  } catch (error) {
-    console.error(`Error: ${error.message}`);
-    res.status(500).send({ error: error.message });
-  }
-})
-
-app.listen(port, () => {
-  console.log(`App listening on port ${port}`)
-})
-
-
-// END app.js
diff --git a/docs/academy/js/standalone/client-server/_snippets/index.html b/docs/academy/js/standalone/client-server/_snippets/index.html
deleted file mode 100644
index 0819a2dfe..000000000
--- a/docs/academy/js/standalone/client-server/_snippets/index.html
+++ /dev/null
@@ -1,83 +0,0 @@
-
-
-
-

-

-

-

-

-

-

\ No newline at end of file

diff --git a/docs/academy/js/standalone/client-server/index.md b/docs/academy/js/standalone/client-server/index.md

deleted file mode 100644

index 55bc75cc1..000000000

--- a/docs/academy/js/standalone/client-server/index.md

+++ /dev/null

@@ -1,44 +0,0 @@

----

-title: Building client-server Applications

----

-

-import Tabs from '@theme/Tabs';

-import TabItem from '@theme/TabItem';

-import FilteredTextBlock from '@site/src/components/Documentation/FilteredTextBlock';

-

-

-## Overview

-

-When building web applications in JavaScript with Weaviate using the [weaviate-client](https://www.npmjs.com/package/weaviate-client), we recommend using a client-server architecture.

-

-How you implement it depends on the tools you use to build your web application.

-

-Fullstack frameworks like Next.js support server-side development and API creation, so your server code can communicate with Weaviate. Your client code reaches that server code through REST calls or, in Next.js specifically, through Server Functions. This approach couples your client and server applications.

-

-Backend web frameworks like Express let you create an API that communicates with Weaviate. Your client application then consumes this API through REST calls. This approach completely decouples your client and server applications.

-

-

-### Prerequisites

-

-- A Node.js environment with `weaviate-client` installed.

-- Familiarity with Weaviate's search capabilities.

-Some experience building modern web applications with JavaScript.

-- Intermediate coding proficiency (e.g. JavaScript).

-

-## Learning objectives

-

-import LearningGoalsExp from '/src/components/Academy/learningGoalsExp.mdx';

-


-

-

-  -

-Generative models encompass many types of models; here we will focus specifically on large language models (LLMs).

-

-## When to use Generative Models

-

-Generative models are central to retrieval augmented generation (RAG) and agentic workflows. They are well suited to tasks such as:

-

-- **Translation:** Models can perform zero-shot translation of text from one language to another, often with high accuracy.

-- **Code Generation:** Models can take high-level instructions and turn them into functional custom code.

-- **Image Generation:** Models can generate high-quality images from text instructions in a prompt.

-

-

-## Applications of Generative Models

-

-

-Large Language Models (LLMs), like the [Claude](https://www.anthropic.com/claude) family by Anthropic or [Gemini](https://cloud.google.com/vertex-ai/generative-ai/docs/model-reference/inference) by Google, are specialized types of generative models focused on text data. Like most machine learning models, they are typically limited to one or more modalities.

-

-We use the term modality to describe the type of input or output that a machine learning model can process or interact with. Typically, generative models fall into two buckets: uni-modal or multimodal.

-

-

-- **Uni-modal Generation:** In the context of LLMs, uni-modal generation describes a model's ability to receive instructions and generate content in a single modality, which is usually text.

-

-

-


-

-

- Example

-

-

-

-### Close the connection

-

-After you have finished using the Weaviate client, you should close the connection. This frees up resources and ensures that the connection is properly closed.

-

-We suggest using a `try`-`finally` block as a best practice. For brevity, we will not include the `try`-`finally` blocks in the remaining code snippets.
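Here is a sketch of that pattern. So that it runs without a Weaviate instance, it uses a stand-in. `FakeClient`, `Closable`, and `withClient` are hypothetical names. The only assumption about the real client is that it exposes an async `close()` method.

```typescript
// Minimal shape we rely on: something that can be closed asynchronously.
interface Closable {
  close(): Promise<void>;
}

// Hypothetical stand-in for a connected client, used only for illustration.
class FakeClient implements Closable {
  closed = false;
  async isReady(): Promise<boolean> {
    return !this.closed;
  }
  async close(): Promise<void> {
    this.closed = true;
  }
}

// Run `work` with a client and always close the client afterwards,
// whether `work` succeeds or throws.
async function withClient<C extends Closable, R>(
  connect: () => Promise<C>,
  work: (client: C) => Promise<R>,
): Promise<R> {
  const client = await connect();
  try {
    // `await` here ensures `finally` runs only after `work` has settled.
    return await work(client);
  } finally {
    await client.close();
  }
}
```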

-

-Example getMeta() output

-

-

-

-

-Next, you will create a corresponding object collection and import the data.

-

-## Questions and feedback

-

-import DocsFeedback from '/_includes/docs-feedback.mdx';

-

-See sample text data

-
-| | backdrop_path | genre_ids | id | original_language | original_title | overview | popularity | poster_path | release_date | title | video | vote_average | vote_count |
-|---:|:---|:---|---:|:---|:---|:---|---:|:---|:---|:---|:---|---:|---:|
-| 0 | /3Nn5BOM1EVw1IYrv6MsbOS6N1Ol.jpg | [14, 18, 10749] | 162 | en | Edward Scissorhands | A small suburban town receives a visit from a castaway unfinished science experiment named Edward. | 45.694 | /1RFIbuW9Z3eN9Oxw2KaQG5DfLmD.jpg | 1990-12-07 | Edward Scissorhands | False | 7.7 | 12305 |
-| 1 | /sw7mordbZxgITU877yTpZCud90M.jpg | [18, 80] | 769 | en | GoodFellas | The true story of Henry Hill, a half-Irish, half-Sicilian Brooklyn kid who is adopted by neighbourhood gangsters at an early age and climbs the ranks of a Mafia family under the guidance of Jimmy Conway. | 57.228 | /aKuFiU82s5ISJpGZp7YkIr3kCUd.jpg | 1990-09-12 | GoodFellas | False | 8.5 | 12106 |
-| 2 | /6uLhSLXzB1ooJ3522ydrBZ2Hh0W.jpg | [35, 10751] | 771 | en | Home Alone | Eight-year-old Kevin McCallister makes the most of the situation after his family unwittingly leaves him behind when they go on Christmas vacation. But when a pair of bungling burglars set their sights on Kevin's house, the plucky kid stands ready to defend his territory. By planting booby traps galore, adorably mischievous Kevin stands his ground as his frantic mother attempts to race home before Christmas Day. | 3.538 | /onTSipZ8R3bliBdKfPtsDuHTdlL.jpg | 1990-11-16 | Home Alone | False | 7.4 | 10599 |
-| 3 | /vKp3NvqBkcjHkCHSGi6EbcP7g4J.jpg | [12, 35, 878] | 196 | en | Back to the Future Part III | The final installment of the Back to the Future trilogy finds Marty digging the trusty DeLorean out of a mineshaft and looking for Doc in the Wild West of 1885. But when their time machine breaks down, the travelers are stranded in a land of spurs. More problems arise when Doc falls for pretty schoolteacher Clara Clayton, and Marty tangles with Buford Tannen. | 28.896 | /crzoVQnMzIrRfHtQw0tLBirNfVg.jpg | 1990-05-25 | Back to the Future Part III | False | 7.5 | 9918 |
-| 4 | /3tuWpnCTe14zZZPt6sI1W9ByOXx.jpg | [35, 10749] | 114 | en | Pretty Woman | When a millionaire wheeler-dealer enters a business contract with a Hollywood hooker Vivian Ward, he loses his heart in the bargain. | 97.953 | /hVHUfT801LQATGd26VPzhorIYza.jpg | 1990-03-23 | Pretty Woman | False | 7.5 | 7671 |
-

-

-

-Query image

- - - -

-  -

- -

- -

- -

- -

-Weaviate output:

-

-```text

-Interstellar 2014 157336

-Distance to query: 0.354

-

-Gravity 2013 49047

-Distance to query: 0.384

-

-Arrival 2016 329865

-Distance to query: 0.386

-

-Armageddon 1998 95

-Distance to query: 0.400

-

-Godzilla 1998 929

-Distance to query: 0.441

-```

-

-

-


-

-

-

-### Response object

-

-The returned object is an instance of a custom class. Its `objects` attribute is a list of search results, each object being an instance of another custom class.

-

-Each returned object will:

-- Include all properties and its UUID by default except those with blob data types.

- - Since the `poster` property is a blob, it is not included by default.

- - To include the `poster` property, you must specify it and the other properties to fetch in the `returnProperties` parameter.

-- Not include any other information (e.g. references, metadata, vectors) by default.
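To make this shape concrete, the sketch below formats results like the "Weaviate output" examples in this lesson. The interfaces are simplified stand-ins written for illustration, not the client's actual type definitions. Several details are assumptions: the property names `title`, `release_date`, and `tmdb_id` for the "Movie" collection, and a `metadata.distance` field that is present only when the query requested distance metadata.

```typescript
// Simplified, hypothetical shapes for illustration only.
interface MovieProperties {
  title: string;
  release_date: string | Date;
  tmdb_id: number;
}

interface ResultObject {
  uuid: string;
  properties: MovieProperties;
  metadata?: { distance?: number };
}

interface QueryResponse {
  objects: ResultObject[];
}

// Produce "<title> <year> <id>" plus an optional distance line per result,
// separating results with a blank line.
function formatResults(response: QueryResponse): string {
  return response.objects
    .map((obj) => {
      const { title, release_date, tmdb_id } = obj.properties;
      const date = release_date instanceof Date ? release_date : new Date(release_date);
      const lines = [`${title} ${date.getUTCFullYear()} ${tmdb_id}`];
      const distance = obj.metadata?.distance;
      if (distance !== undefined) {
        lines.push(`Distance to query: ${distance.toFixed(3)}`);
      }
      return lines.join("\n");
    })
    .join("\n\n");
}
```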

-

-

-## Text search

-

-### Code

-

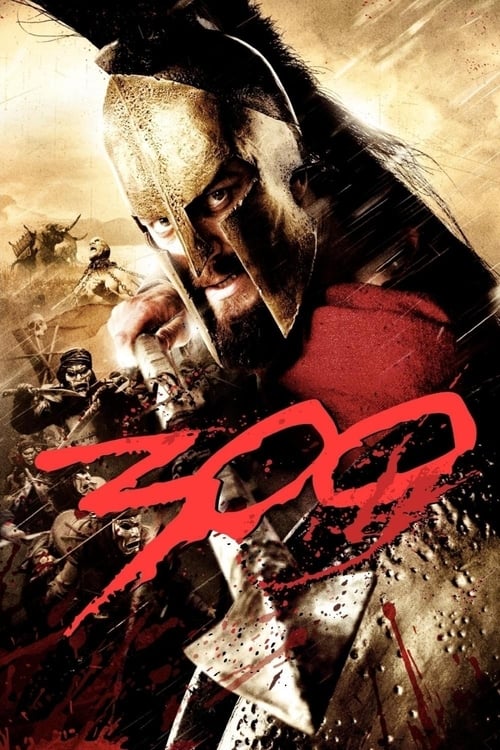

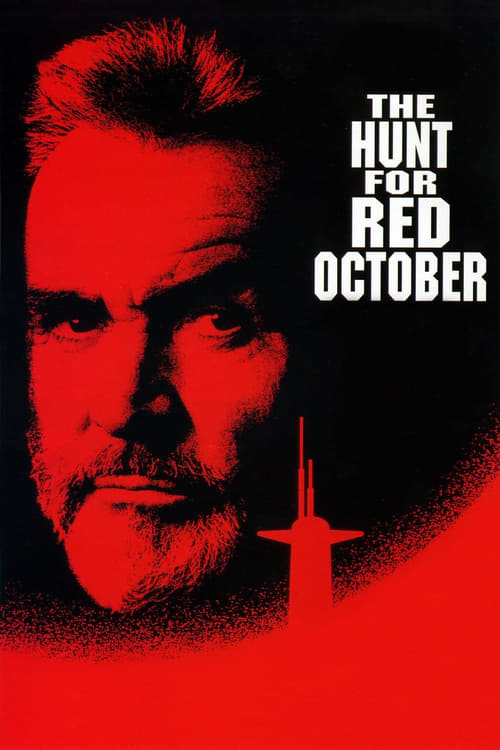

-This example finds entries in "Movie" based on their similarity to the query "red", and prints out the title and release year of the top 5 matches.

-

-Example results

-
-Posters for the top 5 matches:
-

- -

- -

- -

- -

-Weaviate output:

-

-```text

-Interstellar 2014 157336

-Distance to query: 0.354

-

-Gravity 2013 49047

-Distance to query: 0.384

-

-Arrival 2016 329865

-Distance to query: 0.386

-

-Armageddon 1998 95

-Distance to query: 0.400

-

-Godzilla 1998 929

-Distance to query: 0.441

-```

-

-

-


-

-

-  -

- -

- -

- -

- -

-Weaviate output:

-

-```text

-Deadpool 2 2018 383498

-Distance to query: 0.670

-

-Bloodshot 2020 338762

-Distance to query: 0.677

-

-Deadpool 2016 293660

-Distance to query: 0.678

-

-300 2007 1271

-Distance to query: 0.682

-

-The Hunt for Red October 1990 1669

-Distance to query: 0.683

-```

-

-

-


-

-

-

-### Response object

-

-The returned object is in the same format as in the previous example.

-

-

-## Questions and feedback

-

-import DocsFeedback from '/_includes/docs-feedback.mdx';

-

-Example results

-
-Posters for the top 5 matches:
-

- -

- -

- -

- -

-Weaviate output:

-

-```text

-Deadpool 2 2018 383498

-Distance to query: 0.670

-

-Bloodshot 2020 338762

-Distance to query: 0.677

-

-Deadpool 2016 293660

-Distance to query: 0.678

-

-300 2007 1271

-Distance to query: 0.682

-

-The Hunt for Red October 1990 1669

-Distance to query: 0.683

-```

-

-

-


-

-

-

-

-

-## Hybrid search

-

-### Code

-

-This example finds entries in "Movie" with the highest hybrid search scores for the term "history", and prints out the title and release year of the top 5 matches.
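Hybrid search runs a keyword (BM25) search and a vector search and fuses the two result lists into one ranking. The sketch below shows one textbook fusion method, reciprocal rank fusion with `k = 60` and an `alpha` weight between the lists. It is an illustration under those assumptions, not Weaviate's implementation. Weaviate offers more than one fusion method, and its defaults may differ between versions. `reciprocalRankFusion` is a hypothetical name.

```typescript
// Textbook reciprocal rank fusion (RRF) with an alpha weight between the two
// lists. Illustration only; Weaviate's own fusion code and defaults may differ.
function reciprocalRankFusion(
  keywordRanking: string[], // IDs ordered best-first by BM25 score
  vectorRanking: string[], // IDs ordered best-first by vector distance
  alpha = 0.5, // weight of the vector list; (1 - alpha) weights the keyword list
  k = 60, // smoothing constant from the original RRF formulation
): Array<{ id: string; score: number }> {
  const scores = new Map<string, number>();
  const addList = (ranking: string[], weight: number) => {
    ranking.forEach((id, index) => {
      const rank = index + 1; // ranks are 1-based
      scores.set(id, (scores.get(id) ?? 0) + weight / (k + rank));
    });
  };
  addList(keywordRanking, 1 - alpha);
  addList(vectorRanking, alpha);
  // Highest fused score first.
  return Array.from(scores, ([id, score]) => ({ id, score })).sort((a, b) => b.score - a.score);
}
```

An object that ranks moderately well in both lists can outrank one that tops only a single list. That is the behavior hybrid search is designed to exploit.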

-

-Example results

-
-```text
-American History X 1998
-BM25 score: 2.707
-
-A Beautiful Mind 2001
-BM25 score: 1.896
-
-Legends of the Fall 1994
-BM25 score: 1.663
-
-Hacksaw Ridge 2016
-BM25 score: 1.554
-
-Night at the Museum 2006
-BM25 score: 1.529
-```
-

-

-

-

-## Questions and feedback

-

-import DocsFeedback from '/_includes/docs-feedback.mdx';

-

-Example results

-
-```text
-Legends of the Fall 1994
-Hybrid score: 0.016
-
-Hacksaw Ridge 2016
-Hybrid score: 0.016
-
-A Beautiful Mind 2001
-Hybrid score: 0.015
-
-The Butterfly Effect 2004
-Hybrid score: 0.015
-
-Night at the Museum 2006
-Hybrid score: 0.012
-```
-

-

-

-

-## Questions and feedback

-

-import DocsFeedback from '/_includes/docs-feedback.mdx';

-

-Example results

-
-```text
-Dune 2021
-Distance to query: 0.199
-
-Tenet 2020
-Distance to query: 0.200
-
-Mission: Impossible - Dead Reckoning Part One 2023
-Distance to query: 0.207
-
-Onward 2020
-Distance to query: 0.214
-
-Jurassic World Dominion 2022
-Distance to query: 0.216
-```
-

-

-

-### Response object

-

-Each response object is similar to that from a regular search query, with an additional `generated` attribute. This attribute will contain the generated output for each object.
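Here is a minimal sketch of consuming per-object output, for example to print each title next to its translation. `GenerativeObject` is a simplified, hypothetical stand-in for the client's result type. It assumes each object has a `title` property and an optional `generated` string.

```typescript
// Hypothetical, simplified result shape for a per-object generative query.
interface GenerativeObject {
  properties: { title: string };
  generated?: string; // present when the query included a per-object prompt
}

// Print each title followed by the text generated for that object,
// with a placeholder when no output was returned.
function formatGenerated(objects: GenerativeObject[]): string {
  return objects
    .map((obj) => `${obj.properties.title}\n${obj.generated ?? "(no generated output)"}`)
    .join("\n");
}
```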

-

-

-## Questions and feedback

-

-import DocsFeedback from '/_includes/docs-feedback.mdx';

-

-Example results

-
-```text
-Interstellar
-Interstellaire
-Gravity
-Gravité
-Arrival
-Arrivée
-Armageddon
-Armageddon
-Godzilla
-Godzilla
-```
-

-

-

-### Optional parameters

-

-You can also pass a list of properties to use as the `groupedProperties` parameter. This can reduce the amount of data passed to the large language model and omit irrelevant properties.
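To see why restricting properties helps, the sketch below builds a text context from objects with and without a property allowlist. It only imitates the effect of `groupedProperties` on how much text reaches the model. `buildContext` is a hypothetical helper, the data is placeholder data, and Weaviate's actual prompt construction is not shown here.

```typescript
type Properties = Record<string, unknown>;

// Hypothetical helper: serialize each object's properties on its own line,
// keeping only the keys in `allowed` when an allowlist is given.
function buildContext(objects: Properties[], allowed?: string[]): string {
  return objects
    .map((obj) => {
      const picked: Properties = {};
      for (const [key, value] of Object.entries(obj)) {
        if (!allowed || allowed.includes(key)) {
          picked[key] = value;
        }
      }
      return JSON.stringify(picked);
    })
    .join("\n");
}

// Placeholder data: one object with a short title and a longer text field.
const sample: Properties[] = [{ title: "Movie A", overview: "Long synopsis text", popularity: 1.5 }];
console.log(buildContext(sample).length, buildContext(sample, ["title"]).length);
```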

-

-### Response object

-

-A RAG query with the `groupedTask` parameter will return a response with an additional `generated` attribute. This attribute will contain the generated output for the set of objects.

-


-

-Example results

-
-```text
-Interstellar
-Gravity
-Arrival
-Armageddon
-Godzilla
-These movies all involve space exploration, extraterrestrial beings, or catastrophic events threatening Earth. They all deal with themes of survival, human ingenuity, and the unknown mysteries of the universe.
-```
-

- Example

-

-

-

-### Close the connection

-

-After you have finished using the Weaviate client, you should close the connection. This frees up the resources, such as open network connections, that the client holds.

-

-We suggest using a `try`-`finally` block as a best practice. For brevity, we will not include the `try`-`finally` blocks in the remaining code snippets.
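The `try`-`finally` pattern guarantees that the close step runs even if a query raises an error. The sketch below demonstrates this with a hypothetical `DummyClient` class standing in for the Weaviate client, so it runs without a server.

With the real Python client the structure is the same. Recent versions of the v4 client can also be used as a context manager (`with weaviate.connect_to_local() as client:`), which closes the connection automatically.

```python
class DummyClient:
    """Hypothetical stand-in for a database client; not the Weaviate API."""

    def __init__(self):
        self.is_open = True

    def query(self, text):
        if not self.is_open:
            raise RuntimeError("client is closed")
        if text == "bad query":
            raise ValueError("query failed")
        return f"results for {text!r}"

    def close(self):
        self.is_open = False


client = DummyClient()
try:
    print(client.query("history"))
    client.query("bad query")  # raises, but close() still runs below
except ValueError as err:
    print("Query error:", err)
finally:
    client.close()  # always executed, releasing the connection

print("Client still open?", client.is_open)
```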

-

-Example getMeta() output

-

-

-

-

-Next, you will create a corresponding object collection and import the data.

-


-

-See sample data

-
-| | backdrop_path | genre_ids | id | original_language | original_title | overview | popularity | poster_path | release_date | title | video | vote_average | vote_count |
-|---:|:---|:---|---:|:---|:---|:---|---:|:---|:---|:---|:---|---:|---:|
-| 0 | /3Nn5BOM1EVw1IYrv6MsbOS6N1Ol.jpg | [14, 18, 10749] | 162 | en | Edward Scissorhands | A small suburban town receives a visit from a castaway unfinished science experiment named Edward. | 45.694 | /1RFIbuW9Z3eN9Oxw2KaQG5DfLmD.jpg | 1990-12-07 | Edward Scissorhands | False | 7.7 | 12305 |
-| 1 | /sw7mordbZxgITU877yTpZCud90M.jpg | [18, 80] | 769 | en | GoodFellas | The true story of Henry Hill, a half-Irish, half-Sicilian Brooklyn kid who is adopted by neighbourhood gangsters at an early age and climbs the ranks of a Mafia family under the guidance of Jimmy Conway. | 57.228 | /aKuFiU82s5ISJpGZp7YkIr3kCUd.jpg | 1990-09-12 | GoodFellas | False | 8.5 | 12106 |
-| 2 | /6uLhSLXzB1ooJ3522ydrBZ2Hh0W.jpg | [35, 10751] | 771 | en | Home Alone | Eight-year-old Kevin McCallister makes the most of the situation after his family unwittingly leaves him behind when they go on Christmas vacation. But when a pair of bungling burglars set their sights on Kevin's house, the plucky kid stands ready to defend his territory. By planting booby traps galore, adorably mischievous Kevin stands his ground as his frantic mother attempts to race home before Christmas Day. | 3.538 | /onTSipZ8R3bliBdKfPtsDuHTdlL.jpg | 1990-11-16 | Home Alone | False | 7.4 | 10599 |
-| 3 | /vKp3NvqBkcjHkCHSGi6EbcP7g4J.jpg | [12, 35, 878] | 196 | en | Back to the Future Part III | The final installment of the Back to the Future trilogy finds Marty digging the trusty DeLorean out of a mineshaft and looking for Doc in the Wild West of 1885. But when their time machine breaks down, the travelers are stranded in a land of spurs. More problems arise when Doc falls for pretty schoolteacher Clara Clayton, and Marty tangles with Buford Tannen. | 28.896 | /crzoVQnMzIrRfHtQw0tLBirNfVg.jpg | 1990-05-25 | Back to the Future Part III | False | 7.5 | 9918 |
-| 4 | /3tuWpnCTe14zZZPt6sI1W9ByOXx.jpg | [35, 10749] | 114 | en | Pretty Woman | When a millionaire wheeler-dealer enters a business contract with a Hollywood hooker Vivian Ward, he loses his heart in the bargain. | 97.953 | /hVHUfT801LQATGd26VPzhorIYza.jpg | 1990-03-23 | Pretty Woman | False | 7.5 | 7671 |
-

-

-

-### Response object

-

-The returned object is an instance of a custom class. Its `objects` attribute is a list of search results, each object being an instance of another custom class.

-

-Each returned object will:

-- Include all of its properties (except those with the `blob` data type) and its UUID by default.

-- Not include any other information (e.g. references, metadata, or vectors) by default.
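To make the shape of the response concrete, the sketch below models it with hypothetical dataclasses. A response has an `objects` list, and each object carries a `uuid` and a `properties` dictionary, mirroring the attribute names used by the Weaviate Python client.

These classes are illustrative only; the real client returns its own types. The `release_year` property name is also an assumption. The optional fields default to `None`, matching the "not included by default" behavior described above.

```python
from dataclasses import dataclass, field
from typing import Any, Dict, List, Optional
from uuid import UUID, uuid4


@dataclass
class ReturnedObject:
    """Hypothetical model of one search result."""
    uuid: UUID
    properties: Dict[str, Any]
    # Not populated unless explicitly requested in the query
    references: Optional[Dict[str, Any]] = None
    metadata: Optional[Dict[str, Any]] = None
    vector: Optional[List[float]] = None


@dataclass
class QueryResponse:
    """Hypothetical model of a query response."""
    objects: List[ReturnedObject] = field(default_factory=list)


response = QueryResponse(objects=[
    ReturnedObject(uuid=uuid4(), properties={"title": "Gattaca", "release_year": 1997}),
    ReturnedObject(uuid=uuid4(), properties={"title": "I, Robot", "release_year": 2004}),
])

for obj in response.objects:
    print(obj.uuid, obj.properties["title"], obj.properties["release_year"], obj.vector)
```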

-

-


-

-Example results

-
-```text
-In Time 2011
-Distance to query: 0.179
-
-Gattaca 1997
-Distance to query: 0.180
-
-I, Robot 2004
-Distance to query: 0.182
-
-Mad Max: Fury Road 2015
-Distance to query: 0.190
-
-The Maze Runner 2014
-Distance to query: 0.193
-```
-

-

-

-

-## Hybrid search

-

-### Code

-

-This example finds entries in "Movie" with the highest hybrid search scores for the term "history", and prints out the title and release year of the top 5 matches.

-

-Example results

-
-```text
-American History X 1998
-BM25 score: 2.707
-
-A Beautiful Mind 2001
-BM25 score: 1.896
-
-Legends of the Fall 1994
-BM25 score: 1.663
-
-Hacksaw Ridge 2016
-BM25 score: 1.554
-
-Night at the Museum 2006
-BM25 score: 1.529
-```
-

-

-

-


-

-Example results

-
-```text
-Legends of the Fall 1994
-Hybrid score: 0.016
-
-Hacksaw Ridge 2016
-Hybrid score: 0.016
-
-A Beautiful Mind 2001
-Hybrid score: 0.015
-
-The Butterfly Effect 2004
-Hybrid score: 0.015
-
-Night at the Museum 2006
-Hybrid score: 0.012
-```
-

-

-

-


-

-Example results

-
-```text
-Dune 2021
-Distance to query: 0.199
-
-Tenet 2020
-Distance to query: 0.200
-
-Mission: Impossible - Dead Reckoning Part One 2023
-Distance to query: 0.207
-
-Onward 2020
-Distance to query: 0.214
-
-Jurassic World Dominion 2022
-Distance to query: 0.216
-```
-

-

-

-### Response object

-

-Each response object is similar to that from a regular search query, with an additional `generated` attribute. This attribute will contain the generated output for each object.

-

-


-

-Example results

-
-```text
-In Time
-À temps
-Looper
-Boucleur
-I, Robot
-Je, Robot
-The Matrix
-La Matrice
-Children of Men
-Les enfants des hommes
-```
-

-

-

-### Optional parameters

-

-You can also pass a list of properties to use with the `groupedProperties` parameter. This reduces the amount of data passed to the large language model and lets you omit irrelevant properties.

-

-### Response object

-

-A RAG query with the `groupedTask` parameter will return a response with an additional `generated` attribute. This attribute will contain the generated output for the set of objects.

-


-

-Example results

-
-```text
-In Time
-Looper
-I, Robot
-The Matrix
-Children of Men
-These movies all involve futuristic settings and explore themes related to the manipulation of time, technology, and the potential consequences of advancements in society. They also touch on issues such as inequality, control, and the impact of human actions on the future of humanity.
-```
-

-## Install Python

-

-### Is Python installed?

-

-Open a terminal window (e.g. bash, zsh, Windows PowerShell, Windows Terminal), and run:

-

-```shell

-python --version

-```

-

-If that did not work, you may need to use `python3` instead of `python`:

-

-```shell

-python3 --version

-```

-

-If you have Python installed, you should see a response like `Python 3.11.8`. If you have Python 3.8 or higher installed, you can skip the remainder of this section.
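If you prefer to check the version from inside Python (for example, in a setup script), `sys.version_info` gives the same information as a tuple that can be compared directly. The 3.8 minimum below is the one stated above.

```python
import sys

MIN_VERSION = (3, 8)  # minimum version stated above


def python_version_ok(version_info=sys.version_info, minimum=MIN_VERSION):
    """Return True if the given (or running) interpreter meets the minimum version."""
    return tuple(version_info[:2]) >= minimum


status = "OK" if python_version_ok() else "too old"
print("Python", sys.version.split()[0], status)
```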

-

-### Install Python

-

-To install, follow the instructions for your system on [Python.org](https://www.python.org/downloads/).

-

-Once you have Python installed, check the version again to confirm that you have a recommended version installed.

-

-:::tip Advanced option: `pyenv`

-Another good way to install Python is to install `pyenv`. This will allow you to manage multiple versions of Python on your system. You can find instructions on how to install `pyenv` [here](https://github.com/pyenv/pyenv?tab=readme-ov-file#installation).

-:::

-

-## Set up a virtual environment

-

-A virtual environment allows you to isolate various Python projects from each other. This is useful because it allows you to install dependencies for each project without affecting the others.

-

-### Create a virtual environment

-

-We recommend using `venv` to create a virtual environment. Navigate to your project directory (e.g. `cd PATH/TO/PROJECT`), and run:

-

-```shell

-python -m venv .venv

-```

-

-Or, if `python3` is your Python command:

-

-```shell

-python3 -m venv .venv

-```

-

-This will create a virtual environment in a directory called `.venv` in your project directory.
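The `python -m venv .venv` command is a front end to the standard-library `venv` module, which you can also call from Python.

This sketch creates an environment in a temporary directory, so it does not touch your project. It also skips installing `pip` to keep it fast. In a real project you would target `.venv` and pass `with_pip=True`.

```python
import tempfile
import venv
from pathlib import Path


def create_env(target, with_pip=False):
    """Create a virtual environment at `target` and return its path."""
    builder = venv.EnvBuilder(with_pip=with_pip)
    builder.create(target)
    return Path(target)


with tempfile.TemporaryDirectory() as tmp:
    env_dir = create_env(Path(tmp) / ".venv")
    # Every venv contains a pyvenv.cfg file describing its base interpreter
    print("pyvenv.cfg created:", (env_dir / "pyvenv.cfg").is_file())
```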

-

-### Activate the virtual environment

-

-Each virtual environment can be 'activated' and 'deactivated'. When activated, the Python commands you run will use the Python version and libraries installed in the virtual environment.

-

-To activate the virtual environment, go to your project directory and run:

-

-```shell

-source .venv/bin/activate

-```

-

-Or, if you are using Windows:
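On Windows, `venv` places its activation scripts in a `Scripts` folder rather than `bin`, so the command is typically `.venv\Scripts\activate` (in Command Prompt or PowerShell).

Whichever platform you use, you can confirm from Python that an environment is active. Inside a `venv` environment, `sys.prefix` points at the environment, while `sys.base_prefix` still points at the base Python installation. The small check below relies on that behavior.

```python
import sys


def in_virtualenv():
    """Return True when running inside a venv-style virtual environment."""
    # venv changes sys.prefix; sys.base_prefix keeps pointing at the base install
    return sys.prefix != getattr(sys, "base_prefix", sys.prefix)


print("Virtual environment active:", in_virtualenv())
print("Interpreter prefix:", sys.prefix)
```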

-

-

-

-## Install Python

-

-### Is Python installed?

-

-Open a terminal window (e.g. bash, zsh, Windows PowerShell, Windows Terminal), and run:

-

-```shell

-python --version

-```

-

-If that did not work, you may need to use `python3` instead of `python`:

-

-```shell

-python3 --version

-```

-

-If you have Python installed, you should see a response like `Python 3.11.8`. If you have Python 3.8 or higher installed, you can skip the remainder of this section.

-

-### Install Python

-

-To install, follow the instructions for your system on [Python.org](https://www.python.org/downloads/).

-

-Once you have Python installed, check the version again to confirm that you have a recommended version installed.

-

-:::tip Advanced option: `pyenv`

-Another good way to install Python is to install `pyenv`. This will allow you to manage multiple versions of Python on your system. You can find instructions on how to install `pyenv` [here](https://github.com/pyenv/pyenv?tab=readme-ov-file#installation).

-:::

-

-## Set up a virtual environment

-

-A virtual environment allows you to isolate various Python projects from each other. This is useful because it allows you to install dependencies for each project without affecting the others.

-

-### Create a virtual environment

-

-We recommend using `venv` to create a virtual environment. Navigate to your project directory (e.g. `cd PATH/TO/PROJECT`), and run:

-

-```shell

-python -m venv .venv

-```

-

-Or, if `python3` is your Python command:

-

-```shell

-python3 -m venv .venv

-```

-

-This will create a virtual environment in a directory called `.venv` in your project directory.

-

-### Activate the virtual environment

-

-Each virtual environment can be 'activated' and 'deactivated'. When activated, the Python commands you run will use the Python version and libraries installed in the virtual environment.

-

-To activate the virtual environment, go to your project directory and run:

-

-```shell

-source .venv/bin/activate

-```

-

-Or, if you are using Windows:

-

-
-Additionally, there are many other environment management tools available, such as `conda`, `pipenv`, and `poetry`. If you are already using one of these tools, you can use it instead of `venv`.
-:::
-
-## Install the Weaviate client
-
-Now you can install the [Weaviate client library](../../weaviate/client-libraries/index.mdx), which makes it much easier to interact with Weaviate from Python.
-
-[Activate your virtual environment](#-activate-the-virtual-environment), then install the Weaviate client with:
-
-```shell
-pip install weaviate-client
-```
-
-### Confirm the installation
-
-To confirm that the Weaviate client is installed, run the following Python code:
-
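You can also confirm the installation without connecting to any server by looking up the installed distribution with the standard-library `importlib.metadata`. The distribution name `weaviate-client` is the one used in the `pip install` command above. The helper returns `None` when the package is not installed in the active environment.

```python
from importlib.metadata import PackageNotFoundError, version


def installed_version(distribution):
    """Return the installed version string of a distribution, or None."""
    try:
        return version(distribution)
    except PackageNotFoundError:
        return None


v = installed_version("weaviate-client")
if v is None:
    print("weaviate-client is not installed in this environment")
else:
    print("weaviate-client version:", v)
```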

-
-If you are using an earlier version of Weaviate, or have asynchronous indexing disabled, you will need to use a different configuration. Please refer to the [PQ configuration documentation](/weaviate/configuration/compression/pq-compression.md#manually-configure-pq) for more information.
-:::
-
-## Customize PQ
-
-Many PQ parameters are configurable. While the default settings are suitable for many use cases, you may want to customize the PQ configuration to suit your specific requirements.
-
-The example below shows how to configure PQ with custom settings, such as a smaller training set size and a different number of centroids.
-
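When choosing values such as the number of segments and centroids, it helps to estimate the memory involved. Product quantization splits each vector into `segments` sub-vectors and stores, for each one, the ID of its nearest centroid. With 256 centroids, each ID fits in one byte.

The sketch below estimates memory per vector under these assumptions:

- the original vectors use float32 components;
- each code uses the minimum whole number of bytes;
- codebook overhead is ignored.

The dimension and segment counts are illustrative, not recommendations.

```python
import math


def pq_bytes_per_vector(dimensions, segments, centroids=256, float_bytes=4):
    """Estimate memory per vector before and after product quantization.

    Assumes float32 components and that each segment code is stored in the
    smallest whole number of bytes that can index all centroids.
    """
    if dimensions % segments != 0:
        raise ValueError("dimensions must be divisible by segments")
    original = dimensions * float_bytes
    bytes_per_code = math.ceil(math.log2(centroids) / 8)
    compressed = segments * bytes_per_code
    return original, compressed


orig, comp = pq_bytes_per_vector(dimensions=768, segments=128)
print(f"{orig} bytes -> {comp} bytes ({orig / comp:.0f}x smaller)")
```

Fewer segments compress more aggressively but discard more detail, which typically lowers recall.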

-

-

-## Why use multi-tenancy?

-

-A typical multi-tenancy use case is a software-as-a-service (SaaS) application. In many SaaS applications, each end user or account has private data that should not be accessible to anyone else.

-

-### Example case study

-

-In this course, we'll learn about multi-tenancy by putting ourselves in the shoes of a developer building an application called `MyPrivateJournal`.

-

-`MyPrivateJournal` is a SaaS (software-as-a-service) application where users like *Steve*, *Alice* and so on can write and store their journal entries. Each user's entries should be private and not accessible to anyone else.

-

-Using single-tenant collections, you might implement this with:

-

-1. **A monolithic collection**: To store the entire dataset, with an end user identifier property

-1. **Per end-user collections**: Where each end user's data would be in a separate collection

-

-While these may work to some extent, both of these options have significant limitations.

-

-- Using a monolithic collection:

- - A developer mistake could easily expose Steve's entries to Alice, which would be a significant privacy breach.

- - As `MyPrivateJournal` grows, Steve's query would become slower as it must look through the entire collection.

- - When Steve asks `MyPrivateJournal` to delete his data, the process would be complex and error-prone.

-- Using end-user-specific collections:

 - `MyPrivateJournal` may need to spend more on hardware to support the high number of collections.

- - Changes to configurations (e.g. adding a new property) would need to be run separately for each collection.

-

-Multi-tenancy in Weaviate solves these problems by providing a way to isolate each user's data while sharing the same configuration.

-

-### Benefits of multi-tenancy

-

-In a multi-tenant collection, each "tenant" is isolated from the others, while all tenants share the same set of configurations. This arrangement makes multi-tenancy far more resource-efficient than using many individual collections.

-

-As a result, a Weaviate node can host many more tenants than it could single-tenant collections.

-

-It also makes developers' jobs easier, as there is only one set of collection configurations. The data isolation between tenants reduces the risk of accidental data leakage and makes it easier to manage individual tenants and their data.

-

-#### `MyPrivateJournal` and multi-tenancy

-

-So, the `MyPrivateJournal` app can use multi-tenancy and store each user's journal entries in a separate tenant. This way, Steve's entries are isolated from Alice's, and vice versa. This isolation makes it easier to manage each user's data and reduces the risk of data leakage.

-

-As you will see later, `MyPrivateJournal` can also offload inactive users' data to cold storage, reducing the hot (memory) and warm (disk) resource usage of the Weaviate node.

-

-## Tenants vs collections

-

-Each multi-tenant collection can have any number of tenants.

-

-A tenant is very similar to a single-tenant collection. For example:

-

-| Aspect | Tenant | Single-tenant collection |

-| ----- | ----- | ----- |

-| Objects | Belong to a tenant | Belong to a collection |

-| Vector indexes | Belong to a tenant | Belong to a collection |

-| Inverted indexes | Belong to a tenant | Belong to a collection |

-| Deletion | Deleting a tenant deletes all tenant data | Deleting a collection deletes all collection data |

-| Query | Can search one tenant at a time | Can search one collection at a time |
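A toy, in-memory model can make the rules in this table concrete. In this sketch:

- each tenant owns its own objects;
- a search is always scoped to exactly one tenant;
- deleting a tenant removes all of its data;
- the collection-level configuration is shared by every tenant.

The class and the `JournalEntry` name are hypothetical. This is not how Weaviate stores data internally.

```python
class MultiTenantCollection:
    """Toy model: one shared config, separate storage per tenant."""

    def __init__(self, name, config):
        self.name = name
        self.config = config          # shared by every tenant
        self._tenants = {}            # tenant name -> list of objects

    def create_tenant(self, tenant):
        self._tenants.setdefault(tenant, [])

    def insert(self, tenant, obj):
        if tenant not in self._tenants:
            raise KeyError(f"tenant {tenant!r} does not exist")
        self._tenants[tenant].append(obj)

    def search(self, tenant, term):
        # A query only ever sees one tenant's objects
        return [o for o in self._tenants[tenant] if term in o["text"].lower()]

    def delete_tenant(self, tenant):
        # Dropping the tenant drops all of its objects at once
        del self._tenants[tenant]


journal = MultiTenantCollection("JournalEntry", config={"properties": ["text"]})
journal.create_tenant("steve")
journal.create_tenant("alice")
journal.insert("steve", {"text": "Tried a new ramen place"})
journal.insert("alice", {"text": "Ramen night with friends"})
print(journal.search("steve", "ramen"))   # only Steve's entry
journal.delete_tenant("steve")
```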

-

-But as you will have guessed, there are also differences. We'll cover these in the next sections, as we follow `MyPrivateJournal` implementing multi-tenancy in Weaviate.

-


-

-"Multi-tenancy" in other contexts

-
-In general, the term "multi-tenancy" refers to a software architecture where a single instance of the software serves multiple "tenants". In that context, each tenant may be a group of users who share common access.
-
-This is similar to the concept of multi-tenancy in Weaviate, where each tenant is a group of data that is isolated from other tenants.
-

-


-

-### Configuration highlights

-

-You may have seen Docker configurations elsewhere ([e.g. Docs](/deploy/installation-guides/docker-installation.md), [Academy](../starter_text_data/101_setup_weaviate/20_create_instance/20_create_docker.mdx)). But these highlighted configurations may be new to you:

-

-- `ASYNC_INDEXING`: This will enable asynchronous indexing. This is useful for high-volume data insertion, and enables us to use the `dynamic` index type, which you will learn about later on.

-- `ENABLE_MODULES`: We enable `offload-s3` to demonstrate tenant offloading later on. Offloading helps us to manage inactive users' data efficiently.

-- `AWS_ACCESS_KEY_ID` and `AWS_SECRET_ACCESS_KEY`: These are the AWS credentials that Weaviate will use to access the S3 bucket.

-- `OFFLOAD_S3_BUCKET_AUTO_CREATE`: This will automatically create the S3 bucket if it does not exist.

-

-Save the file to `docker-compose.yaml`, and run the following command to start Weaviate:

-

-```bash

-docker compose up

-```
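Once the containers are up, you can poll Weaviate's readiness endpoint (`/v1/.well-known/ready`), which returns HTTP 200 when the instance is ready to serve requests.

This sketch assumes your compose file publishes Weaviate's HTTP port on `localhost:8080`. Adjust `base_url` otherwise, for example to `http://localhost:8180` for the first node of a multi-node setup. It uses only the standard library and returns `False` on any connection error.

```python
import urllib.error
import urllib.request


def weaviate_is_ready(base_url="http://localhost:8080", timeout=2.0):
    """Return True if GET {base_url}/v1/.well-known/ready answers with HTTP 200."""
    try:
        with urllib.request.urlopen(f"{base_url}/v1/.well-known/ready", timeout=timeout) as resp:
            return resp.status == 200
    except (urllib.error.URLError, OSError):
        # Connection refused, timeout, or an HTTP error status
        return False


if __name__ == "__main__":
    print("Weaviate ready:", weaviate_is_ready())
```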

-

-import OffloadingLimitation from '/_includes/offloading-limitation.mdx';

-

-What about a multi-node setup?

-
-Great question! As you probably noticed, we are using a single-node setup here for simplicity.
-
-But we can easily extend this to a multi-node setup by adding additional services. This allows you to scale your Weaviate instance horizontally and to provide fault tolerance with replication.
-
-For example, here is a multi-node setup with three nodes.
-
-```yaml
----
-services:
-  weaviate-node-1:  # Founding member service name
-    command:
-    - --host
-    - 0.0.0.0
-    - --port
-    - '8080'
-    - --scheme
-    - http
-    image: cr.weaviate.io/semitechnologies/weaviate:||site.weaviate_version||
-    restart: on-failure:0
-    ports:
-    - "8180:8080"
-    - 50151:50051
-    environment:
-      AUTOSCHEMA_ENABLED: 'false'
-      QUERY_DEFAULTS_LIMIT: 25
-      QUERY_MAXIMUM_RESULTS: 10000
-      AUTHENTICATION_ANONYMOUS_ACCESS_ENABLED: 'true'
-      PERSISTENCE_DATA_PATH: '/var/lib/weaviate'
-      ASYNC_INDEXING: 'true'
-      ENABLE_MODULES: 'text2vec-ollama,generative-ollama,backup-filesystem,offload-s3'
-      ENABLE_API_BASED_MODULES: 'true'
-      AWS_ACCESS_KEY_ID: ${AWS_ACCESS_KEY:-}
-      AWS_SECRET_ACCESS_KEY: ${AWS_SECRET_KEY:-}
-      OFFLOAD_S3_BUCKET_AUTO_CREATE: 'true'
-      BACKUP_FILESYSTEM_PATH: '/var/lib/weaviate/backups'
-      CLUSTER_HOSTNAME: 'node1'
-      CLUSTER_GOSSIP_BIND_PORT: '7100'
-      CLUSTER_DATA_BIND_PORT: '7101'
-  weaviate-node-2:  # Joins the cluster via weaviate-node-1
-    command:
-    - --host
-    - 0.0.0.0
-    - --port
-    - '8080'
-    - --scheme
-    - http
-    image: cr.weaviate.io/semitechnologies/weaviate:||site.weaviate_version||
-    restart: on-failure:0
-    ports:
-    - "8181:8080"
-    - 50152:50051
-    environment:
-      AUTOSCHEMA_ENABLED: 'false'
-      QUERY_DEFAULTS_LIMIT: 25
-      QUERY_MAXIMUM_RESULTS: 10000
-      AUTHENTICATION_ANONYMOUS_ACCESS_ENABLED: 'true'
-      PERSISTENCE_DATA_PATH: '/var/lib/weaviate'
-      ASYNC_INDEXING: 'true'
-      ENABLE_MODULES: 'text2vec-ollama,generative-ollama,backup-filesystem,offload-s3'
-      ENABLE_API_BASED_MODULES: 'true'
-      AWS_ACCESS_KEY_ID: ${AWS_ACCESS_KEY:-}
-      AWS_SECRET_ACCESS_KEY: ${AWS_SECRET_KEY:-}
-      OFFLOAD_S3_BUCKET_AUTO_CREATE: 'true'
-      BACKUP_FILESYSTEM_PATH: '/var/lib/weaviate/backups'
-      CLUSTER_HOSTNAME: 'node2'
-      CLUSTER_GOSSIP_BIND_PORT: '7102'
-      CLUSTER_DATA_BIND_PORT: '7103'
-      CLUSTER_JOIN: 'weaviate-node-1:7100'
-  weaviate-node-3:  # Joins the cluster via weaviate-node-1
-    command:
-    - --host
-    - 0.0.0.0
-    - --port
-    - '8080'
-    - --scheme
-    - http
-    image: cr.weaviate.io/semitechnologies/weaviate:||site.weaviate_version||
-    restart: on-failure:0
-    ports:
-    - "8182:8080"
-    - 50153:50051
-    environment:
-      AUTOSCHEMA_ENABLED: 'false'
-      QUERY_DEFAULTS_LIMIT: 25
-      QUERY_MAXIMUM_RESULTS: 10000
-      AUTHENTICATION_ANONYMOUS_ACCESS_ENABLED: 'true'
-      PERSISTENCE_DATA_PATH: '/var/lib/weaviate'
-      ASYNC_INDEXING: 'true'
-      ENABLE_MODULES: 'text2vec-ollama,generative-ollama,backup-filesystem,offload-s3'
-      ENABLE_API_BASED_MODULES: 'true'
-      AWS_ACCESS_KEY_ID: ${AWS_ACCESS_KEY:-}
-      AWS_SECRET_ACCESS_KEY: ${AWS_SECRET_KEY:-}
-      OFFLOAD_S3_BUCKET_AUTO_CREATE: 'true'
-      BACKUP_FILESYSTEM_PATH: '/var/lib/weaviate/backups'
-      CLUSTER_HOSTNAME: 'node3'
-      CLUSTER_GOSSIP_BIND_PORT: '7104'
-      CLUSTER_DATA_BIND_PORT: '7105'
-      CLUSTER_JOIN: 'weaviate-node-1:7100'
-...
-```
-
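The three services differ only in a few values, and those values follow a pattern:

- the published HTTP and gRPC ports;
- the gossip and data bind ports;
- `CLUSTER_JOIN`, which every node after the first sets.

A sketch of that pattern in Python, in case you want to extend the setup to more nodes. The helper is hypothetical and produces plain dictionaries, not a complete compose file.

```python
def node_settings(n):
    """Return the per-node values used by the multi-node example (1-based n)."""
    if n < 1:
        raise ValueError("node numbers start at 1")
    settings = {
        "service": f"weaviate-node-{n}",
        "ports": [f"{8180 + n - 1}:8080", f"{50151 + n - 1}:50051"],
        "CLUSTER_HOSTNAME": f"node{n}",
        "CLUSTER_GOSSIP_BIND_PORT": str(7100 + 2 * (n - 1)),
        "CLUSTER_DATA_BIND_PORT": str(7101 + 2 * (n - 1)),
    }
    if n > 1:
        # Non-founding nodes join the cluster through node 1's gossip port
        settings["CLUSTER_JOIN"] = "weaviate-node-1:7100"
    return settings


for n in range(1, 4):
    print(node_settings(n))
```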

 More about auto_tenant_creation

-

-:::info Added in `v1.25`

-The auto tenant creation feature is available from `v1.25.0` for batch imports, and from `v1.25.2` for single object insertions.

-:::

-

-Enabling `auto_tenant_creation` will cause Weaviate to automatically create the tenant when an object is inserted against a non-existent tenant.

-

-
-This option is particularly useful for bulk data ingestion, as it removes the need to create each tenant before inserting its objects. Instead, `auto_tenant_creation` lets the object insertion process continue without interruption.
-

-
-A risk of using `auto_tenant_creation` is that errors in the source data will not be caught during import. For example, a source object with the misspelled tenant name `"TenntOne"` instead of `"TenantOne"` will create a new tenant called `"TenntOne"` instead of raising an error.
-

-
-The server-side default for `auto_tenant_creation` is `false`.
-
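Because `auto_tenant_creation` accepts any tenant name, one way to reduce the typo risk described above is to validate tenant names in your import script before sending data. This sketch uses the standard-library `difflib` to flag names that are not in a known list but closely resemble one of its entries.

`KNOWN_TENANTS` and `check_tenant_names` are hypothetical, and the 0.8 similarity cutoff is an arbitrary choice.

```python
import difflib

KNOWN_TENANTS = ["TenantOne", "TenantTwo"]  # hypothetical list from your user database


def check_tenant_names(names, known=KNOWN_TENANTS, cutoff=0.8):
    """Return (ok, suspicious) where suspicious maps a name to its likely intended match."""
    ok, suspicious = [], {}
    for name in names:
        if name in known:
            ok.append(name)
            continue
        matches = difflib.get_close_matches(name, known, n=1, cutoff=cutoff)
        if matches:
            suspicious[name] = matches[0]   # probably a typo of an existing tenant
        else:
            ok.append(name)                 # looks like a genuinely new tenant
    return ok, suspicious


ok, suspicious = check_tenant_names(["TenantOne", "TenntOne", "TenantThree"])
print("OK:", ok)
print("Possible typos:", suspicious)
```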

-


 More about auto_tenant_activation

-

-:::info Added in `v1.25.2`

-The auto tenant activation feature is available from `v1.25.2`.

-:::

-

-If `auto_tenant_activation` is enabled, Weaviate will automatically activate any deactivated (`INACTIVE` or `OFFLOADED`) tenants when they are accessed.

-

-
-This option is particularly useful when you have a large number of tenants, but only a subset of them are active at any given time. An example is a SaaS app where some tenants are unlikely to be active at a given time due to their local time zone or their recent activity level.
-

-
-By enabling `auto_tenant_activation`, you can safely set those less active users to be inactive, knowing that they will be loaded into memory once requested.
-

-
-This can help to reduce the memory footprint of your Weaviate instance, as only the active tenants are loaded into memory.
-

-
-The server-side default for `auto_tenant_activation` is `false`.
-
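A toy model of the activation rule described above: accessing an `INACTIVE` or `OFFLOADED` tenant fails, unless auto activation is enabled, in which case the tenant first becomes `ACTIVE`. The enum and function are hypothetical stand-ins, not the client's classes.

```python
from enum import Enum


class Status(Enum):
    """Hypothetical stand-in for tenant activity statuses."""
    ACTIVE = "ACTIVE"
    INACTIVE = "INACTIVE"
    OFFLOADED = "OFFLOADED"


def access_tenant(statuses, tenant, auto_tenant_activation=False):
    """Toy model of reading from a tenant; returns its status afterwards."""
    status = statuses[tenant]
    if status is Status.ACTIVE:
        return status
    if not auto_tenant_activation:
        # Without auto activation, deactivated tenants cannot be queried
        raise RuntimeError(f"tenant {tenant!r} is {status.value}")
    # With auto activation, the tenant is activated (loaded) on access
    statuses[tenant] = Status.ACTIVE
    return Status.ACTIVE


tenants = {"steve": Status.ACTIVE, "alice": Status.INACTIVE, "bob": Status.OFFLOADED}
print(access_tenant(tenants, "alice", auto_tenant_activation=True).value)
try:
    access_tenant(tenants, "bob")
except RuntimeError as err:
    print("Error:", err)
```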

-

-### Configure vector index

-

-From what we know about other journal use cases, most users will only have a small number of entries, but a few users may have a large number of entries.

-

-This is a tricky situation to balance. An `hnsw` index will be fast for users with many entries, but it will require a lot of memory. A `flat` index will require less memory, but searches may be slower for users with many entries.

-

-What we can do here is to choose a `dynamic` index. A `dynamic` index starts as a `flat` index and automatically switches to `hnsw` once the number of objects passes a threshold. This way, we can balance memory usage and speed for our users.
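The switching rule can be sketched as a function of each tenant's object count. This is a toy model, not Weaviate's code. It assumes the switch happens once the count exceeds the threshold, and it uses 10,000 purely as an illustrative threshold. It models the change as a one-way upgrade from `flat` to `hnsw`.

```python
class DynamicIndexModel:
    """Toy model of a per-tenant index that upgrades from flat to hnsw."""

    def __init__(self, threshold=10_000):
        self.threshold = threshold
        self.count = 0
        self.index_type = "flat"   # brute-force search, low memory use

    def add(self, n=1):
        self.count += n
        # Modelled as a one-way upgrade: once hnsw, it stays hnsw
        if self.index_type == "flat" and self.count > self.threshold:
            self.index_type = "hnsw"
        return self.index_type


casual_user = DynamicIndexModel()
casual_user.add(120)
prolific_user = DynamicIndexModel()
prolific_user.add(25_000)
print(casual_user.index_type, prolific_user.index_type)
```

Most journal users stay on the cheap `flat` index, and only the few heavy users pay the memory cost of `hnsw`.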

-

-Here is an example code snippet, configuring a "note" named vector with a `dynamic` index.

-


-

-

-Data being inserted

-
-The objects to be added can be a list of dictionaries, as shown here. Note the use of `datetime` objects with a timezone for `DATE` type properties.
-
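For instance, a list of journal entries with timezone-aware `datetime` values could look like the sketch below. The property names (`text`, `date`, `tags`) are hypothetical.

A timezone-aware value serializes to an RFC 3339 timestamp with an explicit UTC offset, the format Weaviate uses for `DATE` properties. A naive `datetime` has no offset.

```python
from datetime import datetime, timedelta, timezone

entries = [  # hypothetical journal entries for one tenant
    {
        "text": "Tried a new ramen place downtown.",
        "date": datetime(2024, 5, 3, 19, 30, tzinfo=timezone.utc),
        "tags": ["food"],
    },
    {
        "text": "Long run along the river.",
        # A fixed UTC-5 offset, e.g. for a user on the US east coast in winter
        "date": datetime(2024, 1, 14, 7, 0, tzinfo=timezone(timedelta(hours=-5))),
        "tags": ["exercise"],
    },
]


def is_timezone_aware(dt):
    """A datetime is aware when it carries a usable UTC offset."""
    return dt.tzinfo is not None and dt.utcoffset() is not None


for entry in entries:
    if not is_timezone_aware(entry["date"]):
        raise ValueError("DATE values should include a timezone")
    print(entry["date"].isoformat())
```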

-

-

-### Search for entries

-

-Additionally, Steve might want to search for entries. For example, he might want to find entries relating to a particular food experience that he had.

-

-`MyPrivateJournal` can leverage Weaviate's `hybrid` search to help Steve find the most relevant entries.
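Besides rank-based fusion, Weaviate offers a relative score fusion method for combining the keyword and vector sides of a `hybrid` query. The idea can be sketched in two steps:

1. Min-max normalize each side's raw scores to the range [0, 1].
2. Take an `alpha`-weighted sum of the two normalized scores.

The function and the sample scores are hypothetical. This illustrates the technique rather than Weaviate's exact implementation.

```python
def relative_score_fusion(keyword_scores, vector_scores, alpha=0.5):
    """Fuse two {id: score} maps by min-max normalizing, then weighting.

    alpha weights the vector scores and (1 - alpha) the keyword scores.
    In both maps a higher score must mean "more relevant".
    """
    def normalize(scores):
        if not scores:
            return {}
        lo, hi = min(scores.values()), max(scores.values())
        if hi == lo:
            return {k: 1.0 for k in scores}   # all equal: treat as equally relevant
        return {k: (v - lo) / (hi - lo) for k, v in scores.items()}

    kw, vec = normalize(keyword_scores), normalize(vector_scores)
    fused = {
        key: (1 - alpha) * kw.get(key, 0.0) + alpha * vec.get(key, 0.0)
        for key in kw.keys() | vec.keys()
    }
    return sorted(fused.items(), key=lambda kv: kv[1], reverse=True)


# Hypothetical scores for three of Steve's entries
keyword = {"ramen": 2.4, "pizza": 0.9, "hike": 0.0}
vector = {"ramen": 0.82, "pizza": 0.78, "hike": 0.30}  # similarity: higher is better
for entry, score in relative_score_fusion(keyword, vector):
    print(f"{entry}: {score:.3f}")
```

Unlike rank-based fusion, this keeps information about how far apart the raw scores are, not just their order.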

-

-Example response

-
-Such a query should return a response like:
-

-

-

-You can see that the search syntax is essentially identical to that of a single-tenant collection. So, any search method available for a single-tenant collection can be applied to a multi-tenant collection.

-

-### Summary

-

-In this section, we learned how to work with tenants and their data in a multi-tenant collection. We saw how to create tenants, add data objects, and query tenant data.

-

-In the next section, we will learn how `MyPrivateJournal` can keep their application running smoothly and efficiently by managing tenants.

-


-

-Example response

- -

-

-
-To use tenant offloading in Weaviate, you need to enable a relevant offloading [module](../../../weaviate/configuration/modules.md). Depending on whether your deployment is on Docker or Kubernetes, you can enable the `offload-s3` module as shown below.
-

-

-

-```yaml

-services:

- weaviate:

- environment:

- # highlight-start

- ENABLE_MODULES: 'offload-s3' # plus other modules you may need

- OFFLOAD_S3_BUCKET: 'weaviate-offload' # the name of the S3 bucket

- OFFLOAD_S3_BUCKET_AUTO_CREATE: 'true' # create the bucket if it does not exist

- # highlight-end

-```

-

-

-

-

-```yaml

-offload:

- s3:

- enabled: true # Set this value to true to enable the offload-s3 module

- envconfig:

- OFFLOAD_S3_BUCKET: weaviate-offload # the name of the S3 bucket

- OFFLOAD_S3_BUCKET_AUTO_CREATE: true # create the bucket if it does not exist

-```

-

-

-

-

-If the target S3 bucket does not exist, the `OFFLOAD_S3_BUCKET_AUTO_CREATE` variable must be set to `true` so that Weaviate can create the bucket automatically.

-

-
-#### AWS permissions
-
-You must provide Weaviate with AWS authentication details. You can choose between access-key or ARN-based authentication.
-

-
-:::tip Requirements
-The Weaviate instance must have the [necessary permissions to access the S3 bucket](https://docs.aws.amazon.com/AmazonS3/latest/userguide/access-policy-language-overview.html).
-- The provided AWS identity must be able to write to the bucket.
-- If `OFFLOAD_S3_BUCKET_AUTO_CREATE` is set to `true`, the AWS identity must have permission to create the bucket.
-:::
-
-**Option 1: With IAM and ARN roles**
-

-
-The `offload-s3` module will first try to authenticate itself using AWS IAM. If that fails, it will try to authenticate with `Option 2`.
-

-
-**Option 2: With access key and secret access key**
-

-
-| Environment variable | Description |
-| --- | --- |
-| `AWS_ACCESS_KEY_ID` | The ID of the AWS access key for the desired account. |
-| `AWS_SECRET_ACCESS_KEY` | The secret AWS access key for the desired account. |
-| `AWS_REGION` | (Optional) The AWS Region. If not provided, the module will try to parse `AWS_DEFAULT_REGION`. |
-
-Once the `offload-s3` module is enabled, you can offload tenants to the S3 bucket by [setting their activity status](#offload-tenants) to `OFFLOADED`, or load them back to local storage by setting their status to `ACTIVE` or `INACTIVE`.
-
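The variable lookup in the table can be mirrored in a deployment script, for example to fail fast before starting Weaviate. This hypothetical helper reads an environment mapping and does three things:

- prefers `AWS_REGION` and falls back to `AWS_DEFAULT_REGION`;
- reports whether an access-key pair is present;
- reports whether bucket auto-creation is enabled. It treats bucket auto-creation as disabled unless the value is explicitly `true`, which is an assumption of this sketch.

It does not validate credentials with AWS.

```python
import os


def offload_s3_env_summary(env=None):
    """Summarize AWS settings relevant to offload-s3 (hypothetical helper)."""
    env = os.environ if env is None else env
    region = env.get("AWS_REGION") or env.get("AWS_DEFAULT_REGION")
    has_key_pair = bool(env.get("AWS_ACCESS_KEY_ID")) and bool(env.get("AWS_SECRET_ACCESS_KEY"))
    # Assumption of this sketch: auto-create counts as enabled only if set to "true"
    auto_create = env.get("OFFLOAD_S3_BUCKET_AUTO_CREATE", "").lower() == "true"
    return {
        "region": region,                 # None if neither variable is set
        "access_key_auth": has_key_pair,  # False means IAM-based auth must work instead
        "bucket_auto_create": auto_create,
    }


print(offload_s3_env_summary({"AWS_DEFAULT_REGION": "eu-west-1", "OFFLOAD_S3_BUCKET_AUTO_CREATE": "true"}))
```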

-

-#### Activate users

-

-Then, `MyPrivateJournal` can activate tenants as required. For example, it could activate a tenant when the user logs in, or based on the user's local time, as the inverse of the deactivation pattern.
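A minimal sketch of the time-based option, assuming a hypothetical policy in which each tenant should be active between 06:00 and 23:00 in its user's local time. Each user's time zone is represented as a fixed UTC offset (a simplification that ignores daylight saving time), and the tenant names are made up.

```python
from datetime import datetime, timedelta, timezone

# Hypothetical policy (not from Weaviate): a tenant should be ACTIVE from
# 06:00 until 23:00 in its user's local time.
ACTIVE_FROM_HOUR = 6
ACTIVE_UNTIL_HOUR = 23


def is_waking_hours(now_utc: datetime, utc_offset_hours: float) -> bool:
    """Return True if `now_utc`, converted to the user's local time, is in the window."""
    local_tz = timezone(timedelta(hours=utc_offset_hours))
    local_time = now_utc.astimezone(local_tz)
    return ACTIVE_FROM_HOUR <= local_time.hour < ACTIVE_UNTIL_HOUR


def tenants_to_activate(
    now_utc: datetime,
    tenant_offsets: dict[str, float],
    active_tenants: set[str],
) -> list[str]:
    """List currently inactive tenants whose users are now inside the active window."""
    return sorted(
        name
        for name, offset in tenant_offsets.items()
        if name not in active_tenants and is_waking_hours(now_utc, offset)
    )


if __name__ == "__main__":
    now = datetime(2024, 1, 1, 12, 0, tzinfo=timezone.utc)
    offsets = {"alice": 0, "bob": -10, "carol": 9}
    print(tenants_to_activate(now, offsets, active_tenants={"carol"}))  # ['alice']
```

The returned names could then be passed to your Weaviate client's tenant update call with an `ACTIVE` status. That step is left out here because it needs a running Weaviate instance. The login-based option is simpler: activate the user's tenant when they sign in.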

-

-How to set up offloading

-import OffloadingLimitation from '/_includes/offloading-limitation.mdx';

-

-To use tenant offloading in Weaviate, you need to enable a relevant offloading [module](../../../weaviate/configuration/modules.md). Depending on whether your deployment runs on Docker or Kubernetes, you can enable the `offload-s3` module as shown below.


- Example

-

-Note that this output is a little longer due to the additional details from the CLIP models.

-

-

-

-### Close the connection

-

-When you have finished using the Weaviate client, close the connection. This releases the resources that the client holds.

-

-As a best practice, we suggest using a `try`-`finally` block so that the connection is closed even if an error occurs. For brevity, the remaining code snippets omit the `try`-`finally` blocks.
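A minimal sketch of the pattern. To keep it runnable without a server, a hypothetical `DemoClient` class stands in for the Weaviate client; with the Weaviate Python client, you would call the client's `close()` method in the `finally` block in the same way.

```python
class DemoClient:
    """Hypothetical stand-in for a Weaviate client; it only records whether close() ran."""

    def __init__(self) -> None:
        self.closed = False

    def query(self, text: str) -> str:
        if not text:
            raise ValueError("empty query")
        return f"results for {text!r}"

    def close(self) -> None:
        self.closed = True


def run_queries(client: DemoClient, queries: list[str]) -> list[str]:
    try:
        return [client.query(q) for q in queries]
    finally:
        # Runs whether the queries succeed or raise, so the connection is always released.
        client.close()


if __name__ == "__main__":
    client = DemoClient()
    print(run_queries(client, ["space adventure"]))
    print("closed:", client.closed)
```

Because `close()` sits in the `finally` clause, it still runs when `query()` raises, which is the case a plain call at the end of the script would miss.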

-

-Example get_meta output

-


-

-

-

-

-## Questions and feedback

-

-import DocsFeedback from '/_includes/docs-feedback.mdx';

-

-See sample text data

-| | backdrop_path | genre_ids | id | original_language | original_title | overview | popularity | poster_path | release_date | title | video | vote_average | vote_count |

-|---:|:---|:---|---:|:---|:---|:---|---:|:---|:---|:---|:---|---:|---:|

-| 0 | /3Nn5BOM1EVw1IYrv6MsbOS6N1Ol.jpg | [14, 18, 10749] | 162 | en | Edward Scissorhands | A small suburban town receives a visit from a castaway unfinished science experiment named Edward. | 45.694 | /1RFIbuW9Z3eN9Oxw2KaQG5DfLmD.jpg | 1990-12-07 | Edward Scissorhands | False | 7.7 | 12305 |

-| 1 | /sw7mordbZxgITU877yTpZCud90M.jpg | [18, 80] | 769 | en | GoodFellas | The true story of Henry Hill, a half-Irish, half-Sicilian Brooklyn kid who is adopted by neighbourhood gangsters at an early age and climbs the ranks of a Mafia family under the guidance of Jimmy Conway. | 57.228 | /aKuFiU82s5ISJpGZp7YkIr3kCUd.jpg | 1990-09-12 | GoodFellas | False | 8.5 | 12106 |

-| 2 | /6uLhSLXzB1ooJ3522ydrBZ2Hh0W.jpg | [35, 10751] | 771 | en | Home Alone | Eight-year-old Kevin McCallister makes the most of the situation after his family unwittingly leaves him behind when they go on Christmas vacation. But when a pair of bungling burglars set their sights on Kevin's house, the plucky kid stands ready to defend his territory. By planting booby traps galore, adorably mischievous Kevin stands his ground as his frantic mother attempts to race home before Christmas Day. | 3.538 | /onTSipZ8R3bliBdKfPtsDuHTdlL.jpg | 1990-11-16 | Home Alone | False | 7.4 | 10599 |

-| 3 | /vKp3NvqBkcjHkCHSGi6EbcP7g4J.jpg | [12, 35, 878] | 196 | en | Back to the Future Part III | The final installment of the Back to the Future trilogy finds Marty digging the trusty DeLorean out of a mineshaft and looking for Doc in the Wild West of 1885. But when their time machine breaks down, the travelers are stranded in a land of spurs. More problems arise when Doc falls for pretty schoolteacher Clara Clayton, and Marty tangles with Buford Tannen. | 28.896 | /crzoVQnMzIrRfHtQw0tLBirNfVg.jpg | 1990-05-25 | Back to the Future Part III | False | 7.5 | 9918 |

-| 4 | /3tuWpnCTe14zZZPt6sI1W9ByOXx.jpg | [35, 10749] | 114 | en | Pretty Woman | When a millionaire wheeler-dealer enters a business contract with a Hollywood hooker Vivian Ward, he loses his heart in the bargain. | 97.953 | /hVHUfT801LQATGd26VPzhorIYza.jpg | 1990-03-23 | Pretty Woman | False | 7.5 | 7671 |

- -

- -

- -

- -

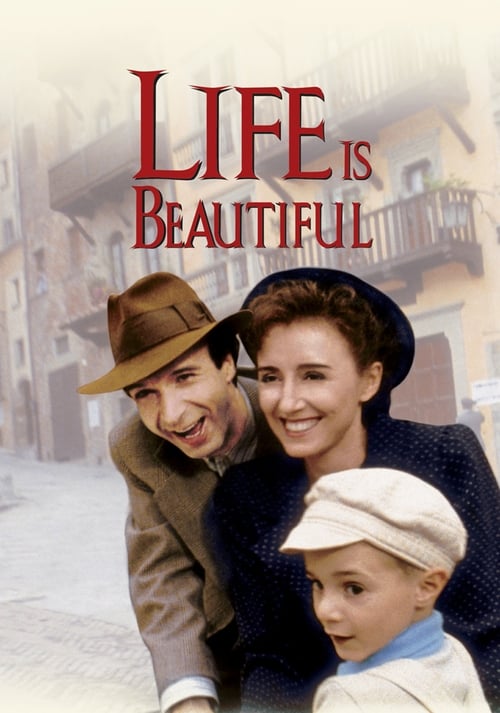

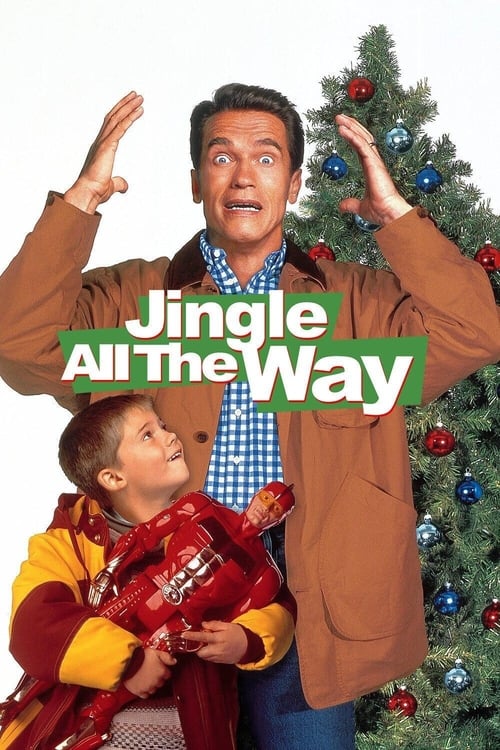

-```text

-Life Is Beautiful 1997 637

-Distance to query: 0.621

-

-Groundhog Day 1993 137

-Distance to query: 0.623

-

-Jingle All the Way 1996 9279

-Distance to query: 0.625

-

-Training Day 2001 2034

-Distance to query: 0.627

-

-Misery 1990 1700

-Distance to query: 0.632

-```

-

-

-


-

-  -

-Luckily for them, the `MovieNVDemo` collection has a `poster_title` named vector, which is primarily based on the poster design. Aesthetico's designers can search against the `poster_title` named vector to find movies whose posters are similar to their design, and then use RAG to summarize the movies found.

-

-### Code

-

-This query finds movies similar to the input image, and then uses RAG to provide insights about them.
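To illustrate what searching against a single named vector means, here is a self-contained toy sketch. Each object stores several named vectors, and the query is compared only with the vector selected by `target_vector`. The three-dimensional vectors are made-up numbers rather than real CLIP embeddings, the query vector stands in for the embedding of the input image, and cosine distance (1 - cosine similarity) is assumed as the metric.

```python
import math

# Made-up objects, each with two named vectors (real embeddings have hundreds of dimensions).
MOVIES = [
    {"title": "Movie A", "vectors": {"title": [1.0, 0.0, 0.0], "poster_title": [0.0, 1.0, 0.0]}},
    {"title": "Movie B", "vectors": {"title": [0.0, 1.0, 0.0], "poster_title": [1.0, 0.0, 0.0]}},
    {"title": "Movie C", "vectors": {"title": [0.6, 0.8, 0.0], "poster_title": [0.0, 0.6, 0.8]}},
]


def cosine_distance(a: list[float], b: list[float]) -> float:
    """1 - cosine similarity; 0.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm = math.sqrt(sum(x * x for x in a)) * math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / norm


def near_vector(objects, query_vector, target_vector, limit=2):
    """Rank objects by the distance between the query and their `target_vector` vector."""
    scored = [
        (obj["title"], cosine_distance(query_vector, obj["vectors"][target_vector]))
        for obj in objects
    ]
    scored.sort(key=lambda pair: pair[1])
    return scored[:limit]


if __name__ == "__main__":
    image_vector = [0.0, 1.0, 0.0]  # stands in for the embedding of the input image
    for tgt in ("poster_title", "title"):
        print(tgt, [title for title, _ in near_vector(MOVIES, image_vector, tgt)])
```

The same query vector returns Movie A and Movie C against `poster_title`, but Movie B and Movie C against `title`, which is why choosing the target vector matters.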

-

-

-


-

-

-

-Inception

-Mission: Impossible

-The Dark Knight

-Lost in Translation

-Independence Day

-Godzilla vs. Kong

-Fargo

-The Amazing Spider-Man

-Godzilla

- -

-

-## Film writers: evaluating ideas

-

-Now, in another project, a group of writers at *ScriptManiacs* is working on a script for a science fiction film. They have a few ideas for the movie title, and they want to see what kinds of imagery and themes are associated with each title.

-

-They can use the same collection for this. In fact, they can run multiple queries against it, each with a different `target_vector` parameter.

-

-The ScriptManiacs writers can:

-- Search against the `title` named vector to find movies with *similar titles*;

-- Search against the `overview` named vector to find movies whose *plots are similar* to their title idea; and

-

-Let's see how they could do this for the title "Chrono Tides: The Anomaly Rift".

-

-### Code

-

-This example finds entries in "MovieNVDemo" based on their similarity to "Chrono Tides: The Anomaly Rift", then instructs the large language model to find commonalities between them.

-

-Note the `for tgt_vector` loop, which allows the writers to run the same query against different named vectors.
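The loop itself is simple. This sketch shows its shape using a hypothetical `search` function that returns placeholder titles, so it runs without Weaviate. In the real example, each iteration would issue the query against the collection with the target vector set to `tgt_vector`. The prompt wording here is illustrative and not the one used in the original code.

```python
from typing import Callable


def search(query: str, target_vector: str, limit: int = 3) -> list[str]:
    """Hypothetical stand-in for a vector search; returns placeholder titles."""
    return [f"{target_vector}-match-{i}" for i in range(1, limit + 1)]


def compare_target_vectors(
    query: str,
    target_vectors: list[str],
    search_fn: Callable[[str, str, int], list[str]] = search,
    limit: int = 3,
) -> dict[str, str]:
    """Run the same query once per named vector and build one grouped prompt each."""
    prompts = {}
    for tgt_vector in target_vectors:
        titles = search_fn(query, tgt_vector, limit)
        prompts[tgt_vector] = (
            f"These movies were found by searching the '{tgt_vector}' vector for "
            f"'{query}': {', '.join(titles)}. What do they have in common?"
        )
    return prompts


if __name__ == "__main__":
    for tgt, prompt in compare_target_vectors(
        "Chrono Tides: The Anomaly Rift", ["title", "overview"]
    ).items():
        print(f"[{tgt}] {prompt}")
```

Passing `search_fn` as a parameter keeps the loop independent of the search backend, so the placeholder could be swapped for a real query function.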

-

-Search results

-Predator 2

-Inception

-Mission: Impossible

-The Dark Knight

-Lost in Translation

-Independence Day

-Godzilla vs. Kong

-Fargo

-The Amazing Spider-Man

-Godzilla

- -

-

-Lara Croft: Tomb Raider

-The Croods: A New Age

-The Twilight Saga: Breaking Dawn - Part 1

-Meg 2: The Trench

- -

-

-#### Similar overviews

-