
about MACs #1


Yes, you are right. The pruned input channels are simply set to 0s for training convenience, so the MACs are not reduced in this implementation. To achieve the theoretical computation reduction at test time, as discussed at the end of the paper, a dynamic version of the "index layer" should be applied, which gathers the selected channels to form the input for the group convolution. We also suggest referring to the discussion of the index layer in CondenseNet and FLGC.
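To make the distinction concrete, here is a minimal pure-Python sketch. It assumes a single head, a 1×1 (pointwise) convolution and a fixed 0/1 channel mask. The function names are hypothetical and only stand in for the real group-convolution layers. Zero-masking still feeds every channel through the dense convolution, so the MAC count is unchanged. Gathering the selected channels first (the role of an index layer) gives the same output with proportionally fewer MACs.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]  # indexed as [channel][pixel] or [out_channel][in_channel]


def masked_pointwise_conv(x: Matrix, weight: Matrix, mask: Sequence[int]) -> Tuple[Matrix, int]:
    """Training-style path: zero the pruned channels, then run the dense 1x1 conv.

    Every input channel is still multiplied, so the MAC count equals the dense one.
    """
    pixels = len(x[0])
    out = [[0.0] * pixels for _ in weight]
    macs = 0
    for o, w_row in enumerate(weight):
        for c, x_c in enumerate(x):
            for j in range(pixels):
                out[o][j] += w_row[c] * (x_c[j] * mask[c])  # zeros are still multiplied
                macs += 1
    return out, macs


def gathered_pointwise_conv(x: Matrix, weight: Matrix, mask: Sequence[int]) -> Tuple[Matrix, int]:
    """Index-layer path: gather the selected channels and matching filter columns first."""
    idx = [c for c, m in enumerate(mask) if m]
    x_sel = [x[c] for c in idx]
    w_sel = [[w_row[c] for c in idx] for w_row in weight]
    pixels = len(x[0])
    out = [[0.0] * pixels for _ in weight]
    macs = 0
    for o, w_row in enumerate(w_sel):
        for t, x_c in enumerate(x_sel):
            for j in range(pixels):
                out[o][j] += w_row[t] * x_c[j]
                macs += 1
    return out, macs


if __name__ == "__main__":
    x = [[1.0, 2.0, 3.0], [4.0, 5.0, 6.0], [7.0, 8.0, 9.0], [1.0, 0.0, 2.0]]
    weight = [[1.0, 2.0, 3.0, 4.0], [0.5, -1.0, 2.0, 1.0]]
    mask = [1, 0, 1, 0]
    dense_out, dense_macs = masked_pointwise_conv(x, weight, mask)
    sparse_out, sparse_macs = gathered_pointwise_conv(x, weight, mask)
    print(dense_out == sparse_out, dense_macs, sparse_macs)  # prints: True 24 12
```

In PyTorch the gather step would typically be `torch.index_select` (or advanced indexing) along the channel dimension. The explicit loops here only serve to make the MAC counting visible.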


How can a dynamic version of the "index layer" be achieved? I mean, how can it be implemented in PyTorch? The index layer in CondenseNet and FLGC is "static", while in DGC it is "dynamic". So how can the theoretical computation reduction be achieved at test time?


@Sunshine-Ye In the PyTorch framework, you could use something like `index_select` once you have the indices of the selected channels. This works when the batch size is 1. For a batch size > 1, it is indeed a problem, at least under the current PyTorch framework, because each sample has its own set of filters corresponding to its selected channels. We are also looking forward to new solutions.
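The batch-size problem can be illustrated with a minimal pure-Python sketch. It again assumes a 1×1 convolution and, hypothetically, per-sample saliency scores from which the top-k channels are kept. The helper names and the top-k rule are illustrative assumptions, not the DGC code. Because each sample selects different channels, each one needs its own slice of the filter matrix. As a result, the gather and the convolution end up in a per-sample loop rather than one batched call.

```python
from typing import List, Sequence, Tuple

Matrix = List[List[float]]


def top_k_indices(scores: Sequence[float], k: int) -> List[int]:
    """Indices of the k highest-scoring channels, returned in ascending order."""
    ranked = sorted(range(len(scores)), key=lambda c: scores[c], reverse=True)
    return sorted(ranked[:k])


def dynamic_gather_conv(
    batch_x: List[Matrix], batch_scores: List[Sequence[float]], weight: Matrix, k: int
) -> Tuple[List[Matrix], List[List[int]], int]:
    """Per-sample dynamic index layer followed by a 1x1 conv (hypothetical helper).

    batch_x: [N][C_in][P], batch_scores: [N][C_in], weight: [C_out][C_in].
    Returns the outputs, the indices each sample selected, and the total MACs.
    """
    outputs, selections, macs = [], [], 0
    for x, scores in zip(batch_x, batch_scores):  # no shared index set, so loop per sample
        idx = top_k_indices(scores, k)
        w_sel = [[w_row[c] for c in idx] for w_row in weight]  # per-sample filter slice
        pixels = len(x[0])
        out = [
            [sum(w_row[t] * x[c][j] for t, c in enumerate(idx)) for j in range(pixels)]
            for w_row in w_sel
        ]
        macs += len(weight) * len(idx) * pixels
        outputs.append(out)
        selections.append(idx)
    return outputs, selections, macs


if __name__ == "__main__":
    x = [[1, 2], [3, 4], [5, 6], [7, 8]]
    scores = [[0.9, 0.1, 0.8, 0.2], [0.1, 0.7, 0.2, 0.9]]
    outs, sel, macs = dynamic_gather_conv([x, x], scores, [[1, 10, 100, 1000]], k=2)
    print(sel, outs, macs)  # prints: [[0, 2], [1, 3]] [[[501, 602]], [[7030, 8040]]] 8
```

The two samples share the same activations but select different channels, so they use different filter columns. Vectorizing this loop over the batch is exactly the open problem described in the comment above.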

Thanks to the authors for this promising work!

In the paper, the convolution in the i-th head is conducted with only the selected emphasized channels and the corresponding filters:

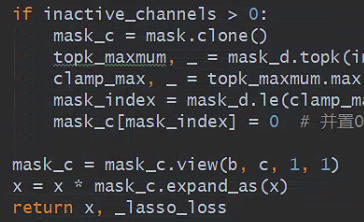

In the code, however, the unselected channels are still computed with their corresponding filters; they are simply masked with 0s:

I would like to know whether the actual MACs (or FLOPs, i.e., the computation cost) are effectively reduced in the PyTorch implementation.
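A back-of-the-envelope count shows what is at stake. This sketch assumes the usual MAC count of H_out · W_out · K² · C_in · C_out for a K×K convolution. It also assumes, hypothetically, that each head produces C_out / heads output channels from its own channel selection. The layer sizes are made-up example values, and the saliency-generator overhead is ignored. With zero-masking, every head still multiplies all C_in channels, so only gathering reduces the count by the keep ratio.

```python
def conv_macs(h_out: int, w_out: int, kernel: int, c_in_used: int, c_out: int) -> int:
    """Multiply-accumulates of a K x K convolution over c_in_used input channels."""
    return h_out * w_out * kernel * kernel * c_in_used * c_out


def dgc_style_macs(
    h_out: int, w_out: int, kernel: int, c_in: int, c_out: int,
    heads: int, keep_ratio: float, gathered: bool,
) -> int:
    """Rough MACs of a multi-head dynamic layer (saliency generators ignored).

    Assumption: each head emits c_out // heads channels. When the pruned channels
    are only zero-masked, every head still convolves all c_in channels.
    """
    c_in_used = int(c_in * keep_ratio) if gathered else c_in
    return heads * conv_macs(h_out, w_out, kernel, c_in_used, c_out // heads)


if __name__ == "__main__":
    masked = dgc_style_macs(14, 14, 3, 256, 256, heads=4, keep_ratio=0.5, gathered=False)
    gathered = dgc_style_macs(14, 14, 3, 256, 256, heads=4, keep_ratio=0.5, gathered=True)
    print(masked, gathered)  # prints: 115605504 57802752
```

The masked count equals that of a plain dense 3×3 convolution with the same sizes. This matches the authors' answer that the MACs are not reduced unless an index layer is used.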
