diff --git a/.DS_Store b/.DS_Store

new file mode 100644

index 000000000..24d92646f

Binary files /dev/null and b/.DS_Store differ

diff --git a/LICENSE b/LICENSE

new file mode 100644

index 000000000..261eeb9e9

--- /dev/null

+++ b/LICENSE

@@ -0,0 +1,201 @@

+ Apache License

+ Version 2.0, January 2004

+ http://www.apache.org/licenses/

+

+ TERMS AND CONDITIONS FOR USE, REPRODUCTION, AND DISTRIBUTION

+

+ 1. Definitions.

+

+ "License" shall mean the terms and conditions for use, reproduction,

+ and distribution as defined by Sections 1 through 9 of this document.

+

+ "Licensor" shall mean the copyright owner or entity authorized by

+ the copyright owner that is granting the License.

+

+ "Legal Entity" shall mean the union of the acting entity and all

+ other entities that control, are controlled by, or are under common

+ control with that entity. For the purposes of this definition,

+ "control" means (i) the power, direct or indirect, to cause the

+ direction or management of such entity, whether by contract or

+ otherwise, or (ii) ownership of fifty percent (50%) or more of the

+ outstanding shares, or (iii) beneficial ownership of such entity.

+

+ "You" (or "Your") shall mean an individual or Legal Entity

+ exercising permissions granted by this License.

+

+ "Source" form shall mean the preferred form for making modifications,

+ including but not limited to software source code, documentation

+ source, and configuration files.

+

+ "Object" form shall mean any form resulting from mechanical

+ transformation or translation of a Source form, including but

+ not limited to compiled object code, generated documentation,

+ and conversions to other media types.

+

+ "Work" shall mean the work of authorship, whether in Source or

+ Object form, made available under the License, as indicated by a

+ copyright notice that is included in or attached to the work

+ (an example is provided in the Appendix below).

+

+ "Derivative Works" shall mean any work, whether in Source or Object

+ form, that is based on (or derived from) the Work and for which the

+ editorial revisions, annotations, elaborations, or other modifications

+ represent, as a whole, an original work of authorship. For the purposes

+ of this License, Derivative Works shall not include works that remain

+ separable from, or merely link (or bind by name) to the interfaces of,

+ the Work and Derivative Works thereof.

+

+ "Contribution" shall mean any work of authorship, including

+ the original version of the Work and any modifications or additions

+ to that Work or Derivative Works thereof, that is intentionally

+ submitted to Licensor for inclusion in the Work by the copyright owner

+ or by an individual or Legal Entity authorized to submit on behalf of

+ the copyright owner. For the purposes of this definition, "submitted"

+ means any form of electronic, verbal, or written communication sent

+ to the Licensor or its representatives, including but not limited to

+ communication on electronic mailing lists, source code control systems,

+ and issue tracking systems that are managed by, or on behalf of, the

+ Licensor for the purpose of discussing and improving the Work, but

+ excluding communication that is conspicuously marked or otherwise

+ designated in writing by the copyright owner as "Not a Contribution."

+

+ "Contributor" shall mean Licensor and any individual or Legal Entity

+ on behalf of whom a Contribution has been received by Licensor and

+ subsequently incorporated within the Work.

+

+ 2. Grant of Copyright License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ copyright license to reproduce, prepare Derivative Works of,

+ publicly display, publicly perform, sublicense, and distribute the

+ Work and such Derivative Works in Source or Object form.

+

+ 3. Grant of Patent License. Subject to the terms and conditions of

+ this License, each Contributor hereby grants to You a perpetual,

+ worldwide, non-exclusive, no-charge, royalty-free, irrevocable

+ (except as stated in this section) patent license to make, have made,

+ use, offer to sell, sell, import, and otherwise transfer the Work,

+ where such license applies only to those patent claims licensable

+ by such Contributor that are necessarily infringed by their

+ Contribution(s) alone or by combination of their Contribution(s)

+ with the Work to which such Contribution(s) was submitted. If You

+ institute patent litigation against any entity (including a

+ cross-claim or counterclaim in a lawsuit) alleging that the Work

+ or a Contribution incorporated within the Work constitutes direct

+ or contributory patent infringement, then any patent licenses

+ granted to You under this License for that Work shall terminate

+ as of the date such litigation is filed.

+

+ 4. Redistribution. You may reproduce and distribute copies of the

+ Work or Derivative Works thereof in any medium, with or without

+ modifications, and in Source or Object form, provided that You

+ meet the following conditions:

+

+ (a) You must give any other recipients of the Work or

+ Derivative Works a copy of this License; and

+

+ (b) You must cause any modified files to carry prominent notices

+ stating that You changed the files; and

+

+ (c) You must retain, in the Source form of any Derivative Works

+ that You distribute, all copyright, patent, trademark, and

+ attribution notices from the Source form of the Work,

+ excluding those notices that do not pertain to any part of

+ the Derivative Works; and

+

+ (d) If the Work includes a "NOTICE" text file as part of its

+ distribution, then any Derivative Works that You distribute must

+ include a readable copy of the attribution notices contained

+ within such NOTICE file, excluding those notices that do not

+ pertain to any part of the Derivative Works, in at least one

+ of the following places: within a NOTICE text file distributed

+ as part of the Derivative Works; within the Source form or

+ documentation, if provided along with the Derivative Works; or,

+ within a display generated by the Derivative Works, if and

+ wherever such third-party notices normally appear. The contents

+ of the NOTICE file are for informational purposes only and

+ do not modify the License. You may add Your own attribution

+ notices within Derivative Works that You distribute, alongside

+ or as an addendum to the NOTICE text from the Work, provided

+ that such additional attribution notices cannot be construed

+ as modifying the License.

+

+ You may add Your own copyright statement to Your modifications and

+ may provide additional or different license terms and conditions

+ for use, reproduction, or distribution of Your modifications, or

+ for any such Derivative Works as a whole, provided Your use,

+ reproduction, and distribution of the Work otherwise complies with

+ the conditions stated in this License.

+

+ 5. Submission of Contributions. Unless You explicitly state otherwise,

+ any Contribution intentionally submitted for inclusion in the Work

+ by You to the Licensor shall be under the terms and conditions of

+ this License, without any additional terms or conditions.

+ Notwithstanding the above, nothing herein shall supersede or modify

+ the terms of any separate license agreement you may have executed

+ with Licensor regarding such Contributions.

+

+ 6. Trademarks. This License does not grant permission to use the trade

+ names, trademarks, service marks, or product names of the Licensor,

+ except as required for reasonable and customary use in describing the

+ origin of the Work and reproducing the content of the NOTICE file.

+

+ 7. Disclaimer of Warranty. Unless required by applicable law or

+ agreed to in writing, Licensor provides the Work (and each

+ Contributor provides its Contributions) on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or

+ implied, including, without limitation, any warranties or conditions

+ of TITLE, NON-INFRINGEMENT, MERCHANTABILITY, or FITNESS FOR A

+ PARTICULAR PURPOSE. You are solely responsible for determining the

+ appropriateness of using or redistributing the Work and assume any

+ risks associated with Your exercise of permissions under this License.

+

+ 8. Limitation of Liability. In no event and under no legal theory,

+ whether in tort (including negligence), contract, or otherwise,

+ unless required by applicable law (such as deliberate and grossly

+ negligent acts) or agreed to in writing, shall any Contributor be

+ liable to You for damages, including any direct, indirect, special,

+ incidental, or consequential damages of any character arising as a

+ result of this License or out of the use or inability to use the

+ Work (including but not limited to damages for loss of goodwill,

+ work stoppage, computer failure or malfunction, or any and all

+ other commercial damages or losses), even if such Contributor

+ has been advised of the possibility of such damages.

+

+ 9. Accepting Warranty or Additional Liability. While redistributing

+ the Work or Derivative Works thereof, You may choose to offer,

+ and charge a fee for, acceptance of support, warranty, indemnity,

+ or other liability obligations and/or rights consistent with this

+ License. However, in accepting such obligations, You may act only

+ on Your own behalf and on Your sole responsibility, not on behalf

+ of any other Contributor, and only if You agree to indemnify,

+ defend, and hold each Contributor harmless for any liability

+ incurred by, or claims asserted against, such Contributor by reason

+ of your accepting any such warranty or additional liability.

+

+ END OF TERMS AND CONDITIONS

+

+ APPENDIX: How to apply the Apache License to your work.

+

+ To apply the Apache License to your work, attach the following

+ boilerplate notice, with the fields enclosed by brackets "[]"

+ replaced with your own identifying information. (Don't include

+ the brackets!) The text should be enclosed in the appropriate

+ comment syntax for the file format. We also recommend that a

+ file or class name and description of purpose be included on the

+ same "printed page" as the copyright notice for easier

+ identification within third-party archives.

+

+ Copyright [yyyy] [name of copyright owner]

+

+ Licensed under the Apache License, Version 2.0 (the "License");

+ you may not use this file except in compliance with the License.

+ You may obtain a copy of the License at

+

+ http://www.apache.org/licenses/LICENSE-2.0

+

+ Unless required by applicable law or agreed to in writing, software

+ distributed under the License is distributed on an "AS IS" BASIS,

+ WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+ See the License for the specific language governing permissions and

+ limitations under the License.

diff --git a/README.md b/README.md

index 81463bfd7..c2658897e 100644

--- a/README.md

+++ b/README.md

@@ -1,17 +1,350 @@

-# intel-oneAPI

+### Team Name - C5ailabs

+### Problem Statement - Open Innovation in Education

+### Team Leader Email - rohit.sroch@course5i.com

+### Other Team Mates Email - (mohan.rachumallu@course5i.com, sujith.kumar@course5i.com, shubham.jain@course5i.com)

-#### Team Name -

-#### Problem Statement -

-#### Team Leader Email -

+### PPT - https://github.com/rohitc5/intel-oneAPI/blob/main/ppt/Intel-oneAPI-Hackathon-Implementation.pdf

+### Medium Article - https://medium.com/@rohitsroch/leap-learning-enhancement-and-assistance-platform-powered-by-intel-oneapi-ai-analytics-toolkit-656b5c9d2e0c

+### Benchmark Results - https://github.com/rohitc5/intel-oneAPI/tree/main/benchmark on Intel® Dev Cloud machine [Intel® Xeon® Platinum 8480+ (4th Gen: Sapphire Rapids) - 224v CPUs 503GB RAM]

+### Demo Video - https://www.youtube.com/watch?v=CXkR5tklZm0

-## A Brief of the Prototype:

- This section must include UML Daigrms and prototype description

+

+

+# LEAP

+

+Intel® oneAPI Hackathon 2023 - Prototype Implementation for our LEAP Solution

+

+[License: Apache 2.0](https://opensource.org/license/apache-2-0/)

+[Releases](https://github.com/rohitc5/intel-oneAPI/releases)

+[Open in Dev Containers](https://vscode.dev/redirect?url=vscode://ms-vscode-remote.remote-containers/cloneInVolume?url=https://github.com/rohitc5/intel-oneAPI)

+[Open in GitHub Codespaces](https://codespaces.new/rohitc5/intel-oneAPI)

+[Star History](https://star-history.com/#rohitc5/intel-oneAPI)

+

+# A Brief of the Prototype:

+

+#### INSPIRATION

+

+

+MOOCs (Massive Open Online Courses) have surged in popularity in recent years, particularly during the COVID-19 pandemic. These

+online courses are typically free or low-cost, making education more accessible worldwide.

+

+Online learning remains crucial for students after the pandemic because of its flexibility, accessibility, and quality. The learning experience is still not optimal, however: when students have doubts, they must repeatedly go through videos and documents or ask in a forum, which may not be effective because of the following challenges:

+

+- Resolving doubts can be a time-consuming process.

+- It can be challenging to sift through a pile of lengthy videos or documents to find relevant information.

+- Teachers or instructors may not be available around the clock to offer guidance.

+

+#### PROPOSED SOLUTION

+

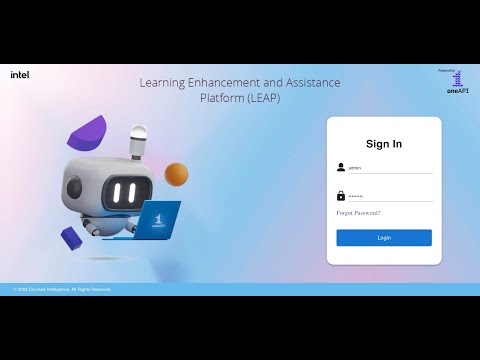

+To mitigate the above challenges, we propose LEAP (Learning Enhancement and Assistance Platform), which is an AI-powered

+platform designed to enhance student learning outcomes and provide equitable access to quality education. The platform comprises two main features that aim to improve the overall learning experience of the student:

+

+❑ Ask Question/Doubt: This allows students to ask real-time questions about the provided reading material, which includes videos and

+documents, and get back answers along with the exact timestamp in the video clip containing the answer (so that students don’t have to

+always scroll through). It also supports multilingual questions, ensuring that language barriers do not hinder a student's learning

+process.

+

+❑ Interactive Conversational AI Examiner: This allows students to evaluate their knowledge of the learned topic through an AI

+examiner conducting a viva after each learning session. The AI examiner starts by asking a question and always tries to motivate and provide

+hints to the student to arrive at the correct answer, enhancing student engagement and motivation.

+

+# Detailed LEAP Process Flow:

+

+

+

+# Technology Stack:

+

+- [Intel® oneAPI AI Analytics Toolkit](https://www.intel.com/content/www/us/en/developer/tools/oneapi/ai-analytics-toolkit-download.html) Tech Stack

+

+

+

+1. [Intel® Extension for PyTorch](https://github.com/intel/intel-extension-for-pytorch): Used for our Multilingual Extractive QA model Training/Inference optimization.

+2. [Intel® Neural Compressor](https://github.com/intel/neural-compressor): Used for Multilingual Extractive QA model Inference optimization.

+3. [Intel® Extension for Scikit-Learn](https://github.com/intel/scikit-learn-intelex): Used for Multilingual Embedding model Training/Inference optimization.

+4. [Intel® Distribution of Modin](https://github.com/modin-project/modin): Used for basic initial data analysis/EDA.

+

+- Prototype webapp Tech Stack

+

+

+

+# Demo Video

+

+[](https://www.youtube.com/watch?v=CXkR5tklZm0)

+

+# Step-by-Step Code Execution Instructions:

+

+### Quick Setup Option

+

+- Make sure you have already installed docker (https://docs.docker.com/get-docker/) and docker-compose (https://docs.docker.com/compose/)

+

+- Clone the Repository

+```bash

+ $ git clone https://github.com/rohitc5/intel-oneAPI/

+ $ cd intel-oneAPI

+

+```

+- Start the LEAP RESTful service to consume both components (Ask Question/Doubt and Interactive Conversational AI Examiner) as a REST API.

+Also start the webapp demo built using Streamlit.

+

+```bash

+ # copy the dataset

+ $ cp -r ./dataset webapp/

+

+ # build using docker compose

+ $ docker compose build

+

+ # start the services

+ $ docker compose up

+```

+

+- Go to http://localhost:8502

+

+

+### Manual Setup Option

+

+- Clone the Repository

+

+```bash

+ $ git clone https://github.com/rohitc5/intel-oneAPI/

+ $ cd intel-oneAPI

+```

+

+- Train/Fine-tune the Extractive QA Multilingual Model (Part of our **Ask Question/Doubt** Component).

+Please note that by default we use IndicBERT (https://huggingface.co/ai4bharat/indic-bert) as the backbone (BERT topology)

+and fine-tune it on the SQuAD v1 dataset. IndicBERT is a multilingual ALBERT model pretrained exclusively on 12 major Indian languages. It is pretrained on a novel monolingual corpus of around 9 billion tokens and subsequently evaluated on a set of diverse tasks. Fine-tuning on the SQuAD v1 (English) dataset therefore results in cross-lingual

+transfer to the other 11 Indian languages.

+

+Here is the architecture of `Ask Question/Doubt` component:

+

+

+

+```bash

+ $ cd nlp/question_answering

+

+ # install dependencies

+ $ pip install -r requirements.txt

+

+ # modify the fine-tuning params mentioned in finetune_qa.sh

+ $ vi finetune_qa.sh

+

+ ''''

+ export MODEL_NAME_OR_PATH=ai4bharat/indic-bert

+ export BACKBONE_NAME=indic-mALBERT-uncased

+ export DATASET_NAME=squad # squad, squad_v2 (pass --version_2_with_negative)

+ export TASK_NAME=qa

+

+ # hyperparameters

+ export SEED=42

+ export BATCH_SIZE=32

+ export MAX_SEQ_LENGTH=384

+ export NUM_TRAIN_EPOCHS=5

+

+ export KEEP_ACCENTS=True

+ export DO_LOWER_CASE=True

+ ...

+

+ ''''

+

+ # start the training after modifying params

+ $ bash finetune_qa.sh

+```

+

+- Perform post-training optimization of the Extractive QA model using IPEX (Intel® Extension for PyTorch) and Intel® Neural Compressor, then run the benchmark for comparison with PyTorch(Base)-FP32

+

+```bash

+ # modify the params in pot_benchmark_qa.sh

+ $ vi pot_benchmark_qa.sh

+

+ ''''

+ export MODEL_NAME_OR_PATH=artifacts/qa/squad/indic-mALBERT-uncased

+ export BACKBONE_NAME=indic-mALBERT-uncased

+ export DATASET_NAME=squad # squad, squad_v2 (pass --version_2_with_negative)

+ export TASK_NAME=qa

+ export USE_OPTIMUM=True # whether to use hugging face wrapper optimum around intel neural compressor

+

+ # other parameters

+ export BATCH_SIZE=8

+ export MAX_SEQ_LENGTH=384

+ export DOC_STRIDE=128

+ export KEEP_ACCENTS=True

+ export DO_LOWER_CASE=True

+ export MAX_EVAL_SAMPLES=200

+

+ export TUNE=True # whether to tune or not

+ export PTQ_METHOD="static_int8" # "dynamic_int8", "static_int8", "static_smooth_int8"

+ export BACKEND="default" # default, ipex

+ export ITERS=100

+ ...

+

+ ''''

-## Tech Stack:

- List Down all technologies used to Build the prototype **Clearly mentioning Intel® AI Analytics Toolkits, it's libraries and the SYCL/DCP++ Libraries used**

-

-## Step-by-Step Code Execution Instructions:

- This Section must contain set of instructions required to clone and run the prototype, so that it can be tested and deeply analysed

+ $ bash pot_benchmark_qa.sh

+

+ # Please note that the above shell script can perform optimization using IPEX to get the Pytorch-(IPEX)-FP32 model,

+ # or it can perform optimization/quantization using Intel® Neural Compressor to get the Static-QAT-INT8 and

+ # Static-Smooth-QAT-INT8 models. Moreover, you can choose the backend as `default` or `ipex` for INT8 models.

+

+```

+

+- Run quick inference to test the model output

+

+```bash

+ $ python run_qa_inference.py --model_name_or_path=[FP32 or INT8 finetuned model] --model_type=["vanilla_fp32" or "quantized_int8"] --do_lower_case --keep_accents --ipex_enable

+

+```

+

+- Train/Infer/Benchmark the TfidfVectorizer embedding model for Scikit-Learn (Base) vs Intel® Extension for Scikit-Learn

+

+```bash

+ $ cd nlp/feature_extractor

+

+ # train (.fit_transform func), infer (.transform func) and perform benchmark

+ $ python run_benchmark_tfidf.py --course_dir=../../dataset/courses --is_preprocess

+

+ # now rerun but turn on Intel® Extension for Scikit-Learn

+ $ python run_benchmark_tfidf.py --course_dir=../../dataset/courses --is_preprocess --intel_scikit_learn_enabled

+```

+

+- Setup LEAP API

+

+```python

+ $ cd api

+

+ # install dependencies

+ $ pip install -r requirements.txt

+

+ $ cd src/

+

+ # create a local vector store of course content for faster retrieval during inference

+ # Here we get semantic or syntactic (TFIDF) embedding of each content from course and index it.

+ # Please Note that, we use FAISS (local vector store) (https://github.com/facebookresearch/faiss) to create a course content index

+ $ python core/create_vector_index.py --course_dir=../../dataset/courses --emb_model_type=[semantic or syntactic] \

+ --model_name_or_path=[Hugging face model name for semantic] --keep_accents

-## What I Learned:

- Write about the biggest learning you had while developing the prototype

+ # update config.py

+ $ cd ../

+ $ vi config.py

+

+ ''''

+ ASK_DOUBT_CONFIG = {

+ # hugging face BERT topology model name

+ "emb_model_name_or_path": "ai4bharat/indic-bert",

+ "emb_model_type": "semantic", #options: syntactic, semantic

+

+ # finetuned Extractive QA model path previously done

+ "qa_model_name_or_path": "rohitsroch/indic-mALBERT-squad-v2",

+ "qa_model_type": "vanilla_fp32", #options: vanilla_fp32, quantized_int8

+

+ # faiss index file path created previously

+ "faiss_vector_index_path": "artifacts/index/faiss_emb_index"

+ }

+ ...

+

+ ''''

+```

+Please note that we have already released our fine-tuned Extractive QA model on the Hugging Face Hub (https://huggingface.co/rohitsroch/indic-mALBERT-squad-v2)

+

+- For our **Interactive Conversational AI Examiner** component, we use an instruction-tuned or RLHF large language model (LLM) based on recent advancements in generative AI. You can update the API configuration by specifying hf_model_name (the LLM name available on the Hugging Face Hub). Please check out https://huggingface.co/models for LLMs.

+

+Here is the architecture of `Interactive Conversational AI Examiner` component:

+

+

+

+We can use several open-access instruction-tuned LLMs from the Hugging Face Hub, such as MPT-7B-instruct (https://huggingface.co/mosaicml/mpt-7b-instruct) and Falcon-7B-instruct (https://huggingface.co/TheBloke/falcon-7b-instruct-GPTQ) (see https://huggingface.co/models for more options).

+You need to set `llm_method` to `hf_pipeline` for this. For a performance gain, we can use an INT8 quantized model optimized with Intel® Neural Compressor (see https://huggingface.co/Intel).

+

+Moreover, for much faster inference we can use open-access instruction-tuned adapters (LoRA) with LLaMA as the backbone from the Hugging Face Hub, such as QLoRA

+(https://huggingface.co/timdettmers). You need to set `llm_method` to `hf_peft` for this. Please see the **QLoRA** research paper (https://arxiv.org/pdf/2305.14314.pdf) for more details.

+

+Please Note that for fun 😄, we also provide usage of Azure OpenAI Service to use models like GPT3 over paid subscription API. You just need to provide `azure_deployment_name`, set `llm_method` as `azure_gpt3` in the below configuration and then add ``

+

+```python

+

+ ''''

+ AI_EXAMINER_CONFIG = {

+ "llm_method": "azure_gpt3", #options: azure_gpt3, hf_pipeline, hf_peft

+

+ "azure_gpt3":{

+ "deployment_name": "text-davinci-003-prod",

+ ...

+ },

+ "hf_pipeline":{

+ "model_name": "tiiuae/falcon-7b-instruct",

+ ...

+ },

+ "hf_peft":{

+ "model_name": "huggyllama/llama-7b",

+ "adapter_name": "timdettmers/qlora-alpaca-7b",

+ ...

+ }

+ }

+

+ # provide your api key

+ os.environ["OPENAI_API_KEY"] = ""

+

+ ''''

+```

+

+- Start the API server

+

+```bash

+ $ cd api/src/

+

+ # start the gunicorn server

+ $ bash start.sh

+```

+

+- Start the Streamlit webapp demo

+

+```python

+ $ cd webapp

+

+ # update the config

+ $ vi config.py

+

+ ''''

+ # set the correct dataset path

+ DATASET_COURSE_BASE_DIR = "../dataset/courses/"

+

+ API_CONFIG = {

+ "server_host": "localhost", # set the server host

+ "server_port": "8500", # set the server port

+

+ }

+ ...

+

+ ''''

+

+ # install dependencies

+ $ pip install -r requirements.txt

+

+ $ streamlit run app.py

+

+```

+

+- Go to http://localhost:8502

+

+# Benchmark Results with Intel® oneAPI AI Analytics Toolkit

+

+- We follow the process flow below to optimize the models from both components

+

+

+

+- We have already added several benchmark results showing how the Intel® oneAPI AI Analytics Toolkit compares to the baseline. Please go to the `benchmark` folder to view the results. Please note that the shared results are based

+on the provided Intel® Dev Cloud machine *[Intel® Xeon® Platinum 8480+ (4th Gen: Sapphire Rapids) - 224v CPUs 503GB RAM]*

+

+# Comprehensive Implementation PPT & Article

+

+- Please go to PPT 🎉 https://github.com/rohitc5/intel-oneAPI/blob/main/ppt/Intel-oneAPI-Hackathon-Implementation.pdf for more details

+

+- Please go to Article 📄 https://medium.com/@rohitsroch/leap-learning-enhancement-and-assistance-platform-powered-by-intel-oneapi-ai-analytics-toolkit-656b5c9d2e0c for more details

+

+# What we learned

+

+

+

+✅ **Utilizing the Intel® AI Analytics Toolkit**: By utilizing the Intel® AI Analytics Toolkit, developers can leverage familiar Python* tools and frameworks to accelerate the entire data science and analytics process on Intel® architecture. This toolkit incorporates oneAPI libraries for optimized low-level computations, ensuring maximum performance from data preprocessing to deep learning and machine learning tasks. Additionally, it facilitates efficient model development through interoperability.

+

+✅ **Seamless Adaptability**: The Intel® AI Analytics Toolkit enables smooth integration with machine learning and deep learning workloads, requiring minimal modifications.

+

+✅ **Fostered Collaboration**: The development of such an application likely involved collaboration with a team comprising experts from diverse fields, including deep learning and data analysis. This experience likely emphasized the significance of collaborative efforts in attaining shared objectives.
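
Note on the README's `Setup LEAP API` step: it describes embedding each piece of course content (the `syntactic` option being TF-IDF) and indexing it for retrieval. The sketch below illustrates that idea with the standard library only. It is not the repository's implementation, which according to the README uses scikit-learn and FAISS. The helper names (`tokenize`, `to_vector`, `build_tfidf_index`, `search`) and the sample passages are assumptions made for illustration, and the IDF uses the smoothed formula `ln((1 + n) / (1 + df)) + 1`.

```python
import math
import re
from collections import Counter


def tokenize(text):
    """Lowercase the text and split it into word tokens (illustrative tokenizer)."""
    return re.findall(r"\w+", text.lower())


def to_vector(tokens, idf):
    """Build an L2-normalised sparse TF-IDF vector (dict) from tokens known to `idf`."""
    counts = Counter(t for t in tokens if t in idf)
    vec = {t: c * idf[t] for t, c in counts.items()}
    norm = math.sqrt(sum(w * w for w in vec.values()))
    return {t: w / norm for t, w in vec.items()} if norm else {}


def build_tfidf_index(passages):
    """Return (idf, vectors): smoothed IDF weights and one vector per passage."""
    tokenized = [tokenize(p) for p in passages]
    n_docs = len(tokenized)
    doc_freq = Counter(term for tokens in tokenized for term in set(tokens))
    idf = {t: math.log((1 + n_docs) / (1 + df)) + 1.0 for t, df in doc_freq.items()}
    return idf, [to_vector(tokens, idf) for tokens in tokenized]


def search(query, passages, idf, vectors, top_k=1):
    """Rank passages by cosine similarity to the query; return (passage, score) pairs."""
    q = to_vector(tokenize(query), idf)
    scores = [sum(w * vec.get(t, 0.0) for t, w in q.items()) for vec in vectors]
    ranked = sorted(range(len(passages)), key=lambda i: scores[i], reverse=True)
    return [(passages[i], scores[i]) for i in ranked[:top_k]]


# Hypothetical course snippets standing in for the dataset under dataset/courses.
PASSAGES = [
    "Gradient descent updates the weights in the direction of the negative gradient.",
    "A decision tree splits the data on the feature with the highest information gain.",
    "Tokenization breaks a sentence into words or subword units.",
]
IDF, VECTORS = build_tfidf_index(PASSAGES)
```

Calling `search("how does gradient descent change the weights", PASSAGES, IDF, VECTORS)` ranks the first passage highest. A vector store such as FAISS replaces the linear scan in `search` once the number of passages grows.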

diff --git a/api/.DS_Store b/api/.DS_Store

new file mode 100644

index 000000000..6bf478d2f

Binary files /dev/null and b/api/.DS_Store differ

diff --git a/api/Dockerfile b/api/Dockerfile

new file mode 100644

index 000000000..f6bae43f4

--- /dev/null

+++ b/api/Dockerfile

@@ -0,0 +1,17 @@

+FROM python:3.9

+

+RUN apt-get update && apt-get -y install \

+ build-essential libpq-dev ffmpeg libsm6 libxext6 wget

+

+WORKDIR /opt

+COPY requirements.txt requirements.txt

+

+RUN pip install --upgrade pip

+

+RUN pip install -r requirements.txt

+

+COPY src/ .

+

+EXPOSE 8500

+

+ENTRYPOINT [ "bash", "start.sh" ]

diff --git a/api/README.md b/api/README.md

new file mode 100644

index 000000000..e69de29bb

diff --git a/api/requirements.txt b/api/requirements.txt

new file mode 100644

index 000000000..a9e5b053e

--- /dev/null

+++ b/api/requirements.txt

@@ -0,0 +1,21 @@

+fastapi==0.96.0

+uvicorn[standard]==0.22.0

+gunicorn==20.1.0

+pydantic==1.10.8

+python-multipart==0.0.6

+Cython==0.29.35

+pandas==2.0.2

+tiktoken==0.4.0

+langchain==0.0.191

+openai==0.27.8

+faiss-cpu==1.7.4

+nltk==3.8.1

+torch==2.0.1

+transformers==4.29.2

+peft==0.3.0

+optimum[neural-compressor]==1.8.7

+neural-compressor==2.1.1

+webvtt-py==0.4.6

+intel_extension_for_pytorch==2.0.100

+scikit-learn==1.2.2

+scikit-learn-intelex==2023.1.1

diff --git a/api/src/.DS_Store b/api/src/.DS_Store

new file mode 100644

index 000000000..9bcbe9c46

Binary files /dev/null and b/api/src/.DS_Store differ

diff --git a/api/src/__init__.py b/api/src/__init__.py

new file mode 100644

index 000000000..e69de29bb

diff --git a/api/src/artifacts/.DS_Store b/api/src/artifacts/.DS_Store

new file mode 100644

index 000000000..83bd1a599

Binary files /dev/null and b/api/src/artifacts/.DS_Store differ

diff --git a/api/src/artifacts/index/.gitignore b/api/src/artifacts/index/.gitignore

new file mode 100644

index 000000000..e69de29bb

diff --git a/api/src/artifacts/index/faiss_emb_index/index.faiss b/api/src/artifacts/index/faiss_emb_index/index.faiss

new file mode 100755

index 000000000..7211b76c6

Binary files /dev/null and b/api/src/artifacts/index/faiss_emb_index/index.faiss differ

diff --git a/api/src/artifacts/index/faiss_emb_index/index.pkl b/api/src/artifacts/index/faiss_emb_index/index.pkl

new file mode 100755

index 000000000..317c43f9d

Binary files /dev/null and b/api/src/artifacts/index/faiss_emb_index/index.pkl differ

diff --git a/api/src/artifacts/model/.gitignore b/api/src/artifacts/model/.gitignore

new file mode 100644

index 000000000..e69de29bb

diff --git a/api/src/config.py b/api/src/config.py

new file mode 100644

index 000000000..33c055874

--- /dev/null

+++ b/api/src/config.py

@@ -0,0 +1,105 @@

+import json

+import os

+

+PORT = 8500

+

+ASK_DOUBT_CONFIG = {

+ "emb_model_name_or_path": "ai4bharat/indic-bert",

+ "emb_model_type": "semantic", #options: syntactic, semantic

+ "qa_model_name_or_path": "rohitsroch/indic-mALBERT-squad-v2",

+ "qa_model_type": "vanilla_fp32", #options: vanilla_fp32, quantized_int8

+

+ "intel_scikit_learn_enabled": True,

+ "ipex_enabled": True,

+ "keep_accents": True,

+ "normalize_L2": False,

+ "do_lower_case": True,

+ "faiss_vector_index_path": "artifacts/index/faiss_emb_index"

+}

+

+

+AI_EXAMINER_CONFIG = {

+ "llm_method": "azure_gpt3", #options: azure_gpt3, hf_pipeline, hf_peft

+

+ "azure_gpt3":{

+ "deployment_name": "text-davinci-003-prod",

+ "llm_kwargs": {

+ "temperature": 0.3,

+ "max_tokens": 300,

+ "n": 1,

+ "top_p": 1.0,

+ "frequency_penalty": 1.1

+ }

+ },

+ "hf_pipeline":{

+ "model_name": "tiiuae/falcon-7b-instruct",

+ "task": "text-generation",

+ "device": -1,

+ "llm_kwargs":{

+ "torch_dtype": "float16", #bfloat16, float16, float32

+ "device_map": "auto",

+ "load_in_4bit": True,

+ "max_memory": "24000MB",

+ "trust_remote_code": True

+ },

+ "pipeline_kwargs": {

+ "max_new_tokens": 300,

+ "top_p": 0.15,

+ "top_k": 0,

+ "temperature": 0.3,

+ "repetition_penalty": 1.1,

+ "num_return_sequences": 1,

+ "do_sample": True,

+ "stop_sequence": []

+ },

+ "quantization_kwargs": {

+ "load_in_4bit": True, # do 4 bit quantization

+ "load_in_8bit": False,

+ "bnb_4bit_compute_dtype": "float16", #bfloat16, float16, float32

+ "bnb_4bit_use_double_quant": True,

+ "bnb_4bit_quant_type": "nf4"

+ }

+ },

+ "hf_peft":{

+ "model_name": "huggyllama/llama-7b",

+ "adapter_name": "timdettmers/qlora-alpaca-7b",

+ "task": "text-generation",

+ "device": -1,

+ "llm_kwargs":{

+ "torch_dtype": "float16", #bfloat16, float16, float32

+ "device_map": "auto",

+ "load_in_4bit": True,

+ "max_memory": "32000MB",

+ "trust_remote_code": True

+ },

+ "generation_kwargs": {

+ "max_new_tokens": 300,

+ "top_p": 0.15,

+ "top_k": 0,

+ "temperature": 0.3,

+ "repetition_penalty": 1.1,

+ "num_return_sequences": 1,

+ "do_sample": True,

+ "early_stopping": True,

+ "stop_sequence": []

+ },

+ "quantization_kwargs": {

+ "load_in_4bit": True, # do 4 bit quantization

+ "load_in_8bit": False,

+ "bnb_4bit_compute_dtype": "float16", #bfloat16, float16, float32

+ "bnb_4bit_use_double_quant": True,

+ "bnb_4bit_quant_type": "nf4"

+ }

+ }

+}

+

+os.environ["TOKENIZERS_PARALLELISM"] = "True"

+

+# Set this to `azure`

+os.environ["OPENAI_API_TYPE"]= "azure"

+# The API version you want to use: set this to `2022-12-01` for the released version.

+os.environ["OPENAI_API_VERSION"] = "2022-12-01"

+# The base URL for your Azure OpenAI resource. You can find this in the Azure portal under your Azure OpenAI resource.

+os.environ["OPENAI_API_BASE"] = "https://c5-openai-research.openai.azure.com/"

+# The API key for your Azure OpenAI resource. You can find this in the Azure portal under your Azure OpenAI resource.

+os.environ["OPENAI_API_KEY"] = ""
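The assignments above overwrite whatever is already exported in the shell, including the API key. A minimal sketch of an alternative, assuming the key is supplied by the deployment environment; `configure_azure_openai_env` is a hypothetical helper, not part of this patch:

```python
import os


def configure_azure_openai_env(env=None):
    """Hypothetical helper: fill in non-secret defaults and require an API key."""
    env = os.environ if env is None else env
    # setdefault keeps any value that is already exported.
    env.setdefault("OPENAI_API_TYPE", "azure")
    env.setdefault("OPENAI_API_VERSION", "2022-12-01")
    # An empty or missing key is reported early instead of failing on the first request.
    if not env.get("OPENAI_API_KEY"):
        raise RuntimeError("OPENAI_API_KEY is not set; export it before starting the API.")
    return env
```

Passing a plain dict as `env` makes the helper easy to exercise in a unit test without touching the real process environment.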

diff --git a/api/src/core/.gitignore b/api/src/core/.gitignore

new file mode 100644

index 000000000..e69de29bb

diff --git a/api/src/core/__init__.py b/api/src/core/__init__.py

new file mode 100644

index 000000000..8b1378917

--- /dev/null

+++ b/api/src/core/__init__.py

@@ -0,0 +1 @@

+

diff --git a/api/src/core/common.py b/api/src/core/common.py

new file mode 100644

index 000000000..5c69eedcf

--- /dev/null

+++ b/api/src/core/common.py

@@ -0,0 +1,14 @@

+import six

+

+

+def convert_to_unicode(text):

+ """Converts `text` to Unicode (if it's not already), assuming utf-8 input."""

+ if six.PY3:

+ if isinstance(text, str):

+ return text

+ elif isinstance(text, bytes):

+ return text.decode("utf-8", "ignore")

+ else:

+ raise ValueError("Unsupported string type: %s" % (type(text)))

+ else:

+ raise ValueError("convert_to_unicode requires Python 3.")

\ No newline at end of file
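On Python 3 the `six.PY3` check is always true, so `convert_to_unicode` reduces to two `isinstance` tests. A minimal sketch of the same behaviour without the `six` dependency, offered as a possible simplification and not as the patch's implementation:

```python
def convert_to_unicode(text):
    """Return `text` as str, decoding UTF-8 bytes and ignoring invalid sequences."""
    if isinstance(text, str):
        return text
    if isinstance(text, bytes):
        return text.decode("utf-8", "ignore")
    raise ValueError("Unsupported string type: %s" % type(text))


print(convert_to_unicode(b"caf\xc3\xa9"))  # UTF-8 bytes for "café"
```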

diff --git a/api/src/core/constants.py b/api/src/core/constants.py

new file mode 100644

index 000000000..121d2362b

--- /dev/null

+++ b/api/src/core/constants.py

@@ -0,0 +1 @@

+WARNING_PREFIX = ""

\ No newline at end of file

diff --git a/api/src/core/create_vector_index.py b/api/src/core/create_vector_index.py

new file mode 100644

index 000000000..443e9078d

--- /dev/null

+++ b/api/src/core/create_vector_index.py

@@ -0,0 +1,207 @@

+

+#!/usr/bin/env python

+# coding=utf-8

+# Copyright 2023 C5ailabs Team (Authors: Rohit Sroch) All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+"""

+Create a retriever vector index for faster search during inference using FAISS

+"""

+import argparse

+import os

+import sys

+sys.path.append(os.getcwd())

+import webvtt

+from glob import glob

+

+from core.retrievers.huggingface import HuggingFaceEmbeddings

+from core.retrievers.tfidf import TFIDFEmbeddings

+from core.retrievers.faiss_vector_store import FAISS

+from core.retrievers.docstore import Document

+

+def get_subtitles_as_docs(course_dir):

+ # format of courses folder structure is courses/{topic_name}/{week_name}/{subtopic_name}/subtitle-en.vtt

+ path = os.path.join(course_dir, "*/*/*/*.vtt")

+ subtitle_fpaths = glob(path)

+

+ docs = []

+ for subtitle_fpath in subtitle_fpaths:

+ topic_name = subtitle_fpath.split("/")[-4]

+ week_name = subtitle_fpath.split("/")[-3]

+ subtopic_name = subtitle_fpath.split("/")[-2]

+ file_name = subtitle_fpath.split("/")[-1]

+

+ subtitles = webvtt.read(subtitle_fpath)

+ for index, subtitle in enumerate(subtitles):

+ docs.append(

+ Document(

+ page_content=subtitle.text,

+ metadata={

+ "doc_id": index,

+ "start_timestamp": subtitle.start,

+ "end_timestamp": subtitle.end,

+ "topic_name": topic_name,

+ "week_name": week_name,

+ "subtopic_name": subtopic_name,

+ "file_name": file_name,

+ "fpath": subtitle_fpath

+ })

+ )

+

+ return docs

+

+def load_emb_model(

+ model_name_or_path,

+ model_type="semantic",

+ intel_scikit_learn_enabled=True,

+ ipex_enabled=False,

+ model_revision="main",

+ keep_accents=False,

+ cache_dir=".cache"

+ ):

+ """Load an embedding model."""

+ emb_model = None

+

+ if model_type == "semantic":

+ emb_model = HuggingFaceEmbeddings(

+ model_name_or_path=model_name_or_path,

+ ipex_enabled=ipex_enabled,

+ hf_kwargs={

+ "model_revision": model_revision,

+ "keep_accents": keep_accents,

+ "cache_dir": cache_dir

+ }

+ )

+ elif model_type == "syntactic":

+ emb_model = TFIDFEmbeddings(

+ intel_scikit_learn_enabled=intel_scikit_learn_enabled

+ )

+

+ return emb_model

+

+

+def main(args):

+

+ if args.emb_model_type == "semantic":

+ print("**********Creating a Semantic vector index using "

+ "HuggingFaceEmbeddings with `model_name_or_path`={}**********".format(

+ args.model_name_or_path

+ ))

+ elif args.emb_model_type == "syntactic":

+ print("**********Creating a Syntactic vector index using TFIDFEmbeddings**********")

+

+ # get the contexts as docs with metadata

+ docs = get_subtitles_as_docs(args.course_dir)

+ # get the embedding model

+ emb_model = load_emb_model(

+ args.model_name_or_path,

+ model_type=args.emb_model_type,

+ intel_scikit_learn_enabled=args.intel_scikit_learn_enabled,

+ ipex_enabled=args.ipex_enabled,

+ keep_accents=args.keep_accents

+ )

+

+ vector_index = FAISS.from_documents(

+ docs, emb_model, normalize_L2=args.normalize_L2)

+ save_path = "faiss_emb_index" if args.output_dir is None \

+ else os.path.join(args.output_dir, "faiss_emb_index")

+ vector_index.save_local(save_path)

+

+ if args.emb_model_type == "syntactic":

+ emb_model.save_tfidf_vocab(emb_model.vectorizer.vocabulary_, save_path)

+

+ print("*"*100)

+ print("Validating for example query...")

+ if args.emb_model_type == "syntactic":

+ # reload with tfidf vocab

+ emb_model = TFIDFEmbeddings(

+ intel_scikit_learn_enabled=args.intel_scikit_learn_enabled,

+ tfidf_kwargs = {

+ "tfidf_vocab_path": save_path

+ }

+ )

+

+ vector_index = FAISS.load_local(

+ save_path, emb_model, normalize_L2=args.normalize_L2)

+ query = "How does a neural network help in predicting housing prices?"

+ docs = vector_index.similarity_search(query, k=3)

+ print("Relevant Docs: {}".format(docs))

+

+ print("*"*100)

+ print("😊 FAISS local vector index successfully saved with name faiss_emb_index")

+

+

+if __name__ == "__main__":

+

+ parser = argparse.ArgumentParser(description="Create a FAISS vector index from course video subtitle (.vtt) files")

+

+ parser.add_argument(

+ "--course_dir",

+ type=str,

+ help="Base directory containing courses",

+ required=True

+ )

+ parser.add_argument(

+ "--emb_model_type",

+ type=str,

+ default="syntactic",

+ help="Embedding model type as semantic or syntactic",

+ choices=["semantic", "syntactic"]

+ )

+ parser.add_argument(

+ "--model_name_or_path",

+ type=str,

+ default=None,

+ help="Hugging face model_name_or_path in case of emb_model_type as semantic"

+ )

+ parser.add_argument(

+ "--keep_accents",

+ action="store_true",

+ help="Preserve accents (vowel matras / diacritics) during tokenization when emb_model_type is semantic",

+ )

+ parser.add_argument(

+ "--intel_scikit_learn_enabled",

+ action="store_true",

+ help="Whether to use the Intel extension for scikit-learn when emb_model_type is syntactic",

+ )

+ parser.add_argument(

+ "--ipex_enabled",

+ action="store_true",

+ help="Whether to use the Intel extension for PyTorch when emb_model_type is semantic",

+ )

+ parser.add_argument(

+ "--normalize_L2",

+ action="store_true",

+ help="Whether to L2-normalize embeddings; usually a good idea when emb_model_type is syntactic",

+ )

+ parser.add_argument(

+ "--output_dir",

+ type=str,

+ default=None,

+ help="Output dir where index will be saved"

+ )

+

+ args = parser.parse_args()

+

+ # sanity checks

+ if args.emb_model_type == "semantic":

+ if args.model_name_or_path is None:

+ raise ValueError("Please provide valid `model_name_or_path` as emb_model_type = `semantic`")

+ elif args.emb_model_type == "syntactic":

+ if args.model_name_or_path is not None:

+ raise ValueError("Please don't provide `model_name_or_path` as emb_model_type = `syntactic`")

+

+ if args.output_dir is not None:

+ os.makedirs(args.output_dir, exist_ok=True)

+

+ main(args)

\ No newline at end of file
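`get_subtitles_as_docs` derives the topic, week and subtopic by splitting the path on "/", which assumes POSIX separators. A minimal sketch of a separator-independent variant using `pathlib`; `split_subtitle_path` and the sample paths are hypothetical and only illustrate the idea:

```python
from pathlib import PurePosixPath, PureWindowsPath


def split_subtitle_path(subtitle_fpath, path_cls=PurePosixPath):
    """Hypothetical helper: return (topic, week, subtopic, file) from a subtitle path.

    Expects the layout .../{topic_name}/{week_name}/{subtopic_name}/{file}.vtt.
    In real code `pathlib.Path` would pick the right flavour for the host OS.
    """
    parts = path_cls(subtitle_fpath).parts
    if len(parts) < 4:
        raise ValueError("Unexpected subtitle path: %s" % subtitle_fpath)
    topic_name, week_name, subtopic_name, file_name = parts[-4:]
    return topic_name, week_name, subtopic_name, file_name


print(split_subtitle_path("courses/ml/week1/intro/subtitle-en.vtt"))
print(split_subtitle_path(r"courses\ml\week1\intro\subtitle-en.vtt", PureWindowsPath))
```

Both calls yield the same four components, so the metadata stored with each `Document` would not depend on the platform that built the index.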

diff --git a/api/src/core/extractive_qa.py b/api/src/core/extractive_qa.py

new file mode 100644

index 000000000..f4e5ab9f3

--- /dev/null

+++ b/api/src/core/extractive_qa.py

@@ -0,0 +1,326 @@

+#!/usr/bin/env python

+# coding=utf-8

+# Copyright 2023 C5ailabs Team All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+"""

+Extractive Question Answering for LEAP platform

+"""

+from transformers import (

+ AutoConfig,

+ AutoModelForQuestionAnswering,

+ AutoTokenizer,

+ pipeline

+)

+# optimum-intel

+from optimum.intel import INCModelForQuestionAnswering

+

+# retrievers

+from core.retrievers.huggingface import HuggingFaceEmbeddings

+from core.retrievers.tfidf import TFIDFEmbeddings

+from core.retrievers.faiss_vector_store import FAISS

+

+from abc import ABC, abstractmethod

+from pydantic import Extra, BaseModel

+from typing import List, Optional, Dict, Any

+

+from utils.logging_handler import Logger

+

+class BaseQuestionAnswering(BaseModel, ABC):

+ """Base Question Answering interface"""

+

+ @abstractmethod

+ async def retrieve_docs(

+ self,

+ question: str,

+ top_n: Optional[int] = 2,

+ sim_score: Optional[float] = 0.9

+ ) -> List[str]:

+ """Take in a question and return List of top_n docs"""

+

+ @abstractmethod

+ async def span_answer(

+ self,

+ question: str,

+ context: str,

+ doc_stride: Optional[int] = 128,

+ max_answer_length: Optional[int] = 30,

+ max_seq_length: Optional[int] = 512,

+ top_k: Optional[int] = 1

+ ) -> Dict:

+ """Take in a question and context and return List of top_k answer as dict."""

+

+ @abstractmethod

+ async def predict(

+ self,

+ question: str,

+ doc_stride: Optional[int] = 128,

+ max_answer_length: Optional[int] = 30,

+ max_seq_length: Optional[int] = 512,

+ top_n: Optional[int] = 2,

+ top_k: Optional[int] = 1

+ ) -> Dict:

+ """Predict answer from question and context"""

+

+

+def load_qa_model(

+ model_name_or_path,

+ model_type="vanilla_fp32",

+ ipex_enabled=False,

+ model_revision="main",

+ keep_accents=False,

+ do_lower_case=False,

+ cache_dir=".cache"):

+ """Load a question-answering pipeline."""

+ qa_pipeline = None

+

+ tokenizer = AutoTokenizer.from_pretrained(

+ model_name_or_path,

+ cache_dir=cache_dir,

+ use_fast=True,

+ revision=model_revision,

+ keep_accents=keep_accents,

+ do_lower_case=do_lower_case,

+ )

+ if model_type == "vanilla_fp32":

+ config = AutoConfig.from_pretrained(

+ model_name_or_path,

+ cache_dir=cache_dir,

+ revision=model_revision

+ )

+ model = AutoModelForQuestionAnswering.from_pretrained(

+ model_name_or_path,

+ from_tf=bool(".ckpt" in model_name_or_path),

+ config=config,

+ cache_dir=cache_dir,

+ revision=model_revision

+ )

+ if ipex_enabled:

+ try:

+ import intel_extension_for_pytorch as ipex

+ except ImportError as err:

+ raise ImportError("transformers 4.29.0 requires IPEX version 1.12 or higher; install `intel_extension_for_pytorch`") from err

+ model = ipex.optimize(model)

+

+ qa_pipeline = pipeline(

+ task="question-answering",

+ model=model,

+ tokenizer=tokenizer

+ )

+ elif model_type == "quantized_int8":

+

+ model = INCModelForQuestionAnswering.from_pretrained(

+ model_name_or_path,

+ cache_dir=cache_dir,

+ revision=model_revision

+ )

+ qa_pipeline = pipeline(

+ task="question-answering",

+ model=model,

+ tokenizer=tokenizer

+ )

+

+ return qa_pipeline

+

+def load_emb_model(

+ model_name_or_path,

+ tfidf_vocab_path,

+ model_type="semantic",

+ intel_scikit_learn_enabled=True,

+ ipex_enabled=False,

+ model_revision="main",

+ keep_accents=False,

+ do_lower_case=False,

+ cache_dir=".cache"

+ ):

+ """Load an embedding model."""

+

+ emb_model = None

+

+ if model_type == "semantic":

+ emb_model = HuggingFaceEmbeddings(

+ model_name_or_path=model_name_or_path,

+ ipex_enabled=ipex_enabled,

+ hf_kwargs={

+ "model_revision": model_revision,

+ "keep_accents": keep_accents,

+ "do_lower_case": do_lower_case,

+ "cache_dir": cache_dir

+ }

+ )

+ elif model_type == "syntactic":

+ emb_model = TFIDFEmbeddings(

+ intel_scikit_learn_enabled=intel_scikit_learn_enabled,

+ tfidf_kwargs = {

+ "tfidf_vocab_path": tfidf_vocab_path

+ }

+ )

+

+ return emb_model

+

+def load_faiss_vector_index(

+ faiss_vector_index_path,

+ emb_model,

+ normalize_L2=False):

+

+ faiss_vector_index = FAISS.load_local(

+ faiss_vector_index_path, emb_model,

+ normalize_L2=normalize_L2)

+

+ return faiss_vector_index

+

+class ExtractiveQuestionAnswering(BaseQuestionAnswering):

+ """QuestionAnswering wrapper that takes in a question and returns an answer."""

+

+ emb_model: Any = None

+ emb_model_type: Optional[str] = "semantic"

+

+ qa_model: Any = None

+ qa_model_type: Optional[str] = "vanilla_fp32"

+

+ faiss_vector_index: Any = None

+

+ class Config:

+ """Configuration for this pydantic object."""

+

+ extra = Extra.forbid

+

+ @classmethod

+ def load(cls,

+ emb_model_name_or_path: str,

+ qa_model_name_or_path: str,

+ faiss_vector_index_path: str,

+ **kwargs):

+ """

+ Args:

+ emb_model_name_or_path (str): Embedding model name or path

+ qa_model_name_or_path (str): Question Answering model name or path

+ faiss_vector_index_path (str): FAISS context vector index path

+ """

+

+ emb_model_type = kwargs.get("emb_model_type", "semantic")

+ qa_model_type = kwargs.get("qa_model_type", "vanilla_fp32")

+ normalize_L2 = kwargs.get("normalize_L2", False)

+

+ # load the emb model

+ emb_model = load_emb_model(

+ emb_model_name_or_path,

+ faiss_vector_index_path,

+ model_type=emb_model_type,

+ intel_scikit_learn_enabled=kwargs.get("intel_scikit_learn_enabled", True),

+ ipex_enabled=kwargs.get("ipex_enabled", False),

+ model_revision=kwargs.get("model_revision", "main"),

+ keep_accents=kwargs.get("keep_accents", False),

+ do_lower_case=kwargs.get("do_lower_case", False),

+ cache_dir=kwargs.get("cache_dir", ".cache")

+ )

+ # load the qa model

+ qa_model = load_qa_model(

+ qa_model_name_or_path,

+ model_type=qa_model_type,

+ ipex_enabled=kwargs.get("ipex_enabled", False),

+ model_revision=kwargs.get("model_revision", "main"),

+ keep_accents=kwargs.get("keep_accents", False),

+ do_lower_case=kwargs.get("do_lower_case", False),

+ cache_dir=kwargs.get("cache_dir", ".cache")

+ )

+

+ # load faiss vector index

+ faiss_vector_index = load_faiss_vector_index(

+ faiss_vector_index_path,

+ emb_model=emb_model,

+ normalize_L2=normalize_L2

+ )

+

+ return cls(

+ emb_model=emb_model,

+ emb_model_type=emb_model_type,

+ qa_model=qa_model,

+ qa_model_type=qa_model_type,

+ faiss_vector_index=faiss_vector_index

+ )

+

+ async def retrieve_docs(

+ self,

+ question: str,

+ top_n: Optional[int] = 2,

+ sim_score: Optional[float] = 0.9

+ ) -> List[str]:

+ """Take in a question and return List of top_n docs"""

+ docs = self.faiss_vector_index.similarity_search(

+ question, top_n,

+ sim_score=sim_score)

+

+ return docs

+

+ async def span_answer(

+ self,

+ question: str,

+ context: str,

+ doc_stride: Optional[int] = 128,

+ max_answer_length: Optional[int] = 30,

+ max_seq_length: Optional[int] = 512,

+ top_k: Optional[int] = 1

+ ) -> Dict:

+ """Take in a question and context and return List of top_k answer as dict."""

+

+ preds = self.qa_model(

+ question=question,

+ context=context,

+ doc_stride=doc_stride,

+ max_answer_len=max_answer_length,

+ max_seq_len=max_seq_length,

+ top_k=top_k

+ )

+ return preds

+

+ async def predict(

+ self,

+ question: str,

+ doc_stride: Optional[int] = 128,

+ max_answer_length: Optional[int] = 30,

+ max_seq_length: Optional[int] = 512,

+ top_n: Optional[int] = 2,

+ top_k: Optional[int] = 1

+ ) -> Dict:

+ """Predict answer from question and context"""

+

+ docs = await self.retrieve_docs(question, top_n)

+ relevant_contexts = [

+ {"context": doc.page_content, "metadata": doc.metadata}

+ for doc in docs

+ ]

+

+ contexts = [doc.page_content for doc in docs]

+ context = ". ".join(contexts)

+ preds = await self.span_answer(

+ question, context, doc_stride,

+ max_answer_length, max_seq_length, top_k)

+

+ relevant_context_id = -1

+ for index, dict_ in enumerate(relevant_contexts):

+ if preds["answer"] in dict_["context"]:

+ relevant_context_id = index

+ break

+

+ output = {

+ "question": question,

+ "context": context,

+ "answer": preds["answer"],

+ "score": preds["score"],

+ "relevant_context_id": relevant_context_id,

+ "relevant_contexts": relevant_contexts

+ }

+

+ return output

+
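`predict` indexes `preds["answer"]` directly, which works when the question-answering pipeline returns a single dict. To my understanding the pipeline returns a list of dicts when `top_k` yields more than one answer, so a caller may want to normalise the result first. A minimal sketch under that assumption; `best_prediction`, `find_relevant_context_id` and the sample data are hypothetical:

```python
def best_prediction(preds):
    """Return the highest-scoring answer dict from a dict or a list of dicts."""
    if isinstance(preds, dict):
        return preds
    return max(preds, key=lambda pred: pred["score"])


def find_relevant_context_id(answer, contexts):
    """Return the index of the first context containing `answer`, or -1."""
    for index, context in enumerate(contexts):
        # An empty answer is contained in every string, so it is skipped explicitly.
        if answer and answer in context:
            return index
    return -1


# Hypothetical pipeline output for top_k=2.
preds = [
    {"answer": "housing prices", "score": 0.41},
    {"answer": "a neural network", "score": 0.87},
]
contexts = ["We want to predict housing prices.", "This is a neural network."]
best = best_prediction(preds)
print(best["answer"], find_relevant_context_id(best["answer"], contexts))
```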

diff --git a/api/src/core/interactive_examiner.py b/api/src/core/interactive_examiner.py

new file mode 100644

index 000000000..14519a22f

--- /dev/null

+++ b/api/src/core/interactive_examiner.py

@@ -0,0 +1,238 @@

+#!/usr/bin/env python

+# coding=utf-8

+# Copyright 2023 C5ailabs Team (Authors: Rohit Sroch) All rights reserved.

+#

+# Licensed under the Apache License, Version 2.0 (the "License");

+# you may not use this file except in compliance with the License.

+# You may obtain a copy of the License at

+#

+# http://www.apache.org/licenses/LICENSE-2.0

+#

+# Unless required by applicable law or agreed to in writing, software

+# distributed under the License is distributed on an "AS IS" BASIS,

+# WITHOUT WARRANTIES OR CONDITIONS OF ANY KIND, either express or implied.

+# See the License for the specific language governing permissions and

+# limitations under the License.

+"""

+Interactive AI Examiner for LEAP platform

+"""

+#lang chain

+import langchain

+from langchain import PromptTemplate, LLMChain

+from langchain.cache import InMemoryCache

+

+from core.prompt import (

+ EXAMINER_ASK_QUESTION_PROMPT,

+ EXAMINER_EVALUATE_STUDENT_ANSWER_PROMPT,

+ EXAMINER_HINT_MOTIVATE_STUDENT_PROMPT

+)

+

+from abc import ABC, abstractmethod

+from pydantic import Extra, BaseModel

+from typing import List, Optional, Dict, Any

+

+from utils.logging_handler import Logger

+

+#langchain.llm_cache = InMemoryCache()

+

+class BaseAIExaminer(BaseModel, ABC):

+ """Base AI Examiner interface"""

+

+ @abstractmethod

+ async def examiner_ask_question(

+ self,

+ context: str,

+ question_type: str,

+ topic: str

+ ) -> Dict:

+ """AI Examiner generates a question"""

+

+ @abstractmethod

+ async def examiner_eval_answer(

+ self,

+ ai_question: str,

+ student_solution: str,

+ topic: str

+ ) -> Dict:

+ """AI Examiner evaluates student solution"""

+

+ @abstractmethod

+ async def examiner_hint_motivate(

+ self,

+ ai_question: str,

+ student_solution: str,

+ topic: str

+ ) -> Dict:

+ """AI Examiner gives a hint and motivates the student"""

+

+class InteractiveAIExaminer(BaseAIExaminer):

+ """Interactive AI Examiner"""

+

+ llm_chain_ask_ques: LLMChain

+ prompt_template_ask_ques: PromptTemplate

+

+ llm_chain_eval_ans: LLMChain

+ prompt_template_eval_ans: PromptTemplate

+

+ llm_chain_hint_motivate: LLMChain

+ prompt_template_hint_motivate: PromptTemplate

+

+ class Config:

+ """Configuration for this pydantic object."""

+

+ extra = Extra.forbid

+

+ @classmethod

+ def load(cls,

+ llm: Any,

+ **kwargs):

+ """

+ Args:

+ llm (Any): Large language model object

+ """

+

+ verbose = kwargs.get("verbose", True)

+ # prompt templates

+ prompt_template_ask_ques = PromptTemplate(

+ template=EXAMINER_ASK_QUESTION_PROMPT,

+ input_variables=[

+ "context",

+ "question_type",

+ "topic"

+ ]

+ )

+ prompt_template_eval_ans = PromptTemplate(

+ template=EXAMINER_EVALUATE_STUDENT_ANSWER_PROMPT,

+ input_variables=[

+ "ai_question",

+ "student_solution",

+ "topic"

+ ]

+ )

+ prompt_template_hint_motivate = PromptTemplate(

+ template=EXAMINER_HINT_MOTIVATE_STUDENT_PROMPT,

+ input_variables=[

+ "ai_question",

+ "student_solution",

+ "topic"

+ ]

+ )

+ # llm chains

+ llm_chain_ask_ques = LLMChain(

+ llm=llm,

+ prompt=prompt_template_ask_ques,

+ verbose=verbose

+ )

+ llm_chain_eval_ans = LLMChain(

+ llm=llm,

+ prompt=prompt_template_eval_ans,

+ verbose=verbose

+ )

+ llm_chain_hint_motivate = LLMChain(

+ llm=llm,

+ prompt=prompt_template_hint_motivate,

+ verbose=verbose

+ )

+

+ return cls(

+ llm_chain_ask_ques=llm_chain_ask_ques,

+ prompt_template_ask_ques=prompt_template_ask_ques,

+ llm_chain_eval_ans=llm_chain_eval_ans,

+ prompt_template_eval_ans=prompt_template_eval_ans,

+ llm_chain_hint_motivate=llm_chain_hint_motivate,

+ prompt_template_hint_motivate=prompt_template_hint_motivate

+ )

+

+ async def examiner_ask_question(

+ self,

+ context: str,

+ question_type: str,

+ topic: str

+ ) -> Dict:

+ """AI Examiner generates a question"""

+ is_predicted = True

+ result = {

+ "prediction": None,

+ "error_message": None

+ }

+ try:

+ output = self.llm_chain_ask_ques.predict(

+ context=context,

+ question_type=question_type,

+ topic=topic)

+ output = output.strip()

+ result["prediction"] = {

+ "ai_question": output

+ }

+

+ return (is_predicted, result)

+ except Exception as err:

+ Logger.error("Error: {}".format(str(err)))

+ is_predicted = False

+ result["error_message"] = str(err)

+ return (is_predicted, result)

+

+ async def examiner_eval_answer(

+ self,

+ ai_question: str,

+ student_solution: str,

+ topic: str

+ ) -> Dict:

+ """AI Examiner evaluates student solution"""

+ is_predicted = True

+ result = {

+ "prediction": None,

+ "error_message": None

+ }

+ try:

+ output = self.llm_chain_eval_ans.predict(

+ ai_question=ai_question,

+ student_solution=student_solution,

+ topic=topic)

+ output = output.strip()

+ idx = output.find("Student grade:")

+ student_grade = output[idx + 15: ].strip()

+ result["prediction"] = {

+ "student_grade": student_grade

+ }

+

+ return (is_predicted, result)

+ except Exception as err:

+ Logger.error("Error: {}".format(str(err)))

+ is_predicted = False

+ result["error_message"] = str(err)

+ return (is_predicted, result)

+

+ async def examiner_hint_motivate(

+ self,

+ ai_question: str,

+ student_solution: str,

+ topic: str

+ ) -> Dict:

+ """AI Examiner gives a hint and motivates the student"""

+ is_predicted = True

+ result = {

+ "prediction": None,

+ "error_message": None

+ }

+ try:

+ output = self.llm_chain_hint_motivate.predict(

+ ai_question=ai_question,

+ student_solution=student_solution,

+ topic=topic)

+ output = output.strip()

+ idx = output.find("Encourage student:")

+ hint = output[: idx-1].strip()

+ motivation = output[idx + 19: ].strip()

+

+ result["prediction"] = {

+ "hint": hint,

+ "motivation": motivation

+ }

+

+ return (is_predicted, result)

+ except Exception as err:

+ Logger.error("Error: {}".format(str(err)))

+ is_predicted = False

+ result["error_message"] = str(err)

+ return (is_predicted, result)

\ No newline at end of file
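`examiner_eval_answer` and `examiner_hint_motivate` slice the LLM output at fixed offsets around a marker such as "Encourage student:". When the marker is missing, `str.find` returns -1 and the slices silently cut the text in the wrong place. A minimal sketch of a more defensive split with `str.partition`; `split_on_marker` and the sample output are hypothetical:

```python
def split_on_marker(output, marker):
    """Split `output` around the first `marker`.

    Returns (before, after), both stripped. If the marker is absent,
    returns (output.strip(), None) so the caller can tell the cases apart.
    """
    before, found, after = output.partition(marker)
    if not found:
        return output.strip(), None
    return before.strip(), after.strip()


# Hypothetical LLM output in the format the prompt asks for.
sample = "Think about how the weights are updated.\nEncourage student: You are close, keep going!"
hint, motivation = split_on_marker(sample, "Encourage student:")
print(hint)
print(motivation)
```

The same helper would cover the grade case, for example `_, grade = split_on_marker(output, "Student grade:")`, without hard-coding the marker length.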

diff --git a/api/src/core/llm/__init__.py b/api/src/core/llm/__init__.py

new file mode 100644

index 000000000..e69de29bb

diff --git a/api/src/core/llm/base.py b/api/src/core/llm/base.py

new file mode 100644

index 000000000..2eaa01d52

--- /dev/null

+++ b/api/src/core/llm/base.py

@@ -0,0 +1,65 @@

+

+# lang chain

+from langchain.callbacks.manager import CallbackManager

+from langchain.callbacks.streaming_stdout import StreamingStdOutCallbackHandler

+from langchain.llms import AzureOpenAI

+

+from core.llm.huggingface_pipeline import HuggingFacePipeline

+from core.llm.huggingface_peft import HuggingFacePEFT

+

+

+def get_llm(llm_method="azure_gpt3",

+ callback_manager=None,

+ **kwargs):

+

+ llm = None

+ if callback_manager is None:

+ callback_manager = CallbackManager(

+ [StreamingStdOutCallbackHandler()])

+ if llm_method == "azure_gpt3":

+ """

+ Wrapper around Azure OpenAI

+ https://azure.microsoft.com/en-in/products/cognitive-services/openai-service

+ """

+ llm_kwargs = kwargs.get("llm_kwargs", {})

+ llm = AzureOpenAI(

+ callback_manager=callback_manager,

+ deployment_name=kwargs.get(

+ "deployment_name", "text-davinci-003-prod"),

+ **llm_kwargs

+ )

+ elif llm_method == "hf_pipeline":

+ """

+ Wrapper around HuggingFace Pipeline API.

+ https://huggingface.co/models

+ """

+

+ llm = HuggingFacePipeline.from_model_id(

+ model_id=kwargs.get("model_name", "bigscience/bloom-1b7"),

+ task=kwargs.get("task", "text-generation"),

+ device=kwargs.get("device", -1),

+ model_kwargs=kwargs.get("llm_kwargs", {}),

+ pipeline_kwargs=kwargs.get("pipeline_kwargs", {}),

+ quantization_kwargs=kwargs.get("quantization_kwargs", {})

+ )

+ elif llm_method == "hf_peft":

+ """

+ Wrapper around HuggingFace Peft API.

+ https://huggingface.co/models

+ """

+ llm = HuggingFacePEFT.from_model_id(

+ model_id=kwargs.get("model_name", "huggyllama/llama-7b"),

+ adapter_id=kwargs.get("adapter_name", "timdettmers/qlora-flan-7b"),

+ task=kwargs.get("task", "text-generation"),

+ device=kwargs.get("device", -1),

+ model_kwargs=kwargs.get("llm_kwargs", {}),

+ generation_kwargs=kwargs.get("generation_kwargs", {}),

+ quantization_kwargs=kwargs.get("quantization_kwargs", {})

+ )

+ else:

+ raise ValueError(

+ "Please use a valid llm_method. Supported options are "

+ "[azure_gpt3, hf_pipeline, hf_peft] only."

+ )

+

+ return llm

diff --git a/api/src/core/llm/huggingface_peft.py b/api/src/core/llm/huggingface_peft.py

new file mode 100644

index 000000000..9c848e9ee

--- /dev/null

+++ b/api/src/core/llm/huggingface_peft.py

@@ -0,0 +1,226 @@

+"""Wrapper around HuggingFace Peft APIs."""

+import importlib.util

+import logging

+from typing import Any, List, Mapping, Optional

+

+from pydantic import Extra

+

+from langchain.callbacks.manager import CallbackManagerForLLMRun

+from langchain.llms.base import LLM

+from langchain.llms.utils import enforce_stop_tokens

+

+DEFAULT_MODEL_ID = "huggyllama/llama-7b"

+DEFAULT_ADAPTER_ID = "timdettmers/qlora-flan-7b"

+DEFAULT_TASK = "text-generation"

+VALID_TASKS = ("text-generation",)

+

+logger = logging.getLogger(__name__)

+

+

+class HuggingFacePEFT(LLM):

+ """Wrapper around HuggingFace PEFT API.

+

+ To use, you should have the ``transformers`` and ``peft`` python packages installed.

+

+ Only supports `text-generation` for now.

+

+ Example using from_model_id:

+ .. code-block:: python

+

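+ from core.llm.huggingface_peft import HuggingFacePEFT

+ hf = HuggingFacePEFT.from_model_id(

+ model_id="huggyllama/llama-7b", adapter_id="timdettmers/qlora-flan-7b",

+ task="text-generation", model_kwargs={"max_memory": "24000MB"},

+ generation_kwargs={"max_new_tokens": 10, "stop_sequence": []},

+ )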

+ """

+

+ model: Any #: :meta private:

+ tokenizer: Any

+

+ device: int = -1

+ """Device to use"""

+ model_id: str = DEFAULT_MODEL_ID

+ """Model name to use."""

+ adapter_id: str = DEFAULT_ADAPTER_ID

+ """Adapter name to use"""

+ model_kwargs: Optional[dict] = None

+ """Key word arguments passed to the model."""

+ generation_kwargs: Optional[dict] = None

+ """Generation arguments passed to the model."""

+ quantization_kwargs: Optional[dict] = None

+ """Quantization arguments passed to the quantization."""

+

+ class Config:

+ """Configuration for this pydantic object."""

+

+ extra = Extra.forbid

+

+ @classmethod

+ def from_model_id(

+ cls,

+ model_id: str,

+ adapter_id: str,

+ task: str,

+ device: int = -1,

+ model_kwargs: Optional[dict] = {},

+ generation_kwargs: Optional[dict] = {},

+ quantization_kwargs: Optional[dict] = {}

+ ) -> LLM:

+ """Construct the pipeline object from model_id and task."""

+ try:

+ import torch

+ from transformers import (

+ AutoModelForCausalLM,

+ AutoModelForSeq2SeqLM,

+ AutoTokenizer,

+ BitsAndBytesConfig

+ )

+ from peft import PeftModel

+ except ImportError:

+ raise ValueError(

+ "Could not import transformers python package. "

+ "Please install it with `pip install transformers peft`."

+ )

+

+ _model_kwargs = model_kwargs or {}

+ tokenizer = AutoTokenizer.from_pretrained(model_id, **_model_kwargs)

+

+ try:

+ quantization_config = None

+ if quantization_kwargs:

+ bnb_4bit_compute_dtype = quantization_kwargs.get("bnb_4bit_compute_dtype", torch.float32)

+ if bnb_4bit_compute_dtype == "bfloat16":

+ quantization_kwargs["bnb_4bit_compute_dtype"] = torch.bfloat16

+ elif bnb_4bit_compute_dtype == "float16":

+ quantization_kwargs["bnb_4bit_compute_dtype"] = torch.float16

+ elif bnb_4bit_compute_dtype == "float32":

+ quantization_kwargs["bnb_4bit_compute_dtype"] = torch.float32

+

+ quantization_config = BitsAndBytesConfig(**quantization_kwargs)

+

+ torch_dtype = _model_kwargs.get("torch_dtype", torch.float32)

+ if torch_dtype is not None:

+ if torch_dtype == "bfloat16":

+ _model_kwargs["torch_dtype"] = torch.bfloat16

+ elif torch_dtype == "float16":

+ _model_kwargs["torch_dtype"] = torch.float16

+ elif torch_dtype == "float32":

+ _model_kwargs["torch_dtype"] = torch.float32

+

+

+ max_memory= {i: _model_kwargs.get(

+ "max_memory", "24000MB") for i in range(torch.cuda.device_count())}

+ _model_kwargs.pop("max_memory", None)

+ if task == "text-generation":

+ model = AutoModelForCausalLM.from_pretrained(

+ model_id,

+ max_memory=max_memory,

+ quantization_config=quantization_config,

+ **_model_kwargs

+ )

+ model = PeftModel.from_pretrained(model, adapter_id)

+ else:

+ raise ValueError(

+ f"Got invalid task {task}, "

+ f"currently only {VALID_TASKS} are supported"

+ )

+ except ImportError as e:

+ raise ValueError(

+ f"Could not load the {task} model due to missing dependencies."

+ ) from e

+

+ if importlib.util.find_spec("torch") is not None:

+

+ cuda_device_count = torch.cuda.device_count()

+ if device < -1 or (device >= cuda_device_count):

+ raise ValueError(

+ f"Got device=={device}, "

+ f"device is required to be within [-1, {cuda_device_count})"

+ )

+ if device < 0 and cuda_device_count > 0:

+ logger.warning(

+ "Device has %d GPUs available. "

+ "Provide device={deviceId} to `from_model_id` to use available "

+ "GPUs for execution. deviceId is -1 (default) for CPU and "

+ "can be a positive integer associated with CUDA device id.",

+ cuda_device_count,

+ )

+ if "trust_remote_code" in _model_kwargs:

+ _model_kwargs = {

+ k: v for k, v in _model_kwargs.items() if k != "trust_remote_code"

+ }

+

+ return cls(

+ model=model,

+ tokenizer=tokenizer,

+ device=device, model_id=model_id, adapter_id=adapter_id,

+ model_kwargs=model_kwargs,

+ generation_kwargs=generation_kwargs,

+ quantization_kwargs=quantization_kwargs

+ )

+

+ @property

+ def _identifying_params(self) -> Mapping[str, Any]:

+ """Get the identifying parameters."""

+ return {

+ "model_id": self.model_id,

+ "model_kwargs": self.model_kwargs,

+ "generation_kwargs": self.generation_kwargs,

+ "quantization_kwargs": self.quantization_kwargs,

+ }

+

+ @property

+ def _llm_type(self) -> str:

+ return "huggingface_peft"

+

+ def _call(

+ self,

+ prompt: str,

+ stop: Optional[List[str]] = None,

+ run_manager: Optional[CallbackManagerForLLMRun] = None,

+ **kwargs: Any,

+ ) -> str:

+ import torch

+ from transformers import GenerationConfig

+ from transformers import StoppingCriteria, StoppingCriteriaList

+

+ device_id = "cpu"

+ if self.device != -1:

+ device_id = "cuda:{}".format(self.device)

+

+ stopping_criteria = None

+ stop_sequence = self.generation_kwargs.get("stop_sequence", [])

+ if len(stop_sequence) > 0:

+ stop_token_ids = [self.tokenizer(

+ stop_word, add_special_tokens=False, return_tensors='pt')['input_ids'].squeeze() for stop_word in stop_sequence]

+ stop_token_ids = [token.to(device_id) for token in stop_token_ids]

+

+ # define custom stopping criteria object

+ class StopOnTokens(StoppingCriteria):

+ def __call__(self, input_ids: torch.LongTensor, scores: torch.FloatTensor, **kwargs) -> bool:

+ for stop_ids in stop_token_ids:

+ if torch.eq(input_ids[0][-len(stop_ids):], stop_ids).all():

+ return True

+ return False

+ stopping_criteria = StoppingCriteriaList([StopOnTokens()])

+

+ # eos_token_id = stop_token_ids[0][0].item()

+ # self.generation_kwargs["pad_token_id"] = 1

+ # self.generation_kwargs["eos_token_id"] = eos_token_id

+

+ generation_kwargs = {k: v for k, v in self.generation_kwargs.items() if k != "stop_sequence"}

+ generation_config = GenerationConfig(**generation_kwargs)

+

+ inputs = self.tokenizer(prompt, return_tensors="pt").to(device_id)

+ outputs = self.model.generate(

+ inputs=inputs.input_ids,

+ stopping_criteria=stopping_criteria,

+ generation_config=generation_config

+ )

+ text = self.tokenizer.decode(outputs[0], skip_special_tokens=True)

+ text = text[len(prompt) :]

+ if len(stop_sequence) > 0:

+ text = enforce_stop_tokens(text, stop_sequence)

+

+ return text

\ No newline at end of file

diff --git a/api/src/core/llm/huggingface_pipeline.py b/api/src/core/llm/huggingface_pipeline.py

new file mode 100644

index 000000000..26d455a96

--- /dev/null

+++ b/api/src/core/llm/huggingface_pipeline.py

@@ -0,0 +1,251 @@

+"""Wrapper around HuggingFace Pipeline APIs."""

+import importlib.util

+import logging

+from typing import Any, List, Mapping, Optional

+

+from pydantic import Extra

+

+from langchain.callbacks.manager import CallbackManagerForLLMRun

+from langchain.llms.base import LLM

+from langchain.llms.utils import enforce_stop_tokens

+

+DEFAULT_MODEL_ID = "gpt2"

+DEFAULT_TASK = "text-generation"

+VALID_TASKS = ("text2text-generation", "text-generation", "summarization")

+

+logger = logging.getLogger(__name__)

+

+

+class HuggingFacePipeline(LLM):

+ """Wrapper around HuggingFace Pipeline API.

+

+ To use, you should have the ``transformers`` python package installed.

+

+ Only supports `text-generation`, `text2text-generation` and `summarization` for now.

+

+ Example using from_model_id:

+ .. code-block:: python

+

+ from langchain.llms import HuggingFacePipeline

+ hf = HuggingFacePipeline.from_model_id(

+ model_id="gpt2",

+ task="text-generation",

+ pipeline_kwargs={"max_new_tokens": 10},

+ )

+ Example passing pipeline in directly:

+ .. code-block:: python

+

+ from langchain.llms import HuggingFacePipeline

+ from transformers import AutoModelForCausalLM, AutoTokenizer, pipeline

+

+ model_id = "gpt2"

+ tokenizer = AutoTokenizer.from_pretrained(model_id)

+ model = AutoModelForCausalLM.from_pretrained(model_id)

+ pipe = pipeline(

+ "text-generation", model=model, tokenizer=tokenizer, max_new_tokens=10

+ )

+ hf = HuggingFacePipeline(pipeline=pipe)

+ """

+

+ pipeline: Any #: :meta private:

+ model_id: str = DEFAULT_MODEL_ID

+ """Model name to use."""

+ model_kwargs: Optional[dict] = None

+ """Keyword arguments passed to the model."""

+ pipeline_kwargs: Optional[dict] = None

+ """Keyword arguments passed to the pipeline."""

+ quantization_kwargs: Optional[dict] = None

+ """Keyword arguments passed to ``BitsAndBytesConfig`` for quantization."""

+

+ class Config:

+ """Configuration for this pydantic object."""

+

+ extra = Extra.forbid

+

+ @classmethod

+ def from_model_id(

+ cls,

+ model_id: str,

+ task: str,

+ device: int = -1,

+ model_kwargs: Optional[dict] = {},

+ pipeline_kwargs: Optional[dict] = {},

+ quantization_kwargs: Optional[dict] = {},

+ **kwargs: Any,

+ ) -> LLM:

+ """Construct the pipeline object from model_id and task."""

+ try:

+ import torch

+ from transformers import (

+ AutoModelForCausalLM,

+ AutoModelForSeq2SeqLM,

+ AutoTokenizer,

+ BitsAndBytesConfig

+ )

+ from transformers import pipeline as hf_pipeline

+ from transformers import StoppingCriteria, StoppingCriteriaList

+

+ except ImportError:

+ raise ValueError(

+ "Could not import the torch or transformers python package. "

+ "Please install them with `pip install torch transformers`."

+ )

+

+ _model_kwargs = model_kwargs or {}

+ tokenizer = AutoTokenizer.from_pretrained(model_id, **_model_kwargs)

+

+ try:

+ quantization_config = None

+ if quantization_kwargs:

+ bnb_4bit_compute_dtype = quantization_kwargs.get("bnb_4bit_compute_dtype", torch.float32)

+ if bnb_4bit_compute_dtype == "bfloat16":

+ quantization_kwargs["bnb_4bit_compute_dtype"] = torch.bfloat16

+ elif bnb_4bit_compute_dtype == "float16":

+ quantization_kwargs["bnb_4bit_compute_dtype"] = torch.float16

+ elif bnb_4bit_compute_dtype == "float32":

+ quantization_kwargs["bnb_4bit_compute_dtype"] = torch.float32

+

+ quantization_config = BitsAndBytesConfig(**quantization_kwargs)

+

+ torch_dtype = _model_kwargs.get("torch_dtype", torch.float32)

+ if torch_dtype is not None:

+ if torch_dtype == "bfloat16":

+ _model_kwargs["torch_dtype"] = torch.bfloat16

+ elif torch_dtype == "float16":

+ _model_kwargs["torch_dtype"] = torch.float16