[URGENT] Reducing our usage of GitHub Runners #14376

Comments

This PR disables all CI Jobs for macOS and Windows, to reduce GitHub Cost. Details here: apache/nuttx#14376

|

As commented by @xiaoxiang781216:

I suggest that we monitor the GitHub Cost after disabling macOS and Windows Jobs. It's possible that macOS and Windows Jobs are contributing a huge part of the cost. We could re-enable and simplify them after monitoring. |

|

One of the methods proposed by, if I remember correctly @btashton, is to replace many simple configurations for some boards (mostly for peripherals testing) with one large |

|

@raiden00pl Yep I agree. Or we could test a complex target like |

|

Here's another comment about macOS and Windows by @yamt: #14377 (comment) |

|

sorry, let me ask a dumb question. |

@yamt It's probably a special plan negotiated by ASF and GitHub? It's not mentioned in the ASF Policy for GitHub Actions: https://infra.apache.org/github-actions-policy.html I find this "contract" a little strange. Why are all ASF Projects subjected to the same quotas? And why can't we increase the quota if we happen to have additional funding? Update: More info here: https://cwiki.apache.org/confluence/display/INFRA/GitHub+self-hosted+runners

Update 2: This sounds really complicated. I'd rather use my own Mac Mini to execute the NuttX CI Tests, once a day? |

do you know if the macos/windows premium applies as usual?

yea, i guess projects have very different sizes/demands. |

Is there any merit in "farming out" CI tests to those with boards? I think there was a discussion about NuttX owning a suite of boards, but I'm not sure where that got to, and it would depend on just 1 or 2 people managing it. As an aside, is there a guide to self-running CI? As I work on a custom board it would be good for me to do this occasionally, but I have no idea where to start! |

|

@TimJTi Here's how I do daily testing on Milk-V Duo S SBC: https://lupyuen.github.io/articles/sg2000a |

And I just RTFM...the "official" guide is here so I'll review both and hopefully get it working - and submit any tweaks/corrections/enhancements I find are needed to the NuttX "How To" documentation |

These work, but it does not describe the entire CI, just how to run pytest checks for |

|

Yes, let's cut what we can (but keep at least minimal functional configure, build, and syntax testing) and see what the cost reduction is. We need to show Apache we are working on the problem. So far the optimizations did not cut the usage and we are in danger of losing all CI :-( On the other hand, it does not seem fair to share the same CI quota as small projects. NuttX is a fully featured RTOS working on ~1000 different devices. In order to keep project code quality we need the CI. Maybe it's time to rethink / redesign the CI test architecture and implementation from scratch? |

|

Another problem is that people very often send unfinished, undescribed PRs that are updated without a comment or request, and each update triggers the whole big CI process again :-( Some changes are sometimes required and we cannot avoid that, this is part of the process. But maybe we can make something more "adaptive", so that only minimal CI is launched by default, preferably only in the area that was changed, and then with all approvals we can manually trigger one final big check before merge? Long story short: We can switch CI test runs to manual trigger for now to see how it reduces costs. I would see two buttons to start Basic and Advanced (maybe also Full = current setup) CI. |
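The "only in the area that was changed" idea can be sketched as a check on the paths a PR touches. This is a minimal sketch, not the project's actual build rules: it assumes that files under `arch/<arch>/` and `boards/<arch>/` belong to a single architecture and that anything else may affect all of them. The names `touched_archs` and `is_simple_pr` are hypothetical.

```python
from pathlib import PurePosixPath

# Hypothetical marker meaning "this file may affect every architecture".
ALL_ARCHS = "*"

def touched_archs(changed_files: list[str]) -> set[str]:
    """Map changed file paths to the architectures they belong to."""
    archs: set[str] = set()
    for name in changed_files:
        parts = PurePosixPath(name).parts
        # Assumed layout: arch/<arch>/... and boards/<arch>/...
        if len(parts) >= 3 and parts[0] in ("arch", "boards"):
            archs.add(parts[1])
        else:
            archs.add(ALL_ARCHS)
    return archs

def is_simple_pr(changed_files: list[str]) -> bool:
    """True when every changed file sits under exactly one architecture."""
    archs = touched_archs(changed_files)
    return len(archs) == 1 and ALL_ARCHS not in archs
```

A PR for which `is_simple_pr` returns `True` could get the minimal CI run by default, while everything else would wait for the manually triggered full check.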

|

@cederom Maybe our PRs should have a Mandatory Field: Which NuttX Config to build, e.g. |

People often can't fill in even one single sentence to describe Summary, Impact, Testing :D This may be detected automatically... or we can just see which architecture is the cheapest one and use it for all basic tests? |

Contributors often use CI to test all configurations instead of testing changes locally. On one hand I understand this, because compiling all configurations on a local machine takes a lot of time. On the other hand, I'm not sure CI is meant for this purpose (especially when we have limits on its use).

It won't work. Users are lazy, and in order to choose what needs to be compiled correctly, you need a comprehensive knowledge of the entire NuttX, which is not that easy. |

|

So it looks like for now, where dramatic steps need to be taken, we need to mark all PR as drafts and start CI by hand when we are sure all is ready for merge? o_O |

|

When we submit or update a Complex PR that affects All Architectures (Arm, RISC-V, Xtensa, etc): CI Workflow shall run only half the jobs. Previously CI Workflow would run `arm-01` to `arm-14`, now we will run only `arm-01` to `arm-07`.

When the Complex PR is Merged: CI Workflow will still run all jobs `arm-01` to `arm-14`.

Simple PRs with One Single Arch / Board will build the same way as before: `arm-01` to `arm-14`.

This is explained here: apache#14376

Note that this version of `arch.yml` has diverged from `nuttx-apps`, since we are unable to merge apache#14377
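The job selection described in this commit message can be sketched as a small function. This is only an illustration of the rule as stated here, not the contents of `arch.yml`; the function name and its two boolean parameters are hypothetical.

```python
# Job names follow the `arm-01` .. `arm-14` pattern quoted in the commit message.
ALL_ARM_JOBS = [f"arm-{n:02d}" for n in range(1, 15)]

def select_arm_jobs(is_complex_pr: bool, is_merged: bool) -> list[str]:
    """Return the arm jobs to run for a PR event (hypothetical helper)."""
    if is_complex_pr and not is_merged:
        # Complex PR that is submitted or updated: run only the first half.
        return ALL_ARM_JOBS[: len(ALL_ARM_JOBS) // 2]
    # Merged Complex PRs and Simple PRs keep the full range, as before.
    return list(ALL_ARM_JOBS)
```

With 14 jobs, the first half is `arm-01` to `arm-07`, which matches the range given above.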

|

@lupyuen It looks like I made a mistake with some commit messages, which caused our branch to get referenced in a few issues in the apache repo. My apologies. I believe I have removed the commit message references, but if there is anything else I need to do to fix this, please let me know and I will get on it ASAP. |

|

@stbenn No worries thanks :-) |

|

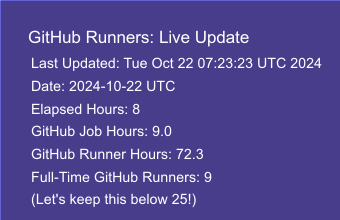

4 Days to Festivity: Yesterday we consumed 13 Full-Time GitHub Runners (half of the ASF Quota for GitHub Runners)... Past 7 Days: We used an average of 9 Full-Time GitHub Runners... So we're on track to make ASF very happy on 30 Oct! Let's monitor today... |

|

Thank you @lupyuen for your amazing work!! Have a good calm weekend :-) :-) |

|

3 Days to Tranquility: Yesterday was a quiet Saturday (no more Release Builds yay!). We consumed only 4 Full-Time GitHub Runners... Let's hope today will be a peaceful Sunday... |

|

Something strange about Network Timeouts in our Docker Workflows:

First Run fails while downloading something from GitHub.

Second Run fails again, while downloading NimBLE from GitHub.

Third Run succeeds.

Why do we keep seeing these errors: GitHub Actions with Docker, can't connect to GitHub itself? Is something misconfigured in our Docker Image? But the exact same Docker Image runs fine on my own Build Farm. It doesn't show any errors. Is GitHub Actions starting our Docker Container with the wrong MTU (Network Packet Size)? 🤔

Meanwhile I'm running a script to Restart Failed Jobs on our NuttX Mirror Repos: restart-failed-job.sh |
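The pattern above (two failures, then success) is typical of transient network errors. One common mitigation is to retry the download with exponential backoff. The sketch below is a generic illustration, not what `restart-failed-job.sh` does; the `retry` helper and its parameters are hypothetical.

```python
import time
from typing import Callable, TypeVar

T = TypeVar("T")

def retry(action: Callable[[], T], attempts: int = 3, base_delay: float = 1.0,
          sleep: Callable[[float], None] = time.sleep) -> T:
    """Call `action` until it succeeds or `attempts` tries have failed."""
    for attempt in range(1, attempts + 1):
        try:
            return action()
        except OSError:
            # Network failures such as ConnectionError and TimeoutError
            # are subclasses of OSError.
            if attempt == attempts:
                raise
            # Wait 1x, 2x, 4x ... the base delay before the next try.
            sleep(base_delay * 2 ** (attempt - 1))
    raise ValueError("attempts must be at least 1")
```

Retrying only hides the symptom. It would not answer the question of why the container cannot reach GitHub reliably in the first place.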

|

2 Days to Transcendence: Yesterday we consumed 10 Full-Time GitHub Runners. We peaked briefly at 21 while compiling a few NuttX Apps. Let's keep on monitoring thanks! |

Monitoring our CI Servers 24 x 7

This runs on my 4K TV (Xiaomi 65-inch) all day, all night: When I'm out on Overnight Hikes: I check my phone at every water break: I have GitHub Scripts that will run on Termux Android (remember to

|

|

Lup's Operations Center =) |

|

1 Day to Utopia: Yesterday was a busy Monday, we consumed 14 Full-Time GitHub Runners. That's 56% of the ASF Quota for Full-Time Runners... We peaked briefly at 26 Full-Time Runners. Let's hang in there thanks! :-) |

|

2 days but we should be fine thanks to our Super Hero @lupyuen !! AVE =) |

|

Thank you so much @cederom! :-) |

|

Kudos Lup!

Best regards

Alin

|

|

0 Days to Final Audit: ASF Infra Team will be checking on us one last time today! Yesterday was a super busy Tuesday, we consumed 15 Full-Time GitHub Runners (peaked briefly at 31) Past 7 Days: We consumed 12 Full-Time Runners, which is half the ASF Quota of 25 Full-Time Runners yay! FYI: Our "Monthly Bill" for GitHub Actions used to be $18K... Right now our Monthly Bill is $14K. And still dropping! Let's wait for the good news from ASF, thank you everyone! 🙏 |
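The percentages quoted in these daily updates follow from simple arithmetic. The sketch below assumes that one Full-Time Runner means one runner kept busy 24 hours a day (the thread does not spell out the definition), and all function names are illustrative.

```python
# Quota quoted in the thread: 25 Full-Time Runners.
ASF_QUOTA_FULL_TIME_RUNNERS = 25

def full_time_runners(runner_minutes: float, period_days: float) -> float:
    """Average number of runners busy around the clock (assumed definition)."""
    return runner_minutes / (period_days * 24 * 60)

def quota_share(runners: float, quota: float = ASF_QUOTA_FULL_TIME_RUNNERS) -> float:
    """Fraction of the quota consumed."""
    return runners / quota

def percent_drop(before: float, after: float) -> float:
    """Relative reduction from `before` to `after`, in percent."""
    return (before - after) / before * 100

print(f"{quota_share(14):.0%} of quota on the busy Monday")
print(f"{quota_share(12):.0%} of quota over the past 7 days")
print(f"{percent_drop(18_000, 14_000):.1f}% lower monthly bill")
```

On these numbers, the 7-day average of 12 runners sits just under half of the quota, and the monthly bill has dropped by a little over a fifth.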

|

🙏 🙏 🙏 |

|

GitHub Actions had some laggy issues just now: https://www.githubstatus.com/incidents/9yk1fbk0qjjc So please ignore the over-inflated data in our report (because everything got lagged). Thanks! |

|

It's Oct 31 and our CI Servers are still running. We made it yay! 🎉 We got plenty to do:

Thank you everyone for making this happen! 🙏 |

|

BIG THANK YOU @lupyuen FOR YOUR HELP, TIME, AND PATIENCE!! |

Due to the [recent cost-cutting](apache/nuttx#14376), we are no longer running PR Merge Jobs in the `nuttx` and `nuttx-apps` repos. For this to happen, I am now running a script on my computer that will cancel any PR Merge Jobs that appear: [kill-push-master.sh](https://github.com/lupyuen/nuttx-release/blob/main/kill-push-master.sh) This PR disables PR Merge Jobs permanently, so that we no longer need to run the script. This prevents our CI Charges from over-running, in case the script fails to operate properly.
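The selection step of such a cancel script can be sketched as a filter over workflow runs. This is a hypothetical illustration, not the logic of `kill-push-master.sh`: it assumes that a PR Merge Job shows up as a `push` event on the `master` branch, and that each run is a dict with `databaseId`, `event`, `headBranch` and `status` keys (modelled on the JSON fields of `gh run list`).

```python
# Statuses assumed to mean "still consuming or waiting for a runner".
ACTIVE_STATUSES = {"queued", "in_progress"}

def runs_to_cancel(runs: list[dict]) -> list[int]:
    """Return the IDs of active runs that look like PR Merge Jobs."""
    return [
        run["databaseId"]
        for run in runs
        if run["event"] == "push"
        and run["headBranch"] == "master"
        and run["status"] in ACTIVE_STATUSES
    ]
```

Each returned ID could then be passed to `gh run cancel`. Disabling the jobs in the workflow itself, as this PR does, removes the need for any such polling.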

[Article] Optimising the Continuous Integration for NuttX

Within Two Weeks: We squashed our GitHub Actions spending from $4,900 (weekly) down to $890. Thank you everyone for helping out, we saved our CI Servers from shutdown! 🎉

This article explains everything we did in the (Semi-Chaotic) Two Weeks:

(1) Shut down the macOS and Windows Builds, revive them in a different form

(2) Merge Jobs are super costly, we moved them to the NuttX Mirror Repo

(3) We Halved the CI Checks for Complex PRs

(4) Simple PRs are already quite fast. (Sometimes 12 Mins!)

(5) Coding the Build Rules for our CI Workflow, monitoring our CI Servers 24 x 7

(6) We can’t run All CI Checks, but NuttX Devs can help ourselves!

Check out the article: https://lupyuen.codeberg.page/articles/ci3.html |

Hi All: We have an ultimatum to reduce (drastically) our usage of GitHub Actions. Or our Continuous Integration will halt totally in Two Weeks. Here's what I'll implement within 24 hours for `nuttx` and `nuttx-apps` repos:

1. When we submit or update a Complex PR that affects All Architectures (Arm, RISC-V, Xtensa, etc): CI Workflow shall run only half the jobs. Previously CI Workflow would run `arm-01` to `arm-14`, now we will run only `arm-01` to `arm-07`. (This will reduce GitHub Cost by 32%)

2. When the Complex PR is Merged: CI Workflow will still run all jobs `arm-01` to `arm-14`. (Simple PRs with One Single Arch / Board will build the same way as before: `arm-01` to `arm-14`)

3. For NuttX Admins: Our Merge Jobs are now at github.com/NuttX/nuttx. We shall have only Two Scheduled Merge Jobs per day. I shall quickly Cancel any Merge Jobs that appear in `nuttx` and `nuttx-apps` repos. Then at 00:00 UTC and 12:00 UTC: I shall start the Latest Merge Job at `nuttxpr`. (This will reduce GitHub Cost by 17%)

4. macOS and Windows Jobs (msys2 / msvc): They shall be totally disabled until we find a way to manage their costs. (GitHub charges 10x premium for macOS runners, 2x premium for Windows runners!)

   Let's monitor the GitHub Cost after disabling macOS and Windows Jobs. It's possible that macOS and Windows Jobs are contributing a huge part of the cost. We could re-enable and simplify them after monitoring.

   (This must be done for BOTH `nuttx` and `nuttx-apps` repos. Sadly the ASF Report for GitHub Runners doesn't break down the usage by repo, so we'll never know how much macOS and Windows Jobs are contributing to the cost. That's why we need CI: Disable all jobs for macOS and Windows #14377)

   (Wish I could run NuttX CI Jobs on my M2 Mac Mini. But the CI Script only supports Intel Macs sigh. Buy a Refurbished Intel Mac Mini?)

We have done an Analysis of CI Jobs over the past 24 hours:

https://docs.google.com/spreadsheets/d/1ujGKmUyy-cGY-l1pDBfle_Y6LKMsNp7o3rbfT1UkiZE/edit?gid=0#gid=0

- Many CI Jobs are Incomplete: We waste GitHub Runners on jobs that eventually get superseded and cancelled

- When we Halve the CI Jobs: We reduce the wastage of GitHub Runners

- Scheduled Merge Jobs will also reduce wastage of GitHub Runners, since most Merge Jobs don't complete (only 1 completed yesterday)
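To see why the macOS and Windows premiums matter, runner minutes can be weighted by the multipliers quoted above (10x for macOS, 2x for Windows, with Linux assumed to be the 1x baseline). The sketch below is illustrative only: the minute counts are made up, since the ASF report does not break down usage by job type.

```python
# Multipliers quoted in the issue, with Linux assumed to be the 1x baseline.
PREMIUM = {"linux": 1, "windows": 2, "macos": 10}

def billable_units(minutes_by_os: dict[str, float]) -> float:
    """Runner minutes weighted by each operating system's premium."""
    return sum(PREMIUM[os_name] * minutes
               for os_name, minutes in minutes_by_os.items())

def cost_share(minutes_by_os: dict[str, float], os_name: str) -> float:
    """Fraction of the weighted total that one operating system accounts for."""
    total = billable_units(minutes_by_os)
    if total == 0:
        return 0.0
    return PREMIUM[os_name] * minutes_by_os.get(os_name, 0) / total

# Hypothetical usage figures, for illustration only.
usage = {"linux": 1000, "windows": 100, "macos": 100}
print(f"macOS share of cost: {cost_share(usage, 'macos'):.0%}")
```

Even when macOS jobs are a small fraction of the raw minutes, the 10x weighting can make them a large fraction of the bill, which is the motivation for disabling them first and measuring afterwards.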

See the ASF Policy for GitHub Actions
