From b655d13069243c5384c194f6bdedb9222d8e649a Mon Sep 17 00:00:00 2001

From: Alexis Thual <alexisthual@gmail.com>

Date: Mon, 12 Feb 2024 12:18:31 +0100

Subject: [PATCH] Allow disabling cache

---

README.md | 3 +-

.../endpoints/alignments_explorer.py | 14 +++-----

.../endpoints/features_explorer.py | 21 +++++++-----

api/src/brain_cockpit/utils.py | 34 ++++++++++++++++---

4 files changed, 50 insertions(+), 22 deletions(-)
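For reviewers, here is a minimal, stdlib-only sketch of the call-time switch that `bc_cache` adds in utils.py. It makes a few assumptions. `bc` is mimicked with a `SimpleNamespace` that exposes only `config`. joblib's on-disk `Memory` is swapped for an in-memory dict (`_fake_disk_cache`, hypothetical) so the snippet runs without joblib. `bc_cache_sketch` and `load_data` are illustrative names. The patch indexes `bc.config["cache_folder"]` directly, whereas this sketch uses `.get`, so a missing key also disables caching. That is a choice made here, not the patch's behaviour.

```python
import functools
from types import SimpleNamespace

# Hypothetical stand-in for the brain-cockpit app object: only `config` is used.
bc = SimpleNamespace(config={"cache_folder": None})

# Hypothetical in-memory stand-in for joblib.Memory, so the sketch needs no joblib.
_fake_disk_cache = {}


def bc_cache_sketch(bc):
    """Cache results only when bc.config["cache_folder"] is a non-empty path."""

    def _inner_decorator(func):
        @functools.wraps(func)
        def wrapped(*args, **kwargs):
            # Read the setting at call time, as the patch does; None, "" and
            # a missing key all mean "caching disabled" in this sketch.
            cache_folder = bc.config.get("cache_folder")
            if not cache_folder:
                return func(*args, **kwargs)
            key = (cache_folder, func.__qualname__, args, tuple(sorted(kwargs.items())))
            if key not in _fake_disk_cache:
                _fake_disk_cache[key] = func(*args, **kwargs)
            return _fake_disk_cache[key]

        return wrapped

    return _inner_decorator


calls = []


@bc_cache_sketch(bc)
def load_data(x):
    calls.append(x)  # record every real execution of the body
    return x * 2


load_data(3)
load_data(3)
print(len(calls))  # caching disabled, the body ran twice: 2

bc.config["cache_folder"] = "/tmp/bc-cache"  # hypothetical path
calls.clear()
load_data(3)
load_data(3)
print(len(calls))  # caching enabled, the body ran once: 1
```

One simplification may be worth considering. joblib documents that `Memory(location=None)` performs no caching, so if `get_memory` passed `None` for an empty setting, the wrapper might not be needed. The patched wrapper also builds a new memorized function and logs a message on every call. Resolving the decision once, at decoration time, would avoid that overhead, at the cost of ignoring later config changes.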

diff --git a/README.md b/README.md

index 2839f07..cf4abfe 100644

--- a/README.md

+++ b/README.md

@@ -9,7 +9,8 @@

- large surface fMRI datasets projected on surface meshes

- alignments computed between brains, such as those computed with [fugw](https://alexisthual.github.io/fugw/index.html)

-You can try our [online demo](https://brain-cockpit.athual.fr/)!

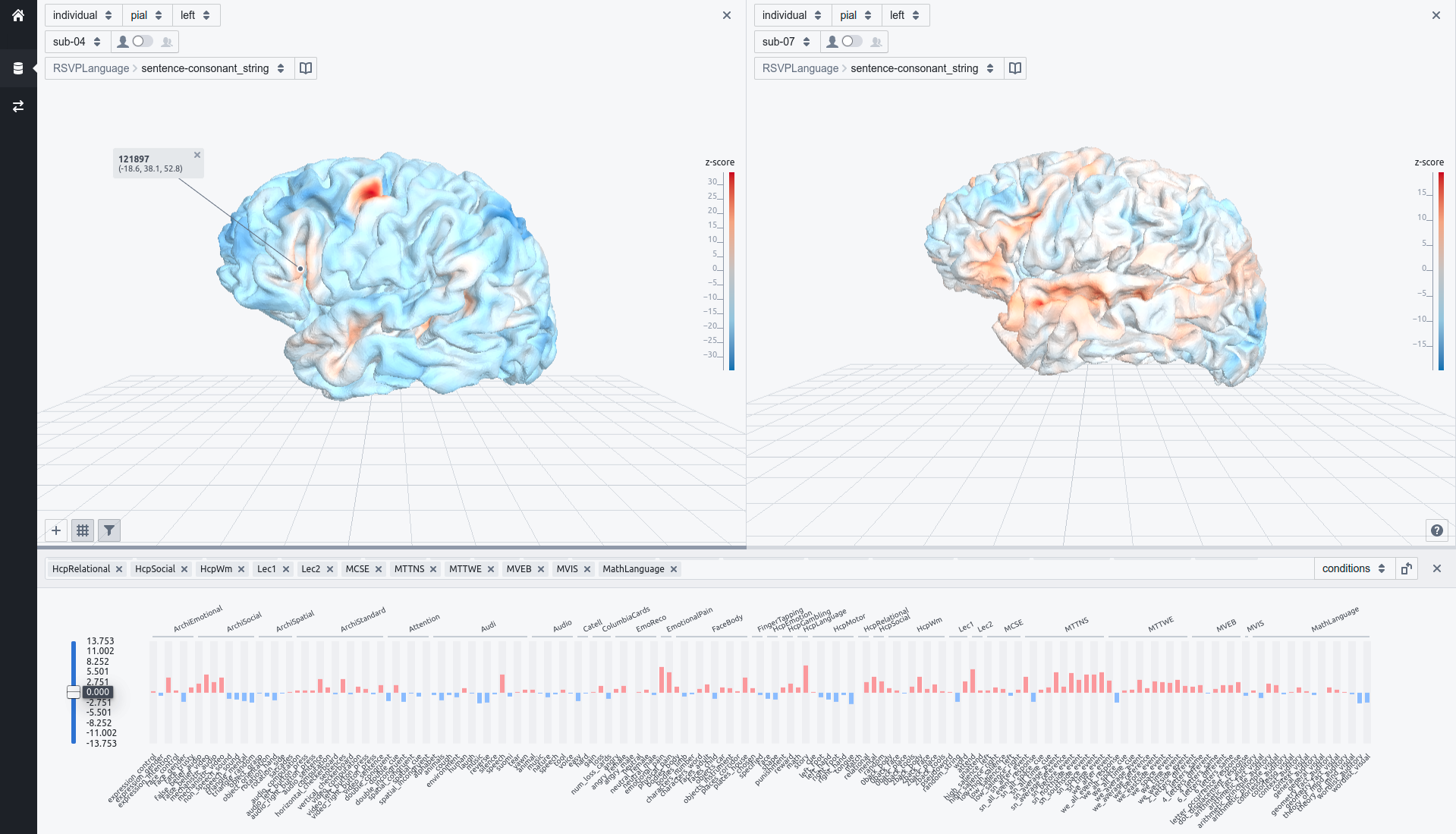

+🚀 You can try our [online demo](https://brain-cockpit.tc.humanbrainproject.eu/) displaying the [Individual Brain Charting dataset](https://individual-brain-charting.github.io/docs/), a vast collection of fMRI protocols acquired in a small number of human participants.

+This project was deployed with [EBRAINS](https://www.ebrains.eu/).

diff --git a/api/src/brain_cockpit/endpoints/alignments_explorer.py b/api/src/brain_cockpit/endpoints/alignments_explorer.py

index e75aab7..703418d 100644

--- a/api/src/brain_cockpit/endpoints/alignments_explorer.py

+++ b/api/src/brain_cockpit/endpoints/alignments_explorer.py

@@ -1,23 +1,20 @@

+"""Util functions to create Alignments Explorer endpoints."""

+

import os

import pickle

-

from pathlib import Path

import nibabel as nib

import numpy as np

import pandas as pd

+from flask import jsonify, request, send_from_directory

from brain_cockpit.scripts.gifti_to_gltf import create_dataset_glft_files

from brain_cockpit.utils import console, load_dataset_description

-from flask import jsonify, request, send_from_directory

def create_endpoints_one_alignment_dataset(bc, id, dataset):

- """

- For a given alignment dataset, generate endpoints

- serving dataset meshes and alignment transforms.

- """

-

+ """Create all API endpoints for exploring a given Alignments dataset."""

df, dataset_path = load_dataset_description(

config_path=bc.config_path, dataset_path=dataset["path"]

)

@@ -126,8 +123,7 @@ def align_single_voxel():

def create_all_endpoints(bc):

- """Create endpoints for all available alignments datasets."""

-

+ """Create endpoints for all available Alignments datasets."""

if "alignments" in bc.config and "datasets" in bc.config["alignments"]:

# Iterate through each alignment dataset

for dataset_id, dataset in bc.config["alignments"]["datasets"].items():
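The diff for `features_explorer.py` that follows adds docstrings to `hemi_to_side` and `side_to_hemi`. Its hunks show only the `left`/`right` and `lh`/`rh` branches, so the fallback for other labels is not visible here. Below is a table-driven sketch of the same mapping that derives one direction from the other so the two stay consistent. It assumes unknown labels should raise `ValueError`, and the `HEMI_TO_SIDE`/`SIDE_TO_HEMI` tables are hypothetical names.

```python
# Hypothetical lookup tables; the reverse table is derived from the forward one.
HEMI_TO_SIDE = {"left": "lh", "right": "rh"}
SIDE_TO_HEMI = {side: hemi for hemi, side in HEMI_TO_SIDE.items()}


def hemi_to_side(hemi):
    """Map a nilearn hemisphere label ("left"/"right") to "lh"/"rh"."""
    try:
        return HEMI_TO_SIDE[hemi]
    except KeyError:
        # Assumption: unknown labels are an error rather than passed through.
        raise ValueError(f"Unknown hemisphere label: {hemi!r}") from None


def side_to_hemi(side):
    """Map "lh"/"rh" back to the nilearn label ("left"/"right")."""
    try:
        return SIDE_TO_HEMI[side]
    except KeyError:
        raise ValueError(f"Unknown side label: {side!r}") from None


print(hemi_to_side("left"), side_to_hemi("rh"))  # lh right
```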

diff --git a/api/src/brain_cockpit/endpoints/features_explorer.py b/api/src/brain_cockpit/endpoints/features_explorer.py

index dada075..6a45078 100644

--- a/api/src/brain_cockpit/endpoints/features_explorer.py

+++ b/api/src/brain_cockpit/endpoints/features_explorer.py

@@ -1,22 +1,24 @@

+"""Util functions to create Features Explorer endpoints."""

+

import json

import os

-

from pathlib import Path

import nibabel as nib

import numpy as np

import pandas as pd

+from flask import jsonify, request, send_from_directory

from brain_cockpit import utils

from brain_cockpit.scripts.gifti_to_gltf import create_dataset_glft_files

from brain_cockpit.utils import console, load_dataset_description

-from flask import jsonify, request, send_from_directory

# UTIL FUNCTIONS

# These functions are useful for loading data

def hemi_to_side(hemi):

+ """Transform hemisphere label from nilearn to HCP."""

if hemi == "left":

return "lh"

elif hemi == "right":

@@ -25,6 +27,7 @@ def hemi_to_side(hemi):

def side_to_hemi(hemi):

+ """Transform hemisphere label from HCP to nilearn."""

if hemi == "lh":

return "left"

elif hemi == "rh":

@@ -33,6 +36,7 @@ def side_to_hemi(hemi):

def multiindex_to_nested_dict(df):

+    """Transform a DataFrame with a MultiIndex into a nested Python dict."""

if isinstance(df.index, pd.core.indexes.multi.MultiIndex):

return dict(

(k, multiindex_to_nested_dict(df.loc[k]))

@@ -46,7 +50,8 @@ def multiindex_to_nested_dict(df):

def parse_metadata(df):

- """

+    """Parse metadata DataFrame.

+

Parameters

----------

df: pandas DataFrame

@@ -84,11 +89,12 @@ def parse_metadata(df):

def create_endpoints_one_features_dataset(bc, id, dataset):

- memory = utils.get_memory(bc)

+ """Create all API endpoints for exploring a given Features dataset."""

- @memory.cache

+ @utils.bc_cache(bc)

def load_data(df, config_path=None, dataset_path=None):

- """

+ """Load data used in endpoints.

+

Parameters

----------

dataset_path: str

@@ -507,8 +513,7 @@ def get_contrast_mean():

def create_all_endpoints(bc):

- """Create endpoints for all available surface datasets."""

-

+ """Create endpoints for all available Features datasets."""

if "features" in bc.config and "datasets" in bc.config["features"]:

# Iterate through each surface dataset

for dataset_id, dataset in bc.config["features"]["datasets"].items():
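In the hunk above, `multiindex_to_nested_dict` calls itself on `df.loc[k]` for each key `k` of a `MultiIndex`, which appears to nest one index level per recursion step. The same idea can be sketched in plain Python, with tuple keys standing in for MultiIndex rows. This is an illustration only. `tuple_keys_to_nested_dict` is a hypothetical name, the sketch assumes every key has the same length, and it does not reproduce how the patched function handles the innermost level, which this hunk does not show.

```python
from collections import defaultdict


def tuple_keys_to_nested_dict(flat):
    """Nest a {(level0, level1, ...): value} mapping, one dict level per key element.

    Each tuple key plays the role of one row's MultiIndex labels.
    Assumes all keys have the same length.
    """
    # Keys of length 1 are leaves: unwrap them and stop recursing.
    if all(len(key) == 1 for key in flat):
        return {key[0]: value for key, value in flat.items()}
    # Group rows by their first label, keeping the remaining labels as the key.
    groups = defaultdict(dict)
    for (first, *rest), value in flat.items():
        groups[first][tuple(rest)] = value
    # Recurse on each group, much like df.loc[k] drops the first index level.
    return {k: tuple_keys_to_nested_dict(sub) for k, sub in groups.items()}


flat = {
    ("sub-01", "task-a"): 1.0,
    ("sub-01", "task-b"): 2.0,
    ("sub-02", "task-a"): 3.0,
}
print(tuple_keys_to_nested_dict(flat))
# {'sub-01': {'task-a': 1.0, 'task-b': 2.0}, 'sub-02': {'task-a': 3.0}}
```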

diff --git a/api/src/brain_cockpit/utils.py b/api/src/brain_cockpit/utils.py

index 08f30e6..c343c13 100644

--- a/api/src/brain_cockpit/utils.py

+++ b/api/src/brain_cockpit/utils.py

@@ -1,10 +1,11 @@

-import os

+"""Util functions used throughout brain-cockpit."""

+import functools

+import os

from pathlib import Path

import pandas as pd

import yaml

-

from joblib import Memory

from rich.console import Console

from rich.progress import (

@@ -24,6 +25,7 @@

# `rich` progress bar used throughout the codebase

def get_progress(**kwargs):

+ """Return rich progress bar."""

return Progress(

SpinnerColumn(),

TaskProgressColumn(),

@@ -38,10 +40,35 @@ def get_progress(**kwargs):

def get_memory(bc):

+ """Return joblib memory."""

return Memory(bc.config["cache_folder"], verbose=0)

+def bc_cache(bc):
+    """Cache functions with brain-cockpit cache."""
+
+    def _inner_decorator(func):
+        @functools.wraps(func)
+        def wrapped(*args, **kwargs):
+            # Use joblib cache if and only if cache_folder is defined
+            if (
+                bc.config["cache_folder"] is not None
+                and bc.config["cache_folder"] != ""
+            ):
+                console.log(f"Using cache {bc.config['cache_folder']}")
+                mem = get_memory(bc)
+                return mem.cache(func)(*args, **kwargs)
+            else:
+                console.log("Not using cache for dataset")
+                return func(*args, **kwargs)
+
+        return wrapped
+
+    return _inner_decorator
+
+

def load_config(config_path=None, verbose=False):

+ """Load brain-cockpit yaml config from path."""

config = None

if config_path is not None and os.path.exists(config_path):

@@ -60,8 +87,7 @@ def load_config(config_path=None, verbose=False):

def load_dataset_description(config_path=None, dataset_path=None):

- """

- Load dataset CSV file.

+ """Load dataset CSV file.

Returns

-------