
Datastreaming

The datastreaming system is being built as part of in-kind work to ESS. It will be the system that the ESS uses to take data and write it to file - basically their equivalent to the ICP. The system may also replace the ICP at ISIS in the future.

In general, the system works by passing both neutron and SE data into Kafka and having clients that either view the data live (like Mantid) or write it to file. Additional information can be found here and here.

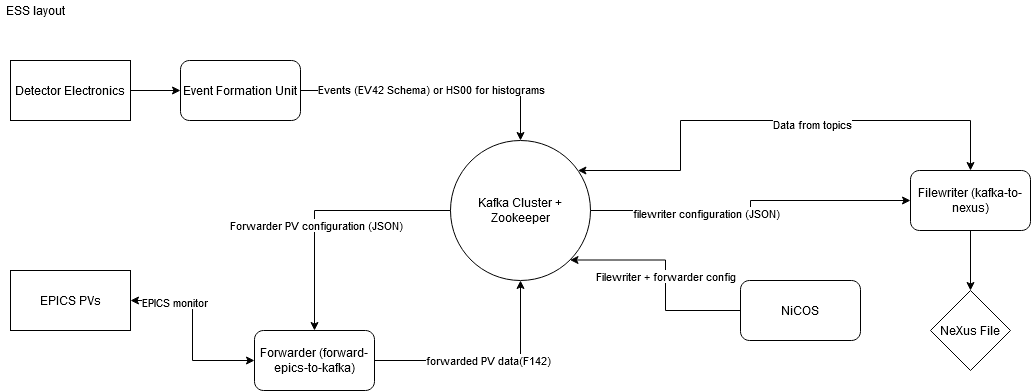

The proposed datastreaming layout looks something like this, not including the Mantid steps or anything before event data is collected:

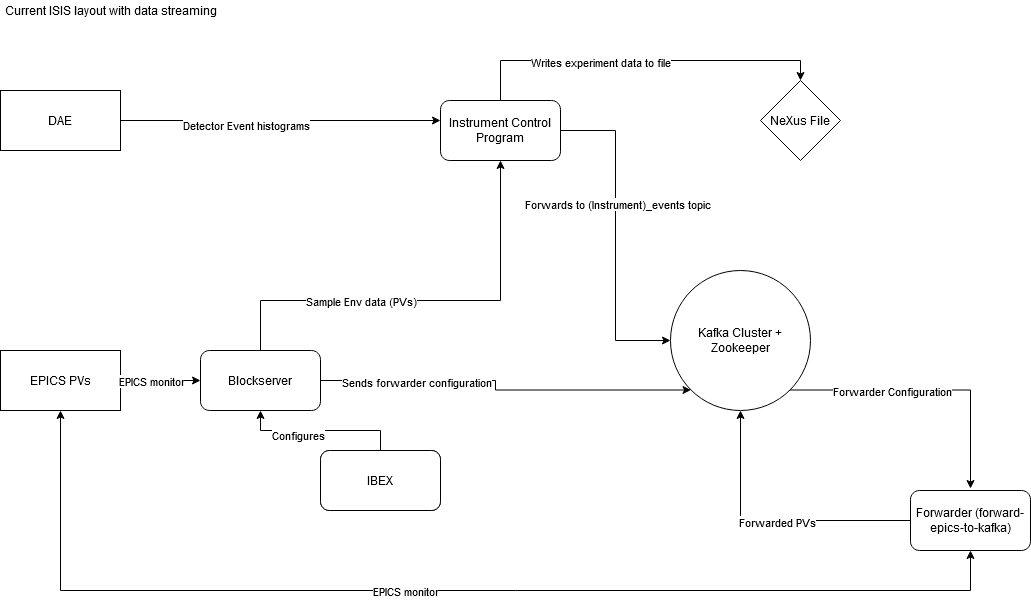

Part of our in-kind contribution to datastreaming is to test the system in production at ISIS. Currently it is being tested in the following way, with explanations of each component below:

There are two Kafka clusters, production (livedata.isis.cclrc.ac.uk:9092) and development (tenten.isis.cclrc.ac.uk:9092 or sakura.isis.cclrc.ac.uk:9092 or hinata.isis.cclrc.ac.uk:9092). The development cluster is set up to auto-create topics, so when new developer machines are run up all the required topics will be created. The production cluster does not auto-create topics, which means that when a new real instrument comes online the corresponding topics must be created on this cluster. This is done as part of the install script. Credentials for both clusters can be found in the SharePoint.
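As a rough illustration of what creating topics on the production cluster involves, here is a minimal sketch using the `confluent_kafka` admin client. It is not the install script. Only the `_runInfo` suffix appears on this page; the other suffixes, the partition and replication counts, and the function names are assumptions, and the authentication settings needed for the real clusters are omitted.

```python
"""Sketch: create an instrument's topics on a cluster without auto-creation."""

PRODUCTION_BROKER = "livedata.isis.cclrc.ac.uk:9092"

# Only "_runInfo" is mentioned on this page; the other suffixes are placeholders.
TOPIC_SUFFIXES = ("_runInfo", "_events", "_sampleEnv")


def topics_for_instrument(instrument, suffixes=TOPIC_SUFFIXES):
    """Return the topic names for one instrument, e.g. ZOOM -> ZOOM_runInfo."""
    return [f"{instrument.upper()}{suffix}" for suffix in suffixes]


def create_topics(broker, topics, partitions=1, replication=1):
    """Create any of the given topics that do not exist yet; return those created."""
    # Imported here so the naming helper above works without the Kafka client.
    from confluent_kafka.admin import AdminClient, NewTopic

    admin = AdminClient({"bootstrap.servers": broker})
    existing = set(admin.list_topics(timeout=10).topics)
    missing = [t for t in topics if t not in existing]
    if not missing:
        return []
    futures = admin.create_topics(
        [NewTopic(t, num_partitions=partitions, replication_factor=replication)
         for t in missing]
    )
    for future in futures.values():
        future.result()  # raises if the broker rejected the request
    return missing
```

A call such as `create_topics(PRODUCTION_BROKER, topics_for_instrument("ZOOM"))` would then only touch topics that are missing, so it is safe to repeat.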

A Grafana dashboard for the production cluster can be found at madara.isis.cclrc.ac.uk:3000. This shows the topic data rate and other useful information. Admin credentials can also be found in the sharepoint.

Deployment uses Ansible playbooks. The playbooks and instructions for using them can be found here.

The ICP on any instrument that is running in full event mode and with a DAE3 is streaming neutron events into Kafka.

All IBEX instruments are currently forwarding their sample environment PVs into Kafka. This is done in two parts:

BlockServerToKafka is a Python process that runs on each NDX (see code here). It monitors the blockserver config PVs and, any time the config changes, pushes a new configuration to the forwarder via the Kafka topic forwarder_config. This process is written and managed by IBEX developers.

The instrument name for the BlockServerToKafka service is BSKAFKA.
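The "config changes, so push a new configuration" step can be sketched as a diff between the old and new sets of block PVs. This is a minimal illustration, not the BlockServerToKafka code: the function names are hypothetical, and the JSON layout is invented for the example and is not the forwarder's real message schema.

```python
import json


def diff_block_pvs(old_pvs, new_pvs):
    """Return (added, removed) PV names between two configurations, sorted."""
    old, new = set(old_pvs), set(new_pvs)
    return sorted(new - old), sorted(old - new)


def build_forwarder_messages(old_pvs, new_pvs, output_topic):
    """Build JSON messages saying which PVs to stop and start forwarding.

    The message layout is illustrative only, not the forwarder's schema.
    """
    added, removed = diff_block_pvs(old_pvs, new_pvs)
    messages = []
    if removed:
        messages.append(json.dumps({"cmd": "remove", "pvs": removed}))
    if added:
        messages.append(
            json.dumps({"cmd": "add", "pvs": added, "topic": output_topic})
        )
    return messages
```

Each returned string would then be published to the forwarder_config topic. Sending only the difference means an unchanged configuration produces no messages at all.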

The forwarder is a C++ program responsible for taking the EPICS data and pushing it into Kafka. ISIS currently has two instances of the forwarder running (one for production and one for development). Both run as services (Developer Forwarder and Production Forwarder) on NDADATASTREAM, which can be accessed via the ibexbuilder account. The logs for these forwarders are located in C:\Forwarder\dev_forwarder and C:\Forwarder\prod_forwarder.

The filewriter is responsible for taking the neutron and SE data out of Kafka and writing it to a NeXus file. When the ICP ends a run it sends a config message to the filewriter, via Kafka, to tell it to start writing to file.

- The HDF5 Conan library does not seem to build under Windows; it falls over in the Conan step

- ESS takes ownership of the library

- Did not get any further than this as the Conan step failed; the rest of the libraries built

- Not sure what is falling over, but the HDF5 library can probably be fixed

- DATASTREAM is potentially a VM and recursive Hyper-V may not work - confirmed

- Docker Desktop does not run on build 14393, which is what it's on

- I don't think this will work as we need Hyper-V for a Windows build

- Will continue trying to install Docker but so far no luck

- Windows containers are a bit weird and we may just end up with the same problems as #1

Following this link:

- enabled containers and restarted

- installed Docker - weird error but seemed to install:

Start-Service : Failed to start service 'Docker Engine (docker)'.

At C:\Users\ibexbuilder\update-containerhost.ps1:393 char:5

+ Start-Service -Name $global:DockerServiceName

+ ~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~~

+ CategoryInfo : OpenError: (System.ServiceProcess.ServiceController:ServiceController) [Start-Service],

ServiceCommandException

+ FullyQualifiedErrorId : StartServiceFailed,Microsoft.PowerShell.Commands.StartServiceCommand

Docker service then does not start and gives this error in logs:

fatal: Error starting daemon: Error initializing network controller: Error creating default network: HNS failed with error : The object already exists.

A quick google leads to this

After deleting hns.data from C:\ProgramData\Microsoft\Windows\HNS and restarting HNS it still does not work and gives the same error.

The best option here would be to try to get it running natively, as DATASTREAM is itself a virtual machine and Docker does not appear to work on it. We could then run it with NSSM, which is how we run the forwarder, giving consistent service management. We could also probably use the log rotation built into NSSM, which the forwarder already uses.
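To make the NSSM option concrete, here is a small sketch that builds the commands for registering a service with log rotation. The service name, executable path, arguments and rotation size are all hypothetical. The commands are returned as lists rather than run, so nothing is installed by calling the function.

```python
def nssm_commands(service, executable, args, log_dir, rotate_bytes=10 * 1024 * 1024):
    """Return the nssm invocations to install a service with rotating logs."""
    log_file = f"{log_dir}\\{service}.log"
    return [
        # Register the executable (plus its arguments) as a Windows service.
        ["nssm", "install", service, executable, *args],
        # Send stdout and stderr to the same log file.
        ["nssm", "set", service, "AppStdout", log_file],
        ["nssm", "set", service, "AppStderr", log_file],
        # Enable rotation, including while the service is running,
        # once the log file exceeds rotate_bytes.
        ["nssm", "set", service, "AppRotateFiles", "1"],
        ["nssm", "set", service, "AppRotateOnline", "1"],
        ["nssm", "set", service, "AppRotateBytes", str(rotate_bytes)],
    ]
```

Each list could be passed to `subprocess.run(cmd, check=True)` from an administrator prompt. Keeping the settings in one function would let the filewriter and the forwarder services share the same rotation policy.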

The filewriter is now running via a docker-compose script on NDHSPARE62. This uses Docker Desktop rather than the Enterprise Edition and does not use the LCOW framework. We should think about a more permanent solution; however, Docker clearly works on Windows Server 2019 and not on 2016. NDADATASTREAM is running 2016, so it may make sense to update it if we want the filewriter running on it as well.

https://github.com/ISISComputingGroup/isis-filewriter has been created for an easy setup of the filewriter using docker-compose. It is currently hardcoded and requires file_writer_config.ini to be changed manually to point at the runInfo topics. To begin with we ran it just pointing at ZOOM_runInfo, and it successfully wrote files containing event data.

https://github.com/ISISComputingGroup/combine-runinfo has also been created to work around the filewriter only being able to point at one configuration topic, so that we can use the filewriter for all instruments. Its purpose is to run a Kafka Stream Processor that forwards all new configuration changes into the ALL_runInfo topic, to be used with a single instance of the filewriter.

This project is written in Kotlin and then compiled with Gradle to create a runnable .jar file. This is flexible, and we could re-write it in Java if it's used permanently and maintaining another language is an issue.
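The forwarding idea behind combine-runinfo can be sketched in Python with a plain consumer and producer. This is not the project's Kotlin code and does not use Kafka Streams; the consumer group name, offset policy and function names are assumptions. The combined topic is filtered out in Python so that it is never forwarded back into itself.

```python
import re

COMBINED_TOPIC = "ALL_runInfo"
RUNINFO_PATTERN = re.compile(r"^[A-Za-z0-9_]+_runInfo$")


def should_forward(topic):
    """True for per-instrument runInfo topics, never for the combined topic."""
    return topic != COMBINED_TOPIC and RUNINFO_PATTERN.match(topic) is not None


def run(broker, group_id="combine-runinfo-sketch"):
    """Copy every new message on *_runInfo topics into ALL_runInfo."""
    # Imported here so should_forward can be used without the Kafka client.
    from confluent_kafka import Consumer, Producer

    consumer = Consumer({
        "bootstrap.servers": broker,
        "group.id": group_id,
        "auto.offset.reset": "latest",
    })
    producer = Producer({"bootstrap.servers": broker})
    # A leading "^" makes the client treat the subscription as a regex.
    consumer.subscribe(["^.*_runInfo$"])
    try:
        while True:
            msg = consumer.poll(1.0)
            if msg is None or msg.error():
                continue
            if should_forward(msg.topic()):
                producer.produce(COMBINED_TOPIC, value=msg.value(), key=msg.key())
                producer.poll(0)  # serve delivery callbacks
    finally:
        consumer.close()
        producer.flush()
```

With this in place, a single filewriter instance pointed at ALL_runInfo would see run configuration messages from every instrument.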

Currently, system tests are being run to confirm that the start/stop run and event data messages are being sent into Kafka and that a NeXus file is being written with these events. The Kafka cluster and filewriter run in Docker containers for these tests, so the tests must be run on a Windows 10 machine. To run them you will need to install Docker for Windows and add yourself to the docker-users group.

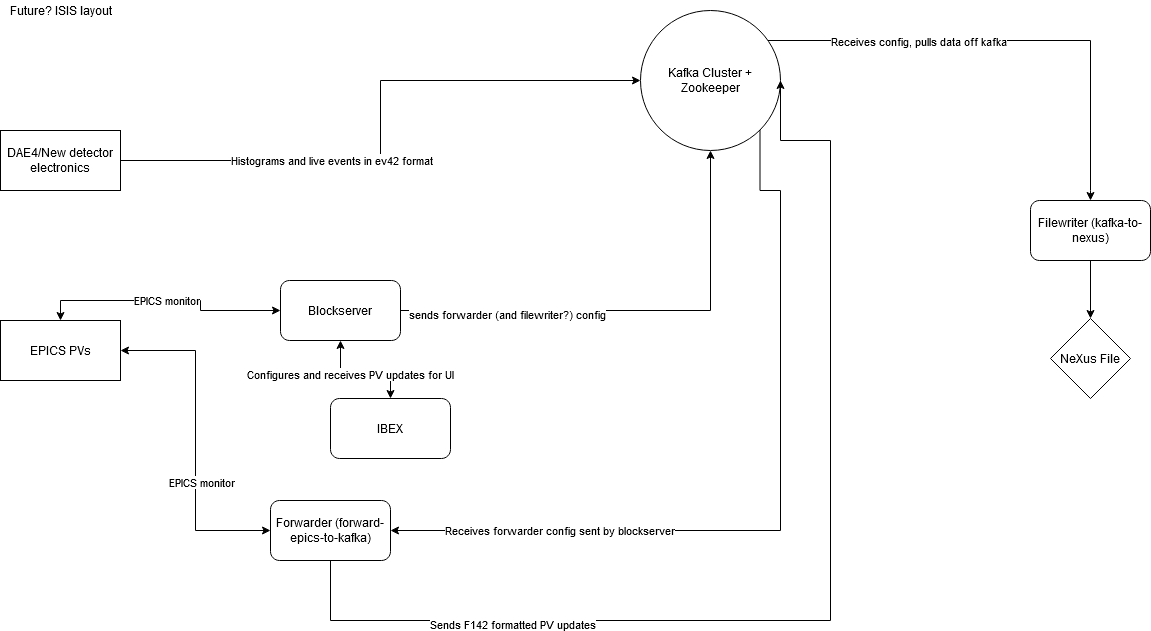

After the in-kind work finishes and during the handover, there are some proposed changes that affect the layout and integration of data streaming at ISIS. This diagram is subject to change, but shows a brief overview of what the future system might look like: